ProUCL Version 5.1

User Guide

Statistical Software for Environmental Applications

for Data Sets with and without Nondetect

Observations

R E S E A R C H A N D D E V E L O P M E N T

2

Notice: Although this work was reviewed by EPA and approved for publication, it may not necessarily reflect official

Agency policy. Mention of trade names and commercial products does not constitute endorsement or

recommendation for use.

129cmb07

ProUCL Version 5.1

User Guide

Statistical Software for Environmental Applications

for Data Sets with and without Nondetect

Observations

Prepared for:

Felicia Barnett, Director

ORD Site Characterization and Monitoring Technical Support Center (SCMTSC)

Superfund and Technology Liaison, Region 4

U.S. Environmental Protection Agency

61 Forsyth Street SW, Atlanta, GA 30303

Prepared by:

Anita Singh, Ph.D.

and Robert Maichle

Lockheed Martin/SERAS

IS&GS-CIVIL

2890 Woodbridge Ave

Edison NJ 08837

EPA/600/R-07/041

October 2015

www.epa.gov

U.S. Environmental Protection Agency

Office of Research and Development

Washington, DC 20460

1

NOTICE

The United States Environmental Protection Agency (U.S. EPA) through its Office of Research and

Development (ORD) funded and managed the research described in ProUCL Technical Guide and

methods incorporated in the ProUCL software. It has been peer reviewed by the U.S. EPA and approved

for publication. Mention of trade names or commercial products does not constitute endorsement or

recommendation by the U.S. EPA for use.

All versions of the ProUCL software including the current version ProUCL 5.1 have been

developed by Lockheed Martin, IS&GS - CIVIL under the Science, Engineering, Response and

Analytical contract with the U.S. EPA and is made available through the U.S. EPA Technical

Support Center (TSC) in Atlanta, Georgia (GA).

Use of any portion of ProUCL that does not comply with the ProUCL Technical Guide is not

recommended.

ProUCL contains embedded licensed software. Any modification of the ProUCL source code

may violate the embedded licensed software agreements and is expressly forbidden.

ProUCL software provided by the U.S. EPA was scanned with McAfee VirusScan version 4.5.1

SP1 and is certified free of viruses.

With respect to ProUCL distributed software and documentation, neither the U.S. EPA nor any of their

employees, assumes any legal liability or responsibility for the accuracy, completeness, or usefulness of

any information, apparatus, product, or process disclosed. Furthermore, software and documentation are

supplied “as-is” without guarantee or warranty, expressed or implied, including without limitation, any

warranty of merchantability or fitness for a specific purpose.

ProUCL software is a statistical software package providing statistical methods described in various U.S.

EPA guidance documents. ProUCL does not describe U.S. EPA policies and should not be considered to

represent U.S. EPA policies.

2

Minimum Hardware Requirements

ProUCL 5.1 will function but will run slowly and page a lot.

Intel Pentium 1.0 gigahertz (GHz)

45 MB of hard drive space

512 MB of memory (RAM)

CD-ROM drive or internet connection

Windows XP (with SP3), Vista (with SP1 or later), and Windows 7.

ProUCL 5.1 will function but some titles and some Graphical User Interfaces (GUIs) will need to be

scrolled. Definition without color will be marginal.

800 by 600 Pixels

Basic Color is preferred

Preferred Hardware Requirements

1 GHz or faster Processor.

1 gigabyte (GB) of memory (RAM)

1024 by 768 Pixels or greater color display

Software Requirements

ProUCL 5.1 has been developed in the Microsoft .NET Framework 4.0 using the C# programming

language. To properly run ProUCL 5.1 software, the computer using the program must have the .NET

Framework 4.0 pre-installed. The downloadable .NET Framework 4.0 files can be obtained from one of

the following websites:

http://msdn.microsoft.com/netframework/downloads/updates/default.aspx

http://www.microsoft.com/en-us/download/details.aspx?id=17851

Quicker site for 32 Bit Operating systems

http://www.microsoft.com/en-us/download/details.aspx?id=24872

Use this site if you have a 64 Bit operating system

3

Installation Instructions when Downloading ProUCL 5.1 from the EPA Web Site

Download the file SETUP.EXE from the EPA Web site and save to a temporary location.

Run the SETUP.EXE program. This will create a ProUCL directory and two folders:

1) The USER GUIDE (this document), and 2) DATA (example data sets).

To run the program, use Windows Explorer to locate the ProUCL application file, and

Double click on it, or use the RUN command from the start menu to locate the

ProUCL.exe file, and run ProUCL.exe.

To uninstall the program, use Windows Explorer to locate and delete the ProUCL folder.

Caution: If you have previous versions of the ProUCL, which were installed on your computer, you

should remove or rename the directory in which earlier ProUCL versions are currently located.

Installation Instructions when Copying ProUCL 5.1 from a CD

Create a folder named ProUCL 5.1 on a local hard drive of the machine you wish to

install ProUCL 5.1.

Extract the zipped file ProUCL.zip to the folder you have just created.

Run ProUCL.exe.

Note: If you have extension turned off, the program will show with the name ProUCL in your directory

and have an Icon with the label ProUCL.

Creating a Shortcut for ProUCL 5.1 on Desktop

To create a shortcut of the ProUCL program on your desktop, go to your ProUCL

directory and right click on the executable program and send it to desktop. A ProUCL

icon will be displayed on your desktop. This shortcut will point to the ProUCL directory

consisting of all files required to execute ProUCL 5.1.

Caution: Because all files in your ProUCL directory are needed to execute the ProUCL software, one

needs to generate a shortcut using the process described above. Simply dragging the ProUCL executable

file from Window Explorer onto your desktop will not work successfully (an error message will appear)

as all files needed to run the software are not available on your desktop. Your shortcut should point to the

directory path with all required ProUCL files.

4

ProUCL 5.1

Software ProUCL version 5.1 (ProUCL 5.1), its earlier versions: ProUCL version 3.00.01, 4.00.02,

4.00.04, 4.00.05, 4.1.00, 4.1.01, and ProUCL 5.0.00, associated Facts Sheet, User Guides and Technical

Guides (e.g., EPA 2010a, 2010b, 2013a, 2013b) can be downloaded from the following EPA website:

http://www.epa.gov/osp/hstl/tsc/software.htm

http://www.epa.gov/osp/hstl/tsc/softwaredocs.htm

Material for ProUCL webinars offered in March 2011, and relevant literature used in the development of

various ProUCL versions can also be downloaded from the above EPA website.

Contact Information for all Versions of ProUCL

Since 1999, the ProUCL software has been developed under the direction of the Technical Support Center

(TSC). As of November 2007, the direction of the TSC is transferred from Brian Schumacher to Felicia

Barnett. Therefore, any comments or questions concerning all versions of ProUCL software should be

addressed to:

Felicia Barnett, Director

ORD Site Characterization and Monitoring Technical Support Center (SCMTSC)

Superfund and Technology Liaison, Region 4

U.S. Environmental Protection Agency

61 Forsyth Street SW, Atlanta, GA 30303-8960

barnett.felicia@epa.gov

(404)562-8659

Fax: (404) 562-8439

5

Getting Started

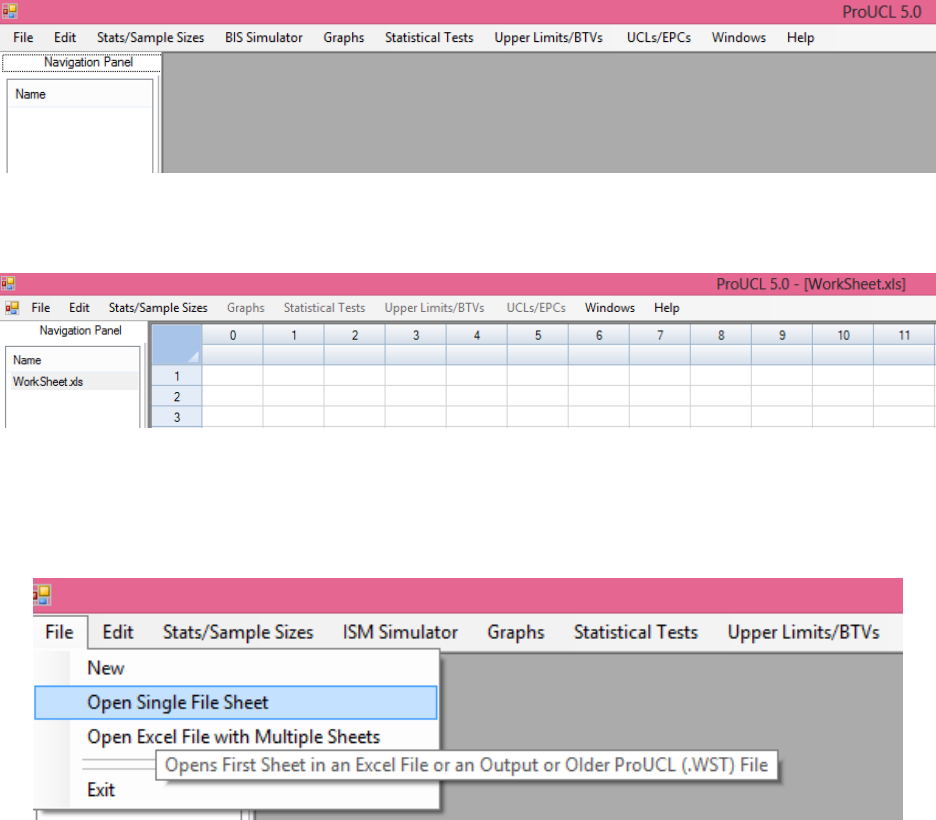

The look and feel of ProUCL 5.1 is similar to that of ProUCL 5.0; and they share the same names for

modules and drop-down menus. The functionality and the use of the methods and options available in

ProUCL 5.1 have been illustrated using Screen shots of output screens generated by ProUCL 5.1.

ProUCL 5.1 uses a pull-down menu structure, similar to a typical Windows program. For modules where

no changes have been made in ProUCL since 2010 (e.g., Sample Sizes), screen shots as used in ProUCL

5.0 documents have been used in ProUCL 5.1 documents. Some of the screen shots generated using

ProUCL 5.1 might have ProUCL 5.0 in their titles as those screen shots have not been re-generated and

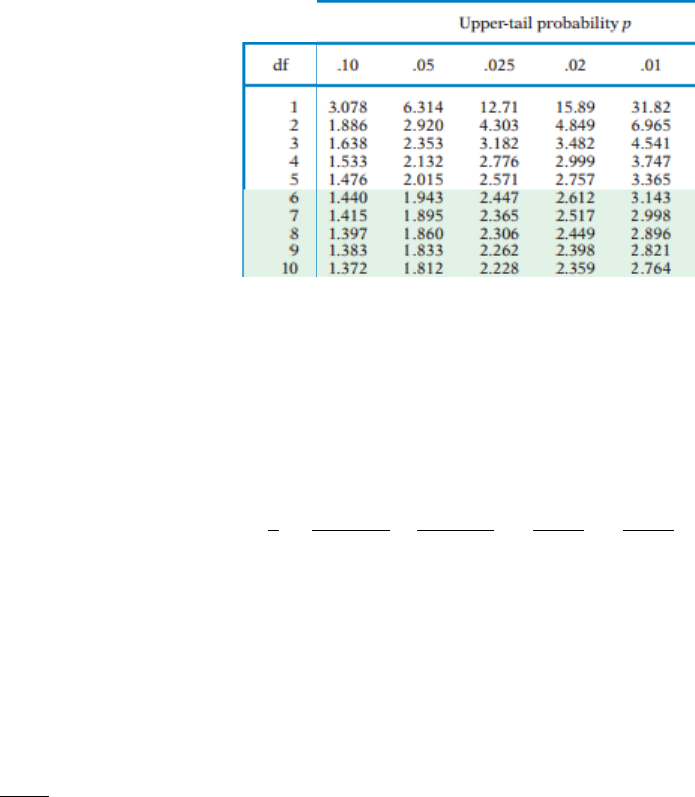

replaced. The screen shown below appears when the program is executed.

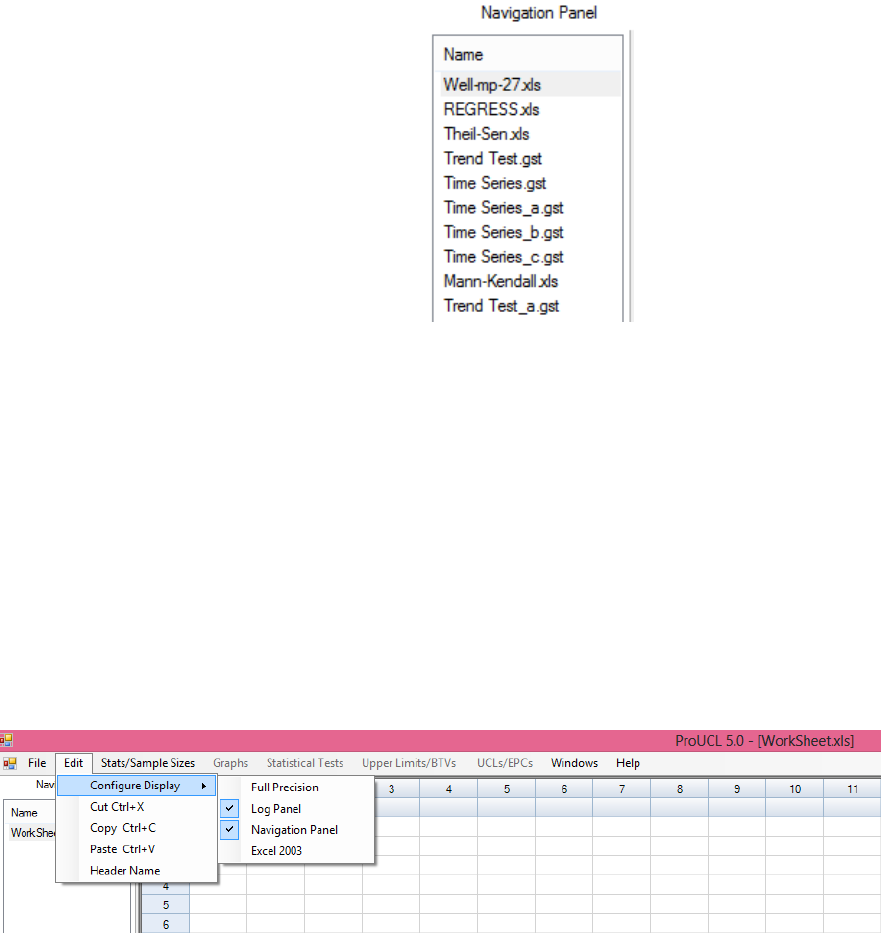

The above screen consists of three main window panels:

The MAIN WINDOW displays data sheets and outputs results from the procedure used.

The NAVIGATION PANEL displays the name of data sets and all generated outputs.

o The navigation panel can hold up to 40 output files. In order to see more files (data

files or generated output files), one can click on Widow Option.

o In the NAVIGATION PANEL, ProUCL assigns self explanatory names to output

files generated using the various modules of ProUCL. If the same module (e.g.,

Time Series Plot) is used many times, ProUCL identifies them by using letters a, b,

c,...and so on as shown below.

← Main

Window

Navigation

Panel

↓

← Log Panel

6

o The user may want to assign names of his choice to these output files when saving

them using the "Save" or "Save As" Options.

The LOG PANEL displays transactions in green, warning messages in orange, and errors in

red. For an example, when one attempts to run a procedure meant for left-censored data sets

on a full-uncensored data set, ProUCL 5.1 will output a warning in orange in this panel.

o Should both panels be unnecessary, you can choose Configure ► Panel ON/OFF.

The use of this option gives extra space to see and print out the statistics of interest. For example, one

may want to turn off these panels when multiple variables (e.g., multiple quantile-quantile [Q-Q] plots)

are analyzed and goodness-of-fit (GOF) statistics and other statistics may need to be captured for all of

the selected variables. The following screen was generated using ProUCL 5.0. An identical screen will be

generated using ProUCL 5.1 with title name as ProUCL 5.1 - [WorkSheet.xls].

7

EXECUTIVE SUMMARY

The main objective of the ProUCL software funded by the United States Environmental Protection

Agency (EPA) is to compute rigorous statistics to help decision makers and project teams in making good

decisions at a polluted site which are cost-effective, and protective of human health and the environment.

The ProUCL software is based upon the philosophy that rigorous statistical methods can be used to

compute reliable estimates of population parameters and decision making statistics including: the upper

confidence limit (UCL) of the mean, the upper tolerance limit (UTL), and the upper prediction limit

(UPL) to help decision makers and project teams in making correct decisions. A few commonly used text

book type methods (e.g., Central Limit Theorem [CLT], Student's t-UCL) alone cannot address all

scenarios and situations occurring in environmental studies. Since many environmental decisions are

based upon a 95 percent (%) UCL (UCL95) of the population mean, it is important to compute UCLs of

practical merit. The use and applicability of a statistical method (e.g., student's t-UCL, CLT-UCL,

adjusted gamma-UCL, Chebyshev UCL, bootstrap-t UCL) depend upon data size, data skewness, and

data distribution. ProUCL computes decision statistics using several parametric and nonparametric

methods covering a wide-range of data variability, distribution, skewness, and sample size. It is

anticipated that the availability of the statistical methods in the ProUCL software covering a wide range

of environmental data sets will help the decision makers in making more informative and correct

decisions at Superfund and Resource Conservation and Recovery Act (RCRA) sites.

It is noted that for moderately skewed to highly skewed environmental data sets, UCLs based on the CLT

and the Student's t-statistic fail to provide the desired coverage (e.g., 0.95) to the population mean even

when the sample sizes are as large as 100 or more. The sample size requirements associated with the CLT

increases with skewness. It would be incorrect to state that a CLT or Student's statistic based UCLs are

adequate to estimate Exposure Point Concentrations (EPC) terms based upon skewed data sets. These

facts have been described in the published documents (Singh, Singh, and Engelhardt [1997, 1999]; Singh,

Singh, and Iaci 2002; Singh and Singh 2003; and Singh et al. 2006) summarizing simulation experiments

conducted on positively skewed data sets to evaluate the performances of the various UCL computation

methods. The use of a parametric lognormal distribution on a lognormally distributed data set yields

unstable impractically large UCLs values, especially when the standard deviation (sd) of the log-

transformed data becomes greater than 1.0 and the data set is of small size less than (<) 30-50. Many

environmental data sets can be modeled by a gamma as well as a lognormal distribution. The use of a

gamma distribution on gamma distributed data sets tends to yield UCL values of practical merit.

Therefore, the use of gamma distribution based decision statistics such as UCLs, UPLs, and UTLs should

not be dismissed by stating that it is easier to use a lognormal model to compute these upper limits.

The suggestions made in ProUCL are based upon the extensive experience of the developers in

environmental statistical methods, published environmental literature, and procedures described in many

EPA guidance documents. These suggestions are made to help the users in selecting the most appropriate

UCL to estimate the EPC term which is routinely used in exposure assessment and risk management

studies of the USEPA. The suggestions are based upon the findings of many simulation studies described

in Singh, Singh, and Engelhardt (1997, 1999); Singh, Singh, and Iaci (2002); Singh and Singh (2003); and

Singh et al. (2006). It should be pointed out that a typical simulation study does not (cannot) cover all

real world data sets of various sizes and skewness from all distributions. When deemed necessary, the

user may want to consult a statistician to select an appropriate upper limit to estimate the EPC term and

other environmental parameters of interest. For an analyte (data set) with skewness (sd of logged data)

near the end points of the skewness intervals presented in decision tables of Chapter 2 (e.g., Tables 2-9

8

through 2-11), the user may select the most appropriate UCL based upon the site conceptual site model

(CSM), expert site knowledge, toxicity of the analyte, and exposure risks associated with that analyte.

The inclusion of outliers in the computation of the various decision statistics tends to yield inflated values

of those decision statistics, which can lead to poor decisions. Often statistics that are computed for a data

set which includes a few outliers tend to be inflated and represent those outliers rather than representing

the main dominant population of interest (e.g., reference area). Identification of outliers, observations

coming from population(s) other than the main dominant population is suggested, before computing the

decision statistics needed to address project objectives. The project team may want to perform the

statistical evaluations twice, once with outliers and once without outliers. This exercise will help the

project team in computing reliable and defensible decision statistics which are needed to make cleanup

and remediation decisions at polluted sites.

The initial development during 1999-2000 and all subsequent upgrades and enhancements of the ProUCL

software have been funded by U.S. EPA through its Office of Research and Development (ORD).

Initially ProUCL was developed as a research tool for U.S. EPA scientists and researchers of the

Technical Support Center (TSC) and ORD- National Exposure Research Laboratory (NERL), Las Vegas.

Background evaluations, groundwater (GW) monitoring, exposure and risk management and cleanup

decisions in support of the Comprehensive Environmental Recovery, Compensation, and Liability Act

(CERCLA) and RCRA site projects of the U.S. EPA are often derived based upon test statistics such as

the Shapiro-Wilk (S-W) test, t-test, Wilcoxon-Mann-Whitney (WMW) test, analysis of variance

(ANOVA), and Mann-Kendall (MK) test and decision statistics including UCLs of the mean, UPLs, and

UTLs. To address the statistical needs of the environmental projects of the USEPA, over the years

ProUCL software has been upgraded and enhanced to include many graphical tools and statistical

methods described in many EPA guidance documents including: EPA 1989a, 1989b, 1991, 1992a, 1992b,

2000 Multi-Agency Radiation Survey and Site Investigation Manual (MARSSIM), 2002a, 2002b, 2002c,

2006a, 2006b, and 2009. Several statistically rigorous methods (e.g., for data sets with nondetects [NDs])

not easily available in the existing guidance documents and in the environmental literature are also

available in ProUCL 5.0/ProUCL 5.1.

ProUCL 5.1/ProUCL 5.0 has graphical, estimation, and hypotheses testing methods for uncensored-full

data sets and for left-censored data sets including ND observations with multiple detection limits (DLs) or

reporting limits (RLs). In addition to computing general statistics, ProUCL 5.1 has goodness-of-fit (GOF)

tests for normal, lognormal and gamma distributions, and parametric and nonparametric methods

including bootstrap methods for skewed data sets for computation of decision making statistics such as

UCLs of the mean (EPA 2002a), percentiles, UPLs for a pre-specified number of future observations

(e.g., k with k=1, 2, 3,...), UPLs for mean of future k (≥1) observations, and UTLs (e.g., EPA 1992b,

2002b, and 2009). Many positively skewed environmental data sets can be modeled by a lognormal as

well as a gamma model. It is well-known that for moderately skewed to highly skewed data sets, the use

of a lognormal distribution tends to yield inflated and unrealistically large values of the decision statistics

especially when the sample size is small (e.g., <20-30). For gamma distributed skewed uncensored and

left-censored data sets, ProUCL software computes decision statistics including UCLs, percentiles, UPLs

for future k (≥1) observations, UTLs, and upper simultaneous limits (USLs).

For data sets with NDs, ProUCL has several estimation methods including the Kaplan-Meier (KM)

method, regression on order statistics (ROS) methods and substitution methods (e.g., replacing NDs by

DL, DL/2). ProUCL 5.1 can be used to compute upper limits which adjust for data skewness;

specifically, for skewed data sets, ProUCL computes upper limits using KM estimates in gamma

(lognormal) UCL and UTL equations provided the detected observations in the left-censored data set

follow a gamma (lognormal) distribution. Some poor performing commonly used and cited methods such

9

as the DL/2 substitution method and H-statistic based UCL computation method have been retained in

ProUCL 5.1 for historical reasons, and research and comparison purposes.

The Sample Sizes module of ProUCL can be used to develop data quality objectives (DQOs) based

sampling designs and to perform power evaluations needed to address statistical issues associated with a

variety of site projects. ProUCL provides user-friendly options to enter the desired values for the decision

parameters such as Type I and Type II error rates, and other DQOs used to determine the minimum

sample sizes needed to address project objectives. The Sample Sizes module can compute DQO-based

minimum sample sizes needed: to estimate the population mean; to perform single and two-sample

hypotheses testing approaches; and in acceptance sampling to accept or reject a batch of discrete items

such as a lot of drums containing hazardous waste. Both parametric (e.g., t-test) and nonparametric (e.g.,

Sign test, WMW test, test for proportions) sample size determination methods are available in ProUCL.

ProUCL has exploratory graphical methods for both uncensored data sets and for left-censored data sets

consisting of ND observations. Graphical methods in ProUCL include histograms, multiple quantile-

quantile (Q-Q) plots, and side-by-side box plots. The use of graphical displays provides additional insight

about the information contained in a data set that may not otherwise be revealed by the use of estimates

(e.g., 95% upper limits) and test statistics (e.g., two-sample t-test, WMW test). In addition to providing

information about the data distributions (e.g., normal or gamma), Q-Q plots are also useful in identifying

outliers and the presence of mixture populations (e.g., data from several populations) potentially present

in a data set. Side-by-side box plots and multiple Q-Q plots are useful to visually compare two or more

data sets, such as: site-versus-background concentrations, surface-versus-subsurface concentrations, and

constituent concentrations of several GW monitoring wells (MWs). ProUCL also has a couple of classical

outlier test procedures, such as the Dixon test and the Rosner test which can be used on uncensored data

sets as well as on left-censored data sets containing ND observations.

ProUCL has parametric and nonparametric single-sample and two-sample hypotheses testing approaches

for uncensored as well as left-censored data sets. Single-sample hypotheses tests: Student’s t-test, Sign

test, Wilcoxon Signed Rank test, and the Proportion test are used to compare site mean/median

concentrations (or some other threshold such as an upper percentile) with some average cleanup standard,

C

s

(or a not-to-exceed compliance limit, A

0

) to verify the attainment of cleanup levels (EPA, 1989a; 2000,

2006a) at remediated site areas of concern. Single-sample tests such as the Sign test and Proportion test,

and upper limits including UTLs and UPLs are also used to perform intra-well comparisons. Several two-

sample hypotheses tests as described in EPA guidance documents (e.g., 2002b, 2006b, 2009) are also

available in the ProUCL software. The two-sample hypotheses testing approaches in ProUCL include:

Student’s t-test, WMW test, Gehan test and Tarone-Ware (T-W) test. The two-sample tests are used to

compare concentrations of two populations such as site versus background, surface versus subsurface

soils, and upgradient versus downgradient wells.

The Oneway ANOVA module in ProUCL has both classical and nonparametric Kruskal-Wallis (K-W)

tests. Oneway ANOVA is used to compare means (or medians) of multiple groups such as comparing

mean concentrations of areas of concern and to perform inter-well comparisons. In GW monitoring

applications, the ordinary least squares (OLS) regression model, trend tests, and time series plots are used

to identify upwards or downwards trends potentially present in constituent concentrations identified in

wells over a certain period of time. The Trend Analysis module performs the M-K trend test and Theil-

Sen (T-S) trend test on data sets with missing values; and generates trend graphs displaying a parametric

OLS regression line and nonparametric T-S trend line. The Time Series Plots option can be used to

compare multiple time-series data sets.

The use of the incremental sampling methodology (ISM) has been recommended by the Interstate

Technology and Regulatory Council (ITRC 2012) for collecting ISM soil samples to compute mean

10

concentrations of the decision units (DUs) and sampling units (SUs) requiring characterization and

remediation activities. At many polluted sites, a large amount of discrete onsite and/or offsite background

data are already available which cannot be directly compared with newly collected ISM data. In order to

provide a tool to compare the existing discrete background data with actual field onsite or background

ISM data, a Monte Carlo Background Incremental Sample Simulator (BISS) module was incorporated in

ProUCL 5.0 and retained in ProUCL 5.1 (currently blocked from general use) which may be used on a

large existing discrete background data set. The BISS module simulates incremental sampling

methodology based equivalent background incremental samples. The availability of a large discrete

background data set collected from areas with geological conditions comparable to the DU(s) of interest

is a pre-requisite for successful application of this module. For now, the BISS module has been blocked

for use as this module is awaiting adequate guidance and instructions for its intended use on discrete

background data sets.

ProUCL software is a user-friendly freeware package providing statistical and graphical tools needed to

address statistical issues described in many U.S. EPA guidance documents. ProUCL 5.0/ProUCL 5.1 can

process many constituents (variables) simultaneously to: perform statistical tests (e.g., ANOVA and trend

test statistics) and compute decision statistics including UCLs of mean, UPLs, and UTLs – a capability

not available in several commercial software packages such as Minitab 16 and NADA for R (Helsel

2013). ProUCL also has the capability of processing data by group variables. Special care has been taken

to make the software as user friendly as possible. For example, on the various GOF graphical displays,

output sheets for GOF tests, OLS and ANOVA, in addition to critical values and/or p-values, the

conclusion derived based upon those values is also displayed. ProUCL is easy to use and does not require

any programming skills as needed when using commercial software packages and programs written in R

script.

Methods incorporated in ProUCL have been tested and verified extensively by the developers,

researchers, scientists, and users. The results obtained by ProUCL are in agreement with the results

obtained by using other software packages including Minitab, SAS

®

, and programs written in R Script.

ProUCL 5.0/ProUCL 5.1 computes decision statistics (e.g., UPL, UTL) based upon the KM method in a

straight forward manner without flipping the data and re-flipping the computed statistics for left-censored

data sets; these operations are not easy for a typical user to understand and perform. This can become

unnecessarily tedious when computing decision statistics for multiple variables/analytes. Moreover,

unlike survival analysis, it is important to compute an accurate estimate of the sd which is needed to

compute decision making statistics including UPLs and UTLs. For left-censored data sets, ProUCL

computes a KM estimate of sd directly. These issues are elaborated by examples discussed in this User

Guide and in the accompanying ProUCL 5.1 Technical Guide.

ProUCL does not represent a policy software of the government. ProUCL has been developed on limited

resources, and it does provide many statistical methods often used in environmental applications. The

objective of the freely available user-friendly software, ProUCL is to provide statistical and graphical

tools to address environmental issues of environmental site projects for all users including those users

who cannot or may not want to program and/or do not have access to commercial software packages.

Some users have criticized ProUCL and pointed out some deficiencies such as: it does not have

geostatistical methods; it does not perform simulations; and does not offer programming interface for

automation. Due to the limited scope of ProUCL, advanced methods have not been incorporated in

ProUCL. For methods not available in ProUCL, users can use other statistical software packages such as

SAS

®

(available to EPA personnel) and R script to address their computational needs. Contributions from

scientists and researchers to enhance methods incorporated in ProUCL will be very much appreciated.

Just like other government documents (e.g., U.S. EPA 2009), various versions of ProUCL (2007, 2009,

2011, 2013, 2016) also make some rule-of thumb type suggestions (e.g., minimum sample size

11

requirement of 8-10) based upon professional judgment and experience of the developers. It is

recommended that the users/project team/agencies make their own determinations about the rule-of-

thumb type suggestions made in ProUCL before applying a statistical method.

12

ACRONYMS and ABBREVIATIONS

ACL

Alternative compliance or concentration limit

A-D, AD

Anderson-Darling test

AL

Action limit

AOC

Area(s) of concern

ANOVA

Analysis of variance

A

0

Not to exceed compliance limit or specified action level

BC

Box-Cox transformation

BCA

Bias-corrected accelerated bootstrap method

BD

Binomial distribution

BISS

Background Incremental Sample Simulator

BTV

Background threshold value

CC, cc

Confidence coefficient

CERCLA

Comprehensive Environmental Recovery, Compensation, and Liability Act

CL

Compliance limit

CLT

Central Limit Theorem

COPC

Contaminant/constituent of potential concern

C

s

Cleanup standards

CSM

Conceptual site model

Df

Degrees of freedom

DL

Detection limit

DL/2 (t)

UCL based upon DL/2 method using Student’s t-distribution cutoff value

DL/2 Estimates

Estimates based upon data set with NDs replaced by 1/2 of the respective detection

limits

DOE

Department of Energy

DQOs

Data quality objectives

DU

Decision unit

EA

Exposure area

EDF

Empirical distribution function

EM

Expectation maximization

EPA

United States Environmental Protection Agency

13

EPC

Exposure point concentration

GA

Georgia

GB

Gigabyte

GHz

Gigahertz

GROS

Gamma ROS

GOF, G.O.F.

Goodness-of-fit

GUI

Graphical user interface

GW

Groundwater

H

A

Alternative hypothesis

H

0

Null hypothesis

H-UCL

UCL based upon Land’s H-statistic

i.i.d.

Independently and identically distributed

ISM

Incremental sampling methodology

ITRC

Interstate Technology & Regulatory Council

k, K

Positive integer representing future or next k observations

K

Shape parameter of a gamma distribution

K,k

Number of nondetects in a data set

k hat

MLE of the shape parameter of a gamma distribution

k star

Biased corrected MLE of the shape parameter of a gamma distribution

KM (%)

UCL based upon Kaplan-Meier estimates using the percentile bootstrap method

KM (Chebyshev)

UCL based upon Kaplan-Meier estimates using the Chebyshev inequality

KM (t)

UCL based upon Kaplan-Meier estimates using the Student’s t-distribution critical

value

KM (z)

UCL based upon Kaplan-Meier estimates using critical value of a standard normal

distribution

K-M, KM

Kaplan-Meier

K-S, KS

Kolmogorov-Smirnov

K-W

Kruskal Wallis

LCL

Lower confidence limit

LN, ln

Lognormal distribution

LCL

Lower confidence limit of mean

LPL

Lower prediction limit

LROS

LogROS; robust ROS

14

LTL

Lower tolerance limit

LSL

Lower simultaneous limit

M,m

Applied to incremental sampling: number in increments in an ISM sample

MARSSIM

Multi-Agency Radiation Survey and Site Investigation Manual

MCL

Maximum concentration limit, maximum compliance limit

MDD

Minimum detectable difference

MDL

Method detection limit

MK, M-K

Mann-Kendall

ML

Maximum likelihood

MLE

Maximum likelihood estimate

n

Number of observations/measurements in a sample

N

Number of observations/measurements in a population

MVUE

Minimum variance unbiased estimate

MW

Monitoring well

NARPM

National Association of Remedial Project Managers

ND, nd, Nd

Nondetect

NERL

National Exposure Research Laboratory

NRC

Nuclear Regulatory Commission

OKG

Orthogonalized Kettenring Gnanadesikan

OLS

Ordinary least squares

ORD

Office of Research and Development

OSRTI

Office of Superfund Remediation and Technology Innovation

OU

Operating unit

PCA

Principal component analysis

PDF, pdf

Probability density function

.pdf

Files in Portable Document Format

PRG

Preliminary remediation goals

PROP

Proposed influence function

p-values

Probability-values

QA

Quality assurance

QC

Quality

Q-Q

Quantile-quantile

15

R,r

Applied to incremental sampling: number of replicates of ISM samples

RAGS

Risk Assessment Guidance for Superfund

RCRA

Resource Conservation and Recovery Act

RL

Reporting limit

RMLE

Restricted maximum likelihood estimate

ROS

Regression on order statistics

RPM

Remedial Project Manager

RSD

Relative standard deviation

RV

Random variable

S

Substantial difference

SCMTSC

Site Characterization and Monitoring Technical Support Center

SD, Sd, sd

Standard deviation

SE

Standard error

SND

Standard Normal Distribution

SNV

Standard Normal Variate

SSL

Soil screening levels

SQL

Sample quantitation limit

SU

Sampling unit

S-W, SW

Shapiro-Wilk

T-S

Theil-Sen

TSC

Technical Support Center

TW, T-W

Tarone-Ware

UCL

Upper confidence limit

UCL95

95% upper confidence limit

UPL

Upper prediction limit

U.S. EPA, EPA

United States Environmental Protection Agency

UTL

Upper tolerance limit

UTL95-95

95% upper tolerance limit with 95% coverage

USGS

U.S. Geological Survey

USL

Upper simultaneous limit

vs.

Versus

WMW

Wilcoxon-Mann-Whitney

16

WRS

Wilcoxon Rank Sum

WSR

Wilcoxon Signed Rank

X

p

p

th

percentile of a distribution

<

Less than

>

Greater than

≥

Greater than or equal to

≤

Less than or equal to

Δ

Greek letter denoting the width of the gray region associated with hypothesis testing

Σ

Greek letter representing the summation of several mathematical quantities, numbers

%

Percent

α

Type I error rate

β

Type II error rate

Ө

Scale parameter of the gamma distribution

Σ

Standard deviation of the log-transformed data

^

carat sign over a parameter, indicates that it represents a statistic/estimate computed

using the sampled data

17

GLOSSARY

Anderson-Darling (A-D) test: The Anderson-Darling test assesses whether known data come from a

specified distribution. In ProUCL the A-D test is used to test the null hypothesis that a sample data set, x

1

,

..., x

n

came from a gamma distributed population.

Background Measurements: Measurements that are not site-related or impacted by site activities.

Background sources can be naturally occurring or anthropogenic (man-made).

Bias: The systematic or persistent distortion of a measured value from its true value (this can occur

during sampling design, the sampling process, or laboratory analysis).

Bootstrap Method: The bootstrap method is a computer-based method for assigning measures of

accuracy to sample estimates. This technique allows estimation of the sample distribution of almost any

statistic using only very simple methods. Bootstrap methods are generally superior to ANOVA for small

data sets or where sample distributions are non-normal.

Central Limit Theorem (CLT): The central limit theorem states that given a distribution with a mean, μ,

and variance, σ

2

, the sampling distribution of the mean approaches a normal distribution with a mean (μ)

and a variance σ

2

/N as N, the sample size, increases.

Censored Data Sets: Data sets that contain one or more observations which are nondetects.

Coefficient of Variation (CV): A dimensionless quantity used to measure the spread of data relative to

the size of the numbers. For a normal distribution, the coefficient of variation is given by s/xBar. It is also

known as the relative standard deviation (RSD).

Confidence Coefficient (CC): The confidence coefficient (a number in the closed interval [0, 1])

associated with a confidence interval for a population parameter is the probability that the random interval

constructed from a random sample (data set) contains the true value of the parameter. The confidence

coefficient is related to the significance level of an associated hypothesis test by the equality: level of

significance = 1 – confidence coefficient.

Confidence Interval: Based upon the sampled data set, a confidence interval for a parameter is a random

interval within which the unknown population parameter, such as the mean, or a future observation, x

0

,

falls.

Confidence Limit: The lower or an upper boundary of a confidence interval. For example, the 95% upper

confidence limit (UCL) is given by the upper bound of the associated confidence interval.

Coverage, Coverage Probability: The coverage probability (e.g., = 0.95) of an upper confidence limit

(UCL) of the population mean represents the confidence coefficient associated with the UCL.

Critical Value: The critical value for a hypothesis test is a threshold to which the value of the test

statistic is compared to determine whether or not the null hypothesis is rejected. The critical value for any

hypothesis test depends on the sample size, the significance level, α at which the test is carried out, and

whether the test is one-sided or two-sided.

18

Data Quality Objectives (DQOs): Qualitative and quantitative statements derived from the DQO

process that clarify study technical and quality objectives, define the appropriate type of data, and specify

tolerable levels of potential decision errors that will be used as the basis for establishing the quality and

quantity of data needed to support decisions.

Detection Limit: A measure of the capability of an analytical method to distinguish samples that do not

contain a specific analyte from samples that contain low concentrations of the analyte. It is the lowest

concentration or amount of the target analyte that can be determined to be different from zero by a single

measurement at a stated level of probability. Detection limits are analyte and matrix-specific and may be

laboratory-dependent.

Empirical Distribution Function (EDF): In statistics, an empirical distribution function is a cumulative

probability distribution function that concentrates probability 1/n at each of the n numbers in a sample.

Estimate: A numerical value computed using a random data set (sample), and is used to guess (estimate)

the population parameter of interest (e.g., mean). For example, a sample mean represents an estimate of

the unknown population mean.

Expectation Maximization (EM): The EM algorithm is used to approximate a probability density

function (PDF). EM is typically used to compute maximum likelihood estimates given incomplete

samples.

Exposure Point Concentration (EPC): The constituent concentration within an exposure unit to which

the receptors are exposed. Estimates of the EPC represent the concentration term used in exposure

assessment.

Extreme Values: Values that are well-separated from the majority of the data set coming from the

far/extreme tails of the data distribution.

Goodness-of-Fit (GOF): In general, the level of agreement between an observed set of values and a set

wholly or partly derived from a model of the data.

Gray Region: A range of values of the population parameter of interest (such as mean constituent

concentration) within which the consequences of making a decision error are relatively minor. The gray

region is bounded on one side by the action level. The width of the gray region is denoted by the Greek

letter delta, Δ, in this guidance.

H-Statistic: Land's statistic used to compute UCL of mean of a lognormal population

H-UCL: UCL based on Land’s H-Statistic.

Hypothesis: Hypothesis is a statement about the population parameter(s) that may be supported or

rejected by examining the data set collected for this purpose. There are two hypotheses: a null hypothesis,

(H

0

), representing a testable presumption (often set up to be rejected based upon the sampled data), and an

alternative hypothesis (H

A

), representing the logical opposite of the null hypothesis.

Jackknife Method: A statistical procedure in which, in its simplest form, estimates are formed of a

parameter based on a set of N observations by deleting each observation in turn to obtain, in addition to

the usual estimate based on N observations, N estimates each based on N-1 observations.

19

Kolmogorov-Smirnov (KS) test: The Kolmogorov-Smirnov test is used to decide if a data set comes

from a population with a specific distribution. The Kolmogorov-Smirnov test is based on the empirical

distribution function (EDF). ProUCL uses the KS test to test the null hypothesis if a data set follows a

gamma distribution.

Left-censored Data Set: An observation is left-censored when it is below a certain value (detection limit)

but it is unknown by how much; left-censored observations are also called nondetect (ND) observations.

A data set consisting of left-censored observations is called a left-censored data set. In environmental

applications trace concentrations of chemicals may indeed be present in an environmental sample (e.g.,

groundwater, soil, sediment) but cannot be detected and are reported as less than the detection limit of the

analytical instrument or laboratory method used.

Level of Significance (α): The error probability (also known as false positive error rate) tolerated of

falsely rejecting the null hypothesis and accepting the alternative hypothesis.

Lilliefors test: A goodness-of-fit test that tests for normality of large data sets when population mean and

variance are unknown.

Maximum Likelihood Estimates (MLE): MLE is a popular statistical method used to make inferences

about parameters of the underlying probability distribution of a given data set.

Mean: The sum of all the values of a set of measurements divided by the number of values in the set; a

measure of central tendency.

Median: The middle value for an ordered set of n values. It is represented by the central value when n is

odd or by the average of the two most central values when n is even. The median is the 50th percentile.

Minimum Detectable Difference (MDD): The MDD is the smallest difference in means that the

statistical test can resolve. The MDD depends on sample-to-sample variability, the number of samples,

and the power of the statistical test.

Minimum Variance Unbiased Estimates (MVUE): A minimum variance unbiased estimator (MVUE or

MVU estimator) is an unbiased estimator of parameters, whose variance is minimized for all values of the

parameters. If an estimator is unbiased, then its mean squared error is equal to its variance.

Nondetect (ND) values: Censored data values. Typically, in environmental applications, concentrations

or measurements that are less than the analytical/instrument method detection limit or reporting limit.

Nonparametric: A term describing statistical methods that do not assume a particular population

probability distribution, and are therefore valid for data from any population with any probability

distribution, which can remain unknown.

Optimum: An interval is optimum if it possesses optimal properties as defined in the statistical literature.

This may mean that it is the shortest interval providing the specified coverage (e.g., 0.95) to the

population mean. For example, for normally distributed data sets, the UCL of the population mean based

upon Student’s t distribution is optimum.

20

Outlier: Measurements (usually larger or smaller than the majority of the data values in a sample) that

are not representative of the population from which they were drawn. The presence of outliers distorts

most statistics if used in any calculations.

Probability - Values (p-value): In statistical hypothesis testing, the p-value associated with an observed

value, t

observed

of some random variable T used as a test statistic is the probability that, given that the null

hypothesis is true, T will assume a value as or more unfavorable to the null hypothesis as the observed

value t

observed

. The null hypothesis is rejected for all levels of significance, α greater than or equal to the p-

value.

Parameter: A parameter is an unknown or known constant associated with the distribution used to model

the population.

Parametric: A term describing statistical methods that assume a probability distribution such as a

normal, lognormal, or a gamma distribution.

Population: The total collection of N objects, media, or people to be studied and from which a sample is

to be drawn. It is the totality of items or units under consideration.

Prediction Interval: The interval (based upon historical data, background data) within which a newly

and independently obtained (often labeled as a future observation) site observation (e.g., onsite,

compliance well) of the predicted variable (e.g., lead) falls with a given probability (or confidence

coefficient).

Probability of Type II (2) Error (β): The probability, referred to as β (beta), that the null hypothesis will

not be rejected when in fact it is false (false negative).

Probability of Type I (1) Error = Level of Significance (α): The probability, referred to as α (alpha),

that the null hypothesis will be rejected when in fact it is true (false positive).

p

th

Percentile or p

th

Quantile: The specific value, X

p

of a distribution that partitions a data set of

measurements in such a way that the p percent (a number between 0 and 100) of the measurements fall at

or below this value, and (100-p) percent of the measurements exceed this value, X

p

.

Quality Assurance (QA): An integrated system of management activities involving planning,

implementation, assessment, reporting, and quality improvement to ensure that a process, item, or service

is of the type and quality needed and expected by the client.

Quality Assurance Project Plan: A formal document describing, in comprehensive detail, the necessary

QA, quality control (QC), and other technical activities that must be implemented to ensure that the

results of the work performed will satisfy the stated performance criteria.

Quantile Plot: A graph that displays the entire distribution of a data set, ranging from the lowest to the

highest value. The vertical axis represents the measured concentrations, and the horizontal axis is used to

plot the percentiles/quantiles of the distribution.

Range: The numerical difference between the minimum and maximum of a set of values.

21

Regression on Order Statistics (ROS): A regression line is fit to the normal scores of the order statistics

for the uncensored observations and is used to fill in values imputed from the straight line for the

observations below the detection limit.

Resampling: The repeated process of obtaining representative samples and/or measurements of a

population of interest.

Reliable UCL: see Stable UCL.

Robustness: Robustness is used to compare statistical tests. A robust test is the one with good

performance (that is not unduly affected by outliers and underlying assumptions) for a wide variety of

data distributions.

Resistant Estimate: A test/estimate which is not affected by outliers is called a resistant test/estimate

Sample: Represents a random sample (data set) obtained from the population of interest (e.g., a site area,

a reference area, or a monitoring well). The sample is supposed to be a representative sample of the

population under study. The sample is used to draw inferences about the population parameter(s).

Shapiro-Wilk (SW) test: Shapiro-Wilk test is a goodness-of-fit test that tests the null hypothesis that a

sample data set, x

1

, ..., x

n

came from a normally distributed population.

Skewness: A measure of asymmetry of the distribution of the parameter under study (e.g., lead

concentrations). It can also be measured in terms of the standard deviation of log-transformed data. The

greater the standard deviation, the greater is the skewness.

Stable UCL: The UCL of a population mean is a stable UCL if it represents a number of practical merit

(e.g., a realistic value which can actually occur at a site), which also has some physical meaning. That is,

a stable UCL represents a realistic number (e.g., constituent concentration) that can occur in practice.

Also, a stable UCL provides the specified (at least approximately, as much as possible, as close as

possible to the specified value) coverage (e.g., ~0.95) to the population mean.

Standard Deviation (sd, sd, SD): A measure of variation (or spread) from an average value of the

sample data values.

Standard Error (SE): A measure of an estimate's variability (or precision). The greater the standard

error in relation to the size of the estimate, the less reliable is the estimate. Standard errors are needed to

construct confidence intervals for the parameters of interests such as the population mean and population

percentiles.

Substitution Method: The substitution method is a method for handling NDs in a data set, where the ND

is replaced by a defined value such as 0, DL/2 or DL prior to statistical calculations or graphical analyses.

This method has been included in ProUCL 5.1 for historical comparative purposes but is not

recommended for use. The bias introduced by applying the substitution method cannot be quantified

with any certainty. ProUCL 5.1 will provide a warning when this option is chosen.

Uncensored Data Set: A data set without any censored (nondetects) observations.

22

Unreliable UCL, Unstable UCL, Unrealistic UCL: The UCL of a population mean is unstable,

unrealistic, or unreliable if it is orders of magnitude higher than the other UCLs of a population mean. It

represents an impractically large value that cannot be achieved in practice. For example, the use of Land’s

H-statistic often results in an impractically large inflated UCL value. Some other UCLs, such as the

bootstrap-t UCL and Hall’s UCL, can be inflated by outliers resulting in an impractically large and

unstable value. All such impractically large UCL values are called unstable, unrealistic, unreliable, or

inflated UCLs.

Upper Confidence Limit (UCL): The upper boundary (or limit) of a confidence interval of a parameter

of interest such as the population mean.

Upper Prediction Limit (UPL): The upper boundary of a prediction interval for an independently

obtained observation (or an independent future observation).

Upper Tolerance Limit (UTL): A confidence limit on a percentile of the population rather than a

confidence limit on the mean. For example, a 95% one-sided UTL for 95% coverage represents the value

below which 95% of the population values are expected to fall with 95 % confidence. In other words, a

95% UTL with coverage coefficient 95% represents a 95% UCL for the 95

th

percentile.

Upper Simultaneous Limit (USL): The upper boundary of the largest value.

xBar: arithmetic average of computed using the sampled data values

23

ACKNOWLEDGEMENTS

We wish to express our gratitude and thanks to our friends and colleagues who have contributed during

the development of past versions of ProUCL and to all of the many people who reviewed, tested, and

gave helpful suggestions throughout the development of the ProUCL software package. We wish to

especially acknowledge EPA scientists including Deana Crumbling, Nancy Rios-Jafolla, Tim Frederick,

Dr. Maliha Nash, Kira Lynch, and Marc Stiffleman; James Durant of ATSDR, Dr. Steve Roberts of

University of Florida, Dr. Elise A. Striz of the National Regulatory Commission (NRC), and Drs. Phillip

Goodrum and John Samuelian of Integral Consulting Inc. for testing and reviewing ProUCL 5.0 and its

associated guidance documents, and for providing helpful comments and suggestions. We also wish to

thank Dr. D. Beal of Leidos for reviewing ProUCL 5.0.

Special thanks go to Ms. Donna Getty and Mr. Richard Leuser of Lockheed Martin for providing a

thorough technical and editorial review of ProUCL 5.1 and also ProUCL 5.0 User Guide and Technical

Guide. A special note of thanks is due to Ms. Felicia Barnett of EPA ORD Site Characterization and

Monitoring Technical Support Center (SCMTSC), without whose assistance the development of the

ProUCL 5.1 software and associated guidance documents would not have been possible.

Finally, we wish to dedicate the ProUCL 5.1 (and ProUCL 5.0) software package to our friend and

colleague, John M. Nocerino who had contributed significantly in the development of ProUCL and Scout

software packages.

24

Table of Contents

NOTICE ..................................................................................................................................... 1

Minimum Hardware Requirements ......................................................................................... 2

Software Requirements ........................................................................................................... 2

Installation Instructions when Downloading ProUCL 5.1 from the EPA Web Site .............. 3

ProUCL 5.1 ............................................................................................................................... 4

Contact Information for all Versions of ProUCL .................................................................... 4

EXECUTIVE SUMMARY ........................................................................................................... 7

GLOSSARY ..............................................................................................................................17

ACKNOWLEDGEMENTS .........................................................................................................23

Table of Contents ....................................................................................................................24

INTRODUCTION OVERVIEW OF ProUCL VERSION 5.1 SOFTWARE ..................................29

The Need for ProUCL Software .................................................................................................... 34

ProUCL 5.1 Capabilities ................................................................................................................ 37

ProUCL 5.1 Technical Guide ........................................................................................................ 44

Chapter 1 Guidance on the Use of Statistical Methods in ProUCL Software .....................45

1.1 Background Data Sets ....................................................................................................... 45

1.2 Site Data Sets .................................................................................................................... 46

1.3 Discrete Samples or Composite Samples? ........................................................................ 47

1.4 Upper Limits and Their Use ............................................................................................. 48

1.5 Point-by-Point Comparison of Site Observations with BTVs, Compliance Limits and

Other Threshold Values ................................................................................................................. 50

1.6 Hypothesis Testing Approaches and Their Use ................................................................ 51

1.6.1 Single Sample Hypotheses (Pre-established BTVs and Not-to-Exceed Values are

Known) ................................................................................................................ 51

1.6.2 Two-Sample Hypotheses (BTVs and Not-to-Exceed Values are Unknown) ...... 52

1.7 Minimum Sample Size Requirements and Power Evaluations ......................................... 53

1.7.1 Why a data set of minimum size, n = 8-10? ........................................................ 54

1.7.2 Sample Sizes for Bootstrap Methods ................................................................... 55

1.8 Statistical Analyses by a Group ID ................................................................................... 56

1.9 Statistical Analyses for Many Constituents/Variables ...................................................... 56

1.10 Use of Maximum Detected Value as Estimates of Upper Limits ..................................... 56

1.10.1 Use of Maximum Detected Value to Estimate BTVs and Not-to-Exceed Values

............................................................................................................................. 57

1.10.2 Use of Maximum Detected Value to Estimate EPC Terms ................................. 57

1.11 Samples with Nondetect Observations ............................................................................. 58

1.11.1 Avoid the Use of the DL/2 Substitution Method to Compute UCL95 ................ 58

1.11.2 ProUCL Does Not Distinguish between Detection Limits, Reporting limits, or

Method Detection Limits ..................................................................................... 59

1.12 Samples with Low Frequency of Detection ...................................................................... 59

1.13.1 Identification of COPCs ....................................................................................... 60

1.13.2 Identification of Non-Compliance Monitoring Wells .......................................... 60

25

1.13.3 Verification of the Attainment of Cleanup Standards, C

s

.................................... 60

1.13.4 Using BTVs (Upper Limits) to Identify Hot Spots .............................................. 61

1.14 Some General Issues, Suggestions and Recommendations made by ProUCL ................. 61

1.14.1 Handling of Field Duplicates ............................................................................... 61

1.14.2 ProUCL Recommendation about ROS Method and Substitution (DL/2) Method

............................................................................................................................. 61

1.14.3 Unhandled Exceptions and Crashes in ProUCL .................................................. 61

1.15 The Unofficial User Guide to ProUCL4 (Helsel and Gilroy 2012) .................................. 62

1.16 Box and Whisker Plots ..................................................................................................... 69

Chapter 2 Entering and Manipulating Data ..........................................................................74

2.1 Creating a New Data Set ................................................................................................... 74

2.2 Opening an Existing Data Set ........................................................................................... 74

2.3 Input File Format .............................................................................................................. 75

2.4 Number Precision ............................................................................................................. 76

2.6 Saving Files....................................................................................................................... 78

2.7 Editing 79

2.8 Handling Nondetect Observations and Generating Files with Nondetects ....................... 79

2.9 Caution 80

2.10 Summary Statistics for Data Sets with Nondetect Observations ...................................... 81

2.11 Warning Messages and Recommendations for Data Sets with an Insufficient Amount of

Data 82

2.12 Handling Missing Values .................................................................................................. 84

2.13 User Graphic Display Modification .................................................................................. 86

2.13.1 Graphics Tool Bar ................................................................................................ 86

2.13.2 Drop-Down Menu Graphics Tools ...................................................................... 86

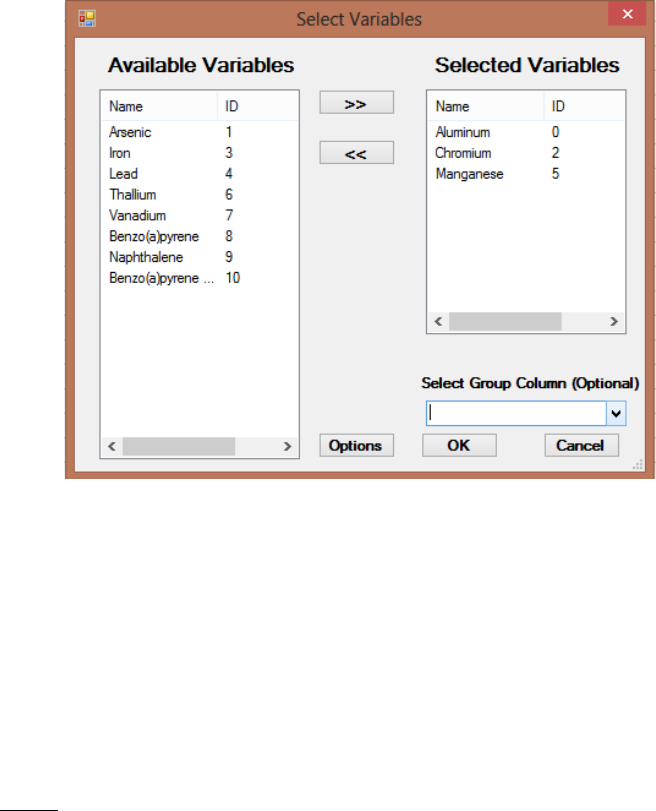

Chapter 3 Select Variables Screen .......................................................................................88

3.1 Select Variables Screen..................................................................................................... 88

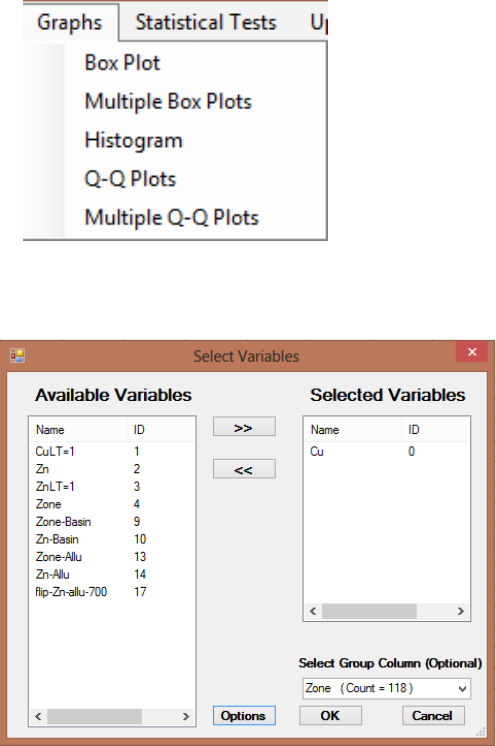

3.1.1 Graphs by Groups ................................................................................................ 90

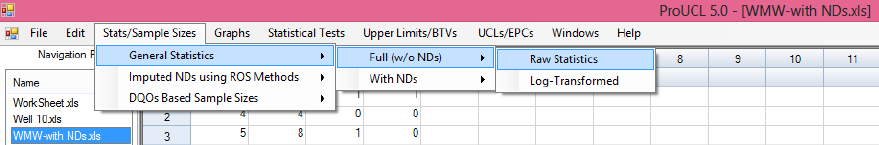

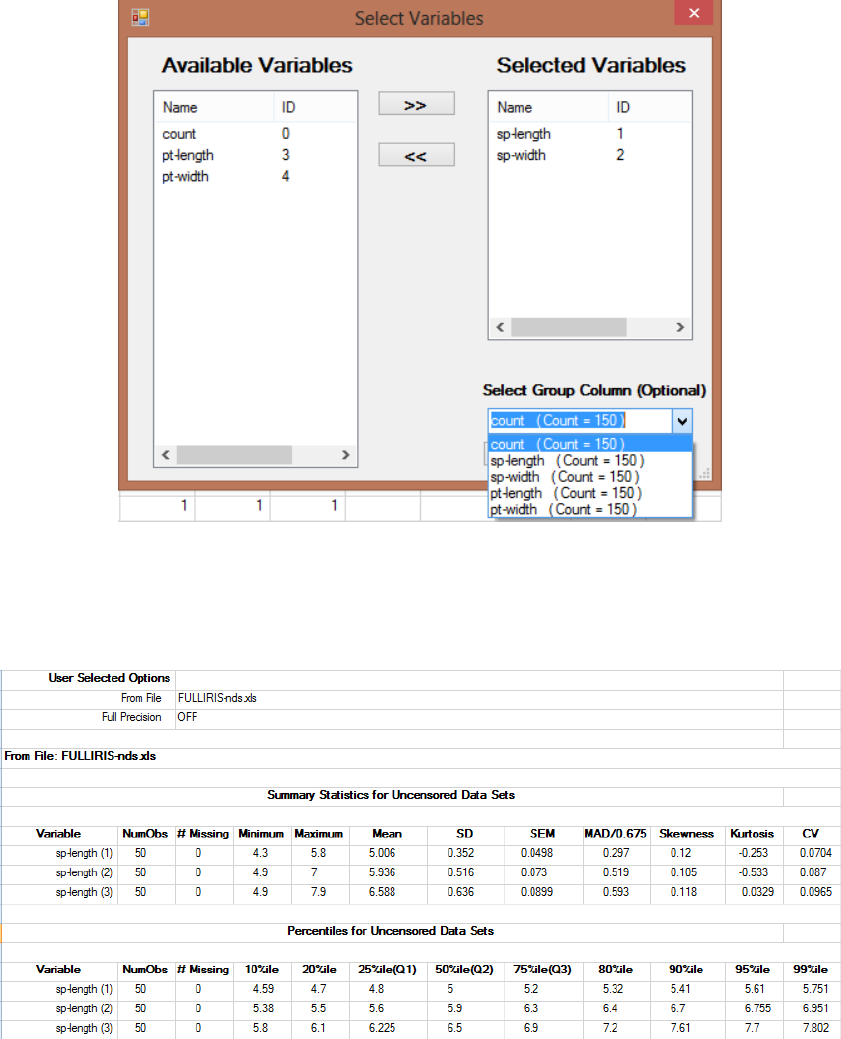

Chapter 4 General Statistics .................................................................................................93

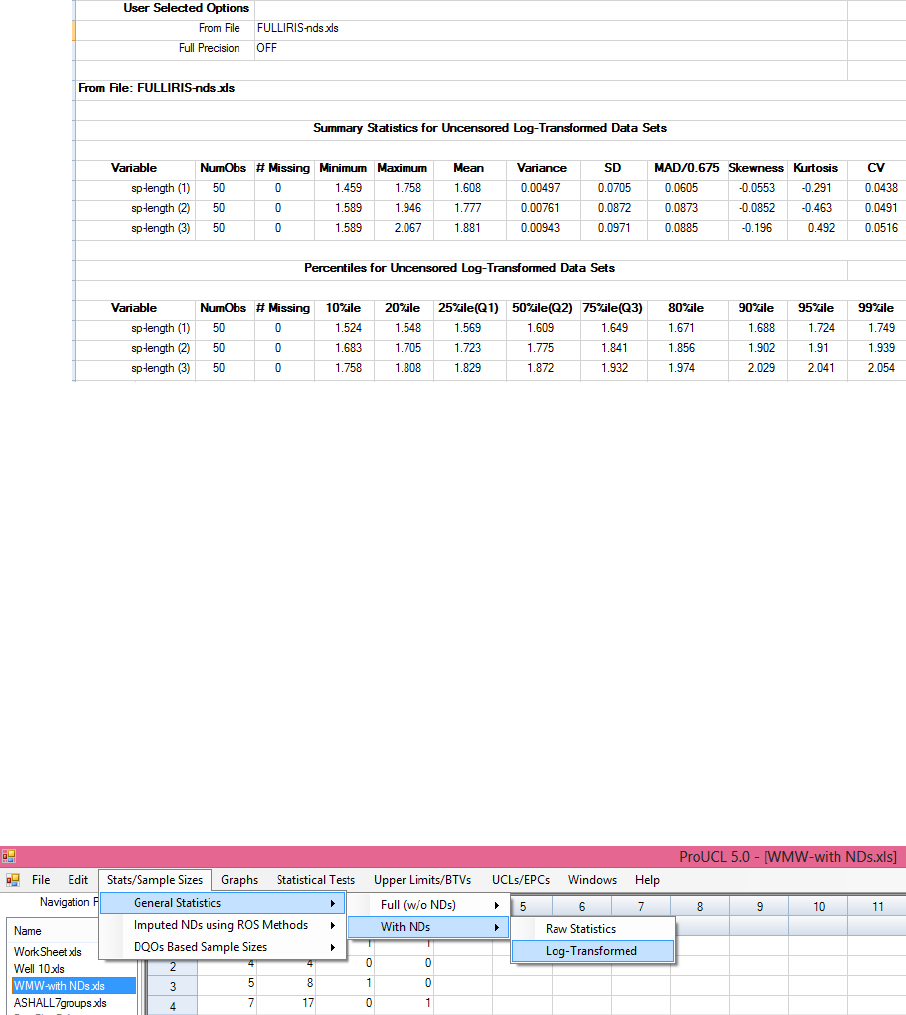

4.1 General Statistics for Full Data Sets without NDs ............................................................ 93

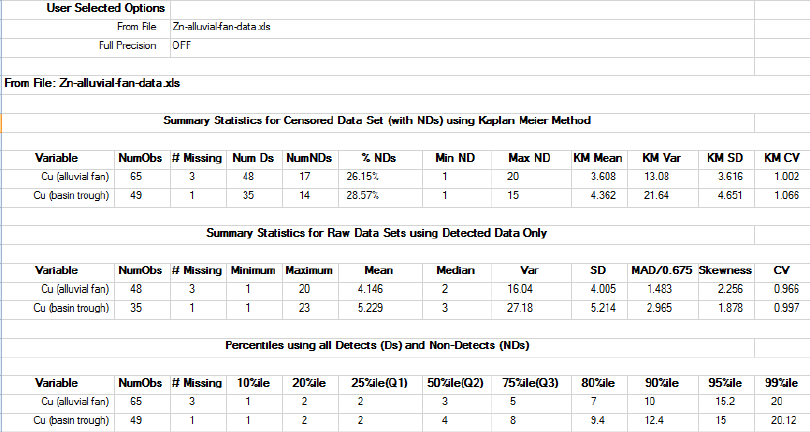

4.2 General Statistics with NDs .............................................................................................. 95

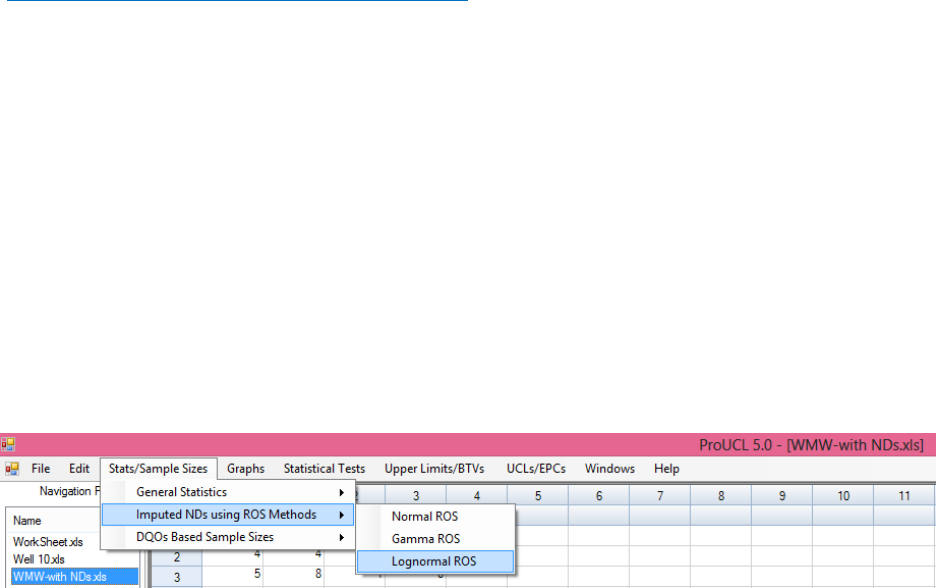

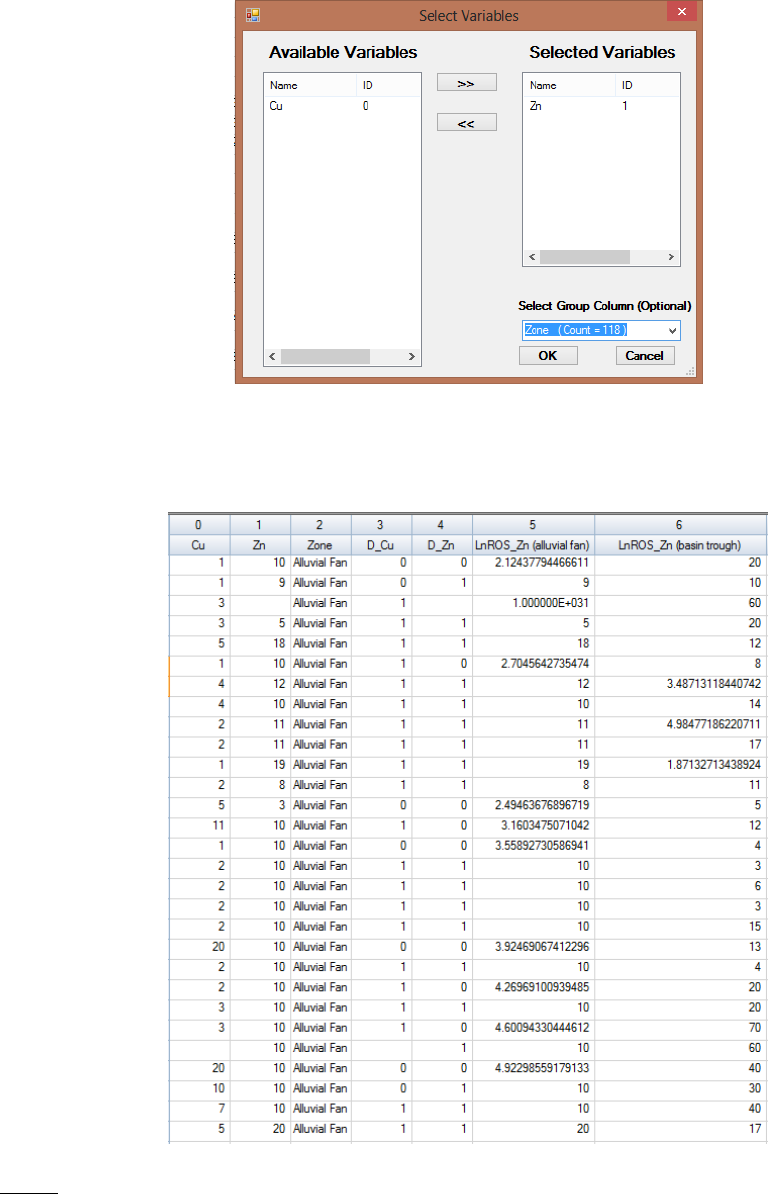

Chapter 5 Imputing Nondetects Using ROS Methods .........................................................97

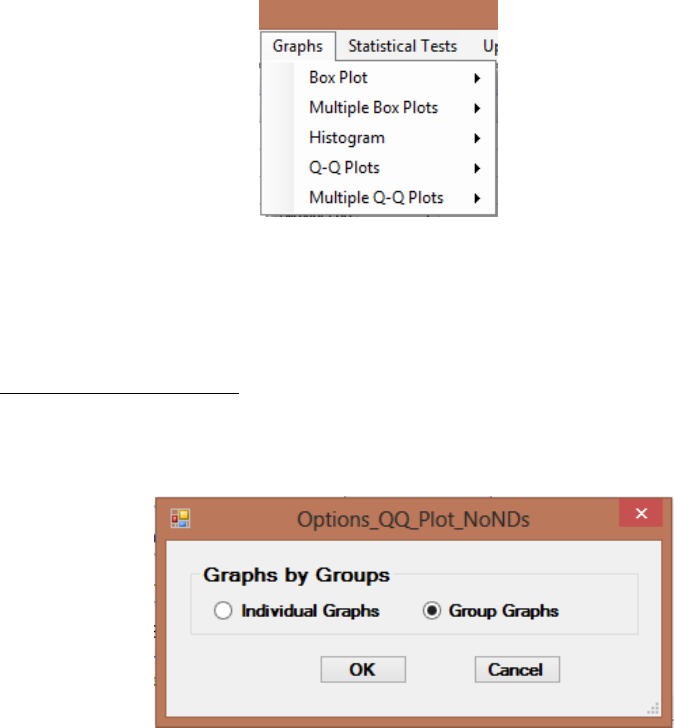

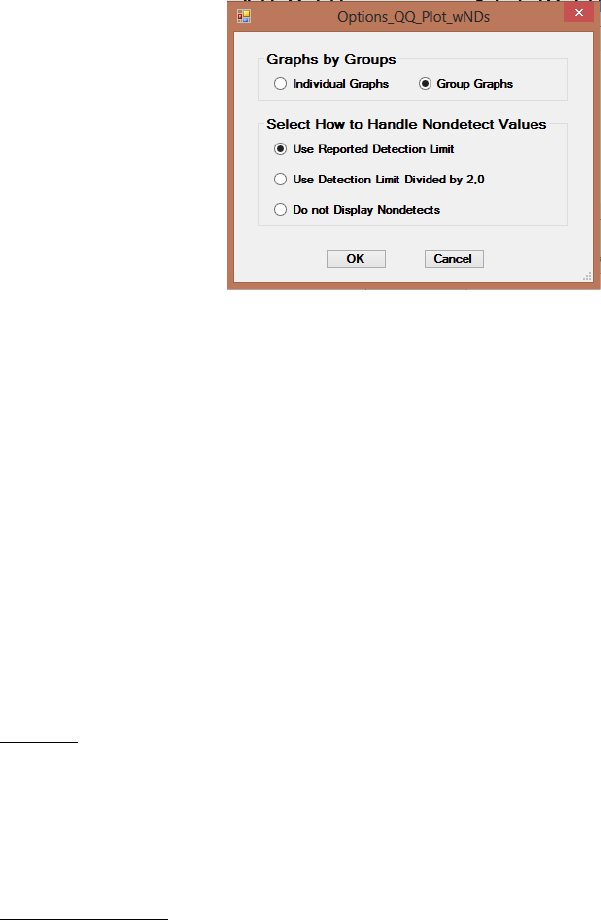

Chapter 6 Graphical Methods (Graph) ..................................................................................99

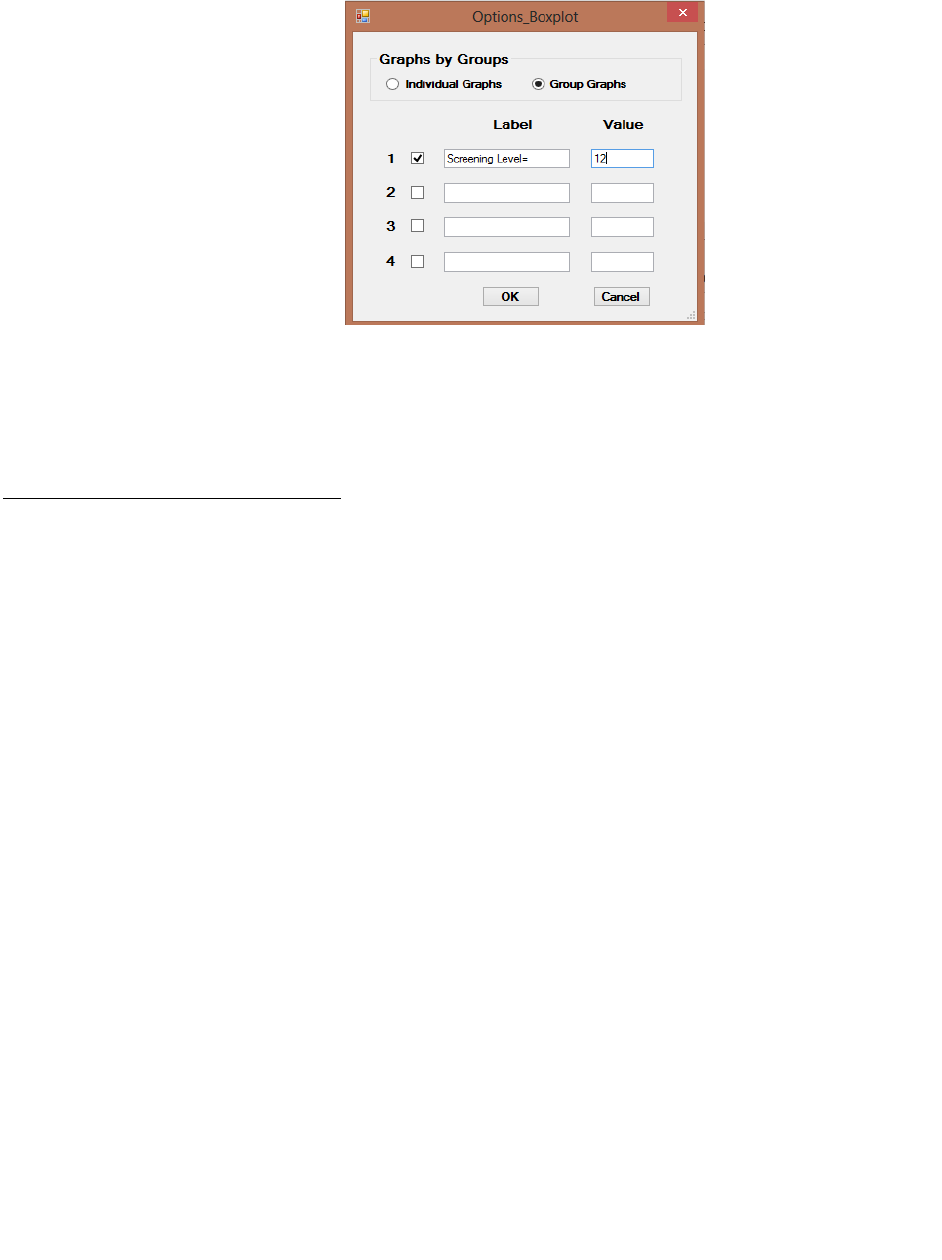

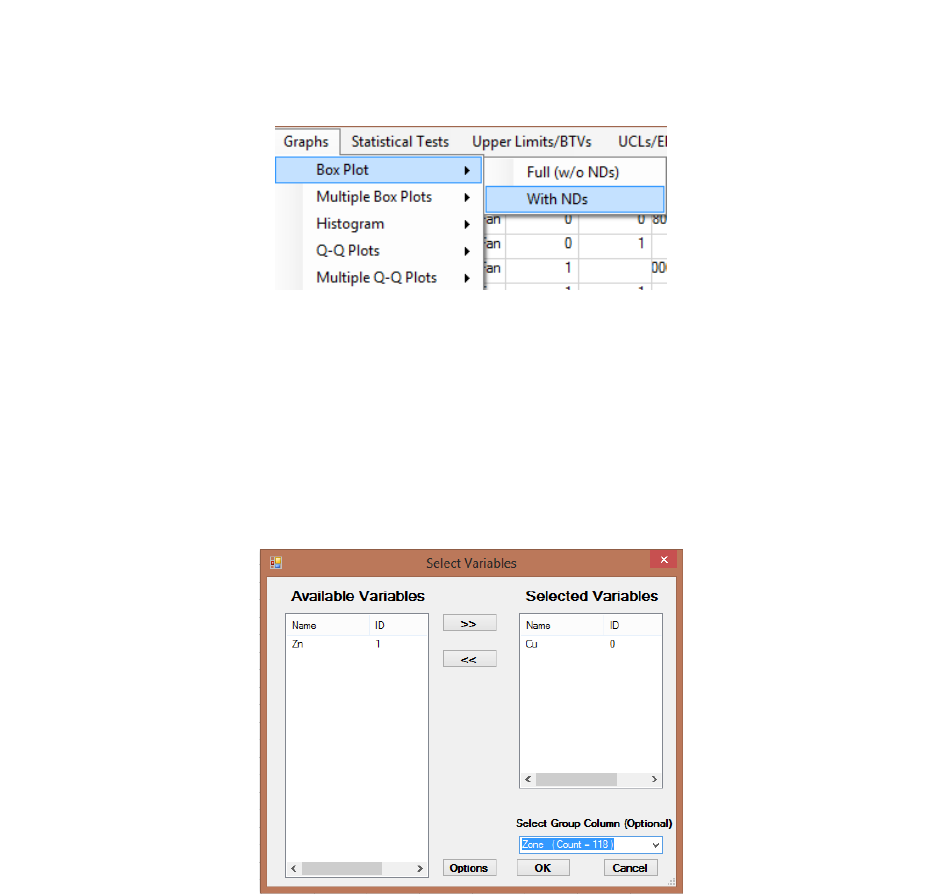

6.1 Box Plot .......................................................................................................................... 101

6.2 Histogram........................................................................................................................ 106

6.3 Q-Q Plots ........................................................................................................................ 107

6.4 Multiple Q-Q Plots .......................................................................................................... 109

6.4.1 Multiple Q-Q plots (Uncensored data sets) ....................................................... 109

6.5 Multiple Box Plots .......................................................................................................... 110

6.5.1 Multiple Box plots (Uncensored data sets) ........................................................ 110

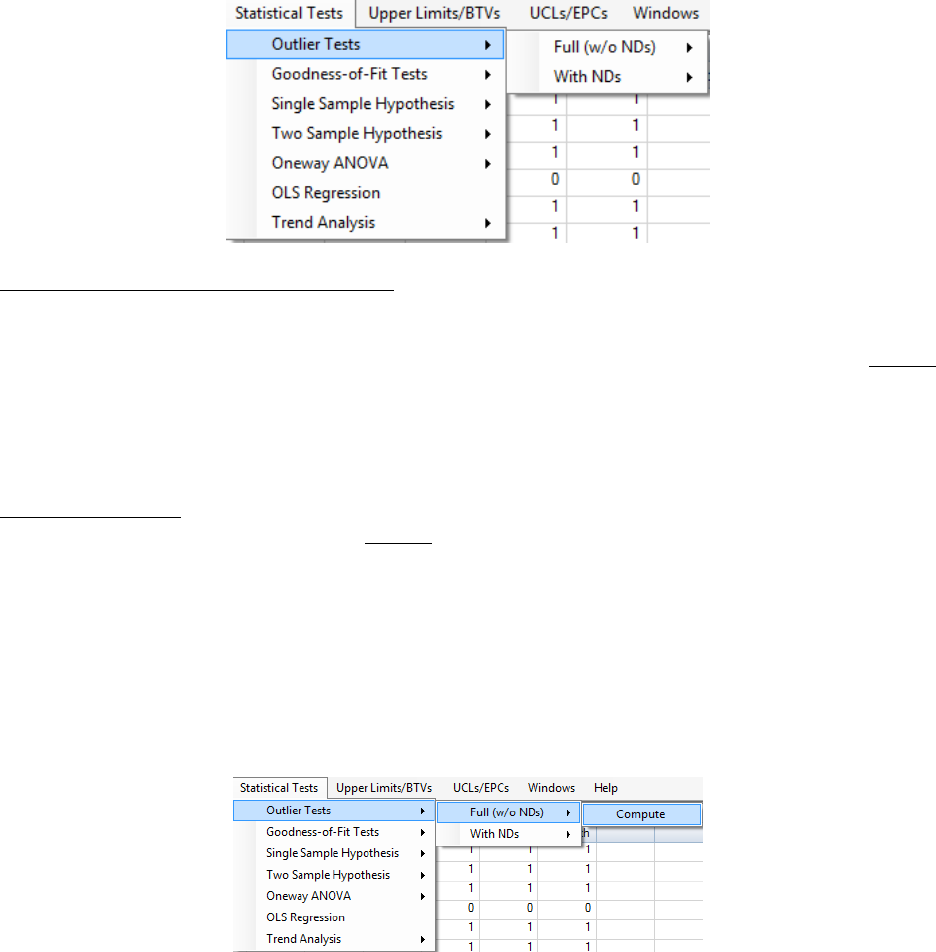

Chapter 7 Classical Outlier Tests ....................................................................................... 112

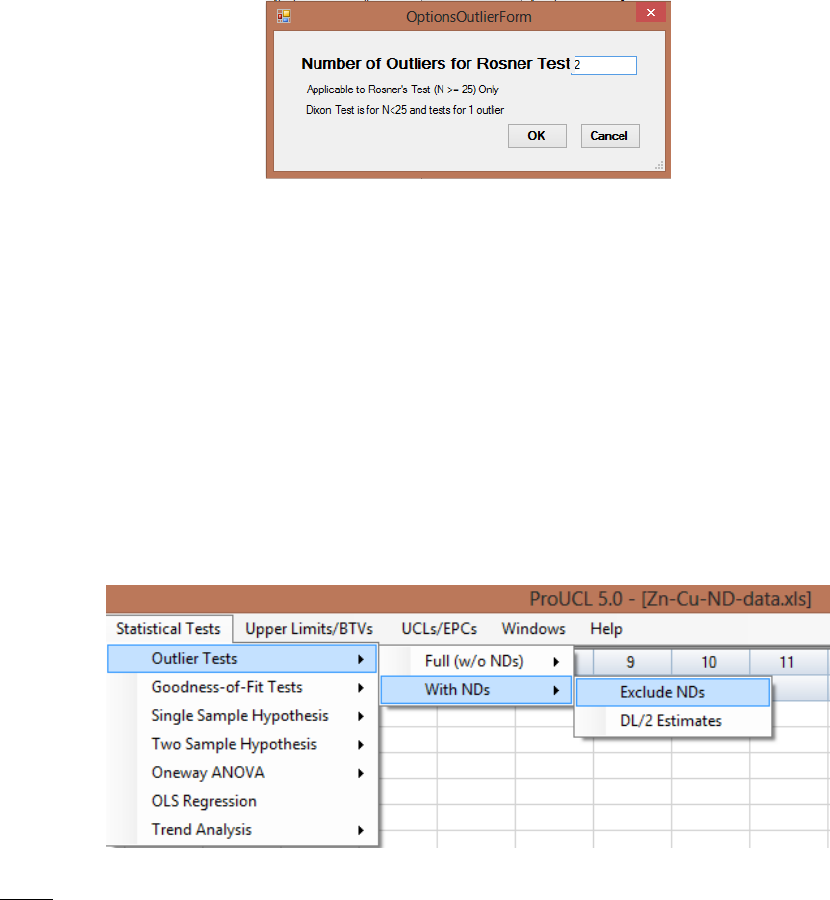

7.1 Outlier Test for Full Data Set.......................................................................................... 113

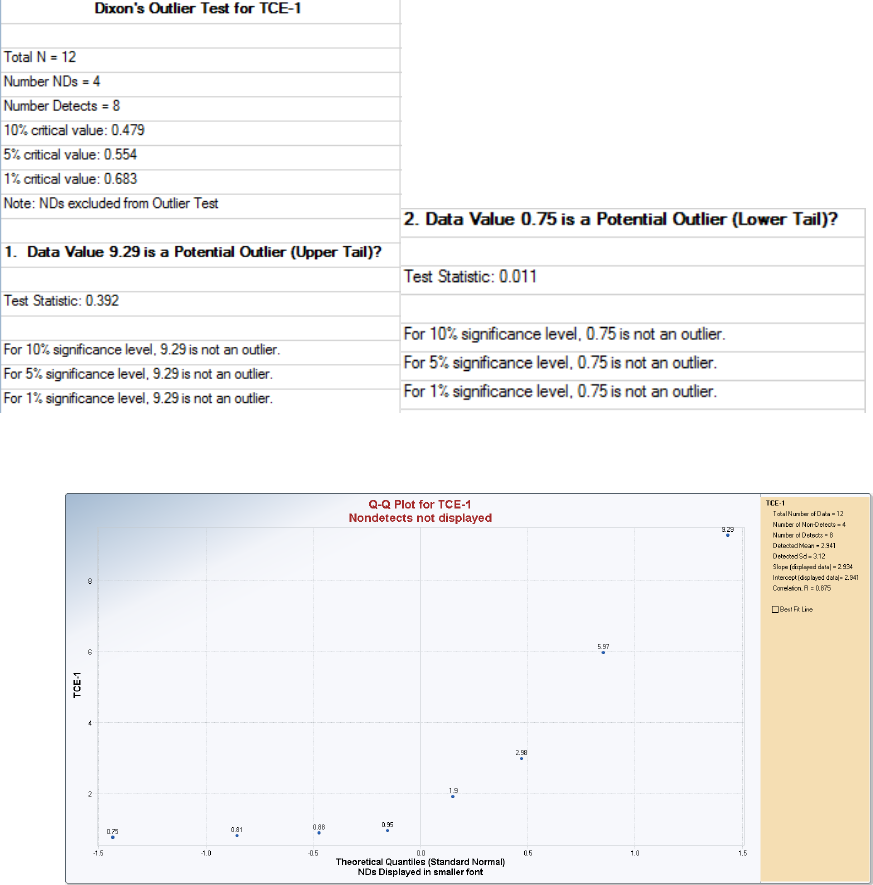

7.2 Outlier Test for Data Sets with NDs ............................................................................... 114

Chapter 8 Goodness-of-Fit (GOF) Tests for Uncensored and Left-Censored Data Sets . 119

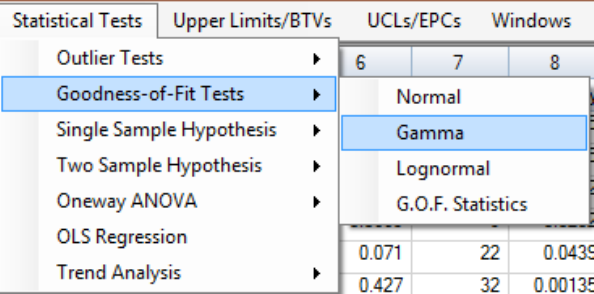

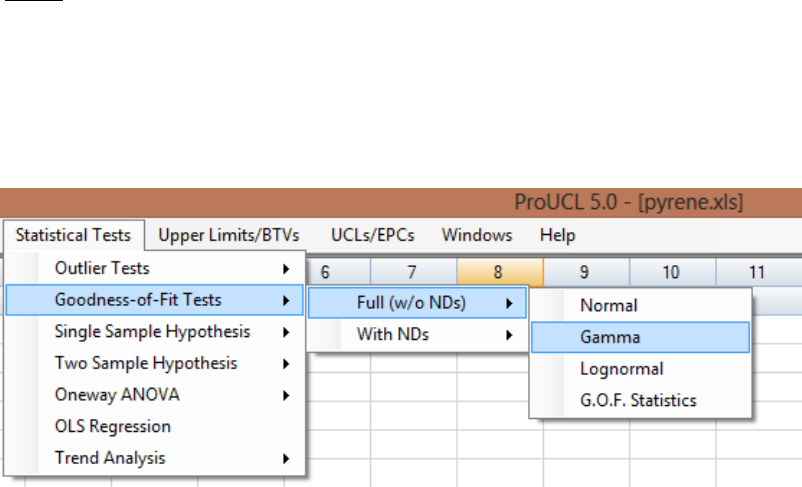

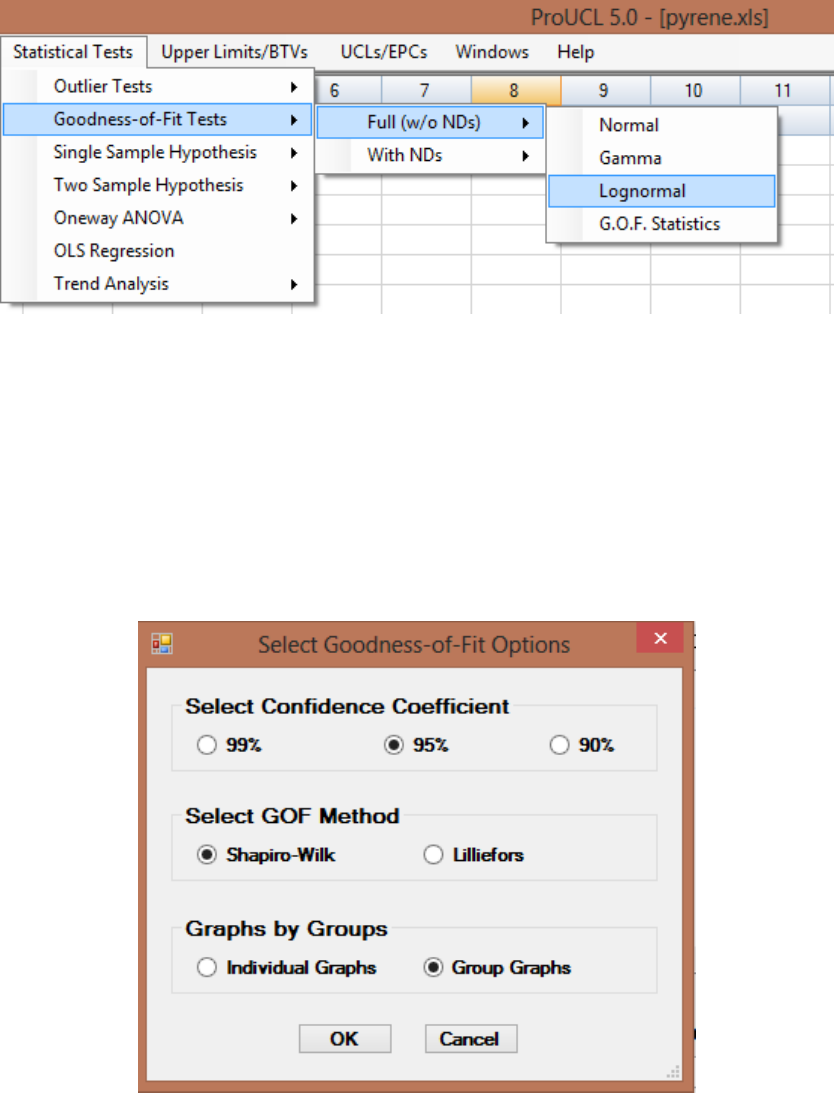

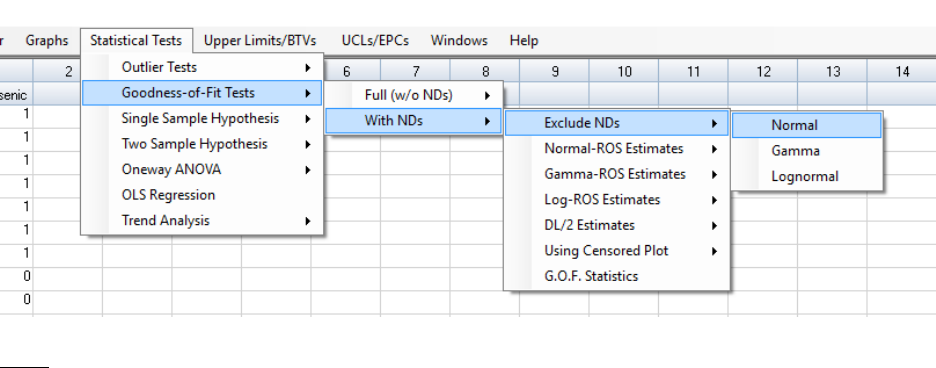

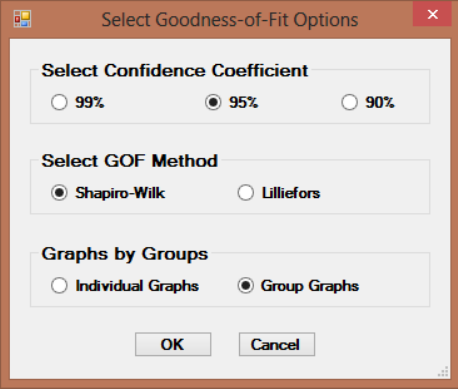

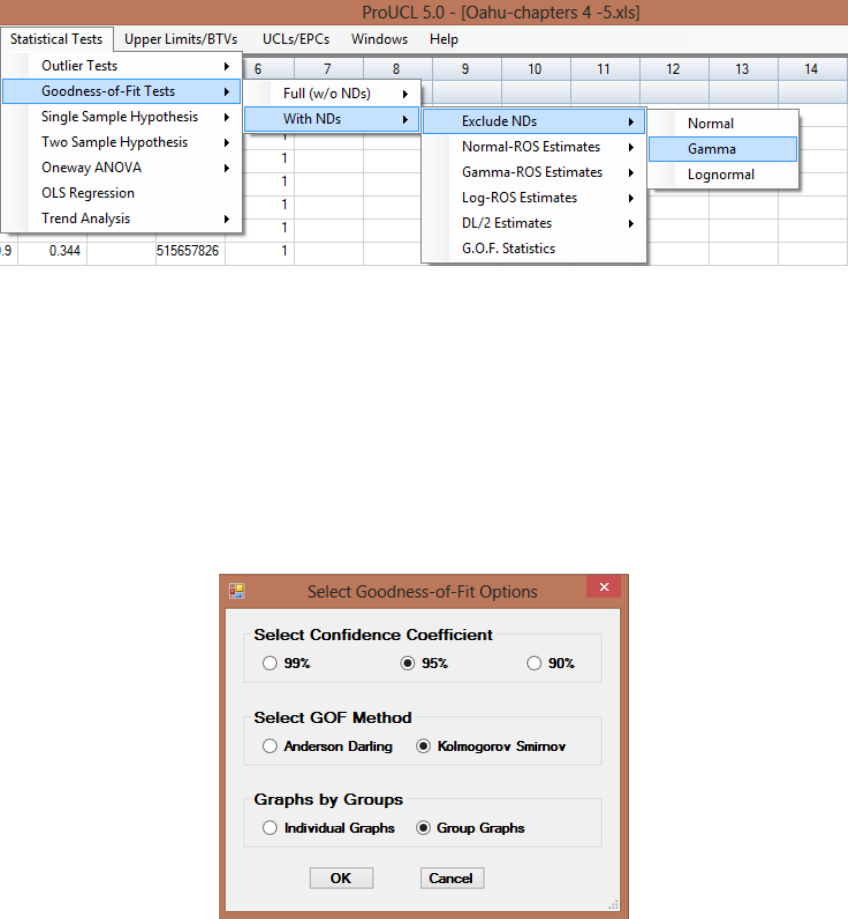

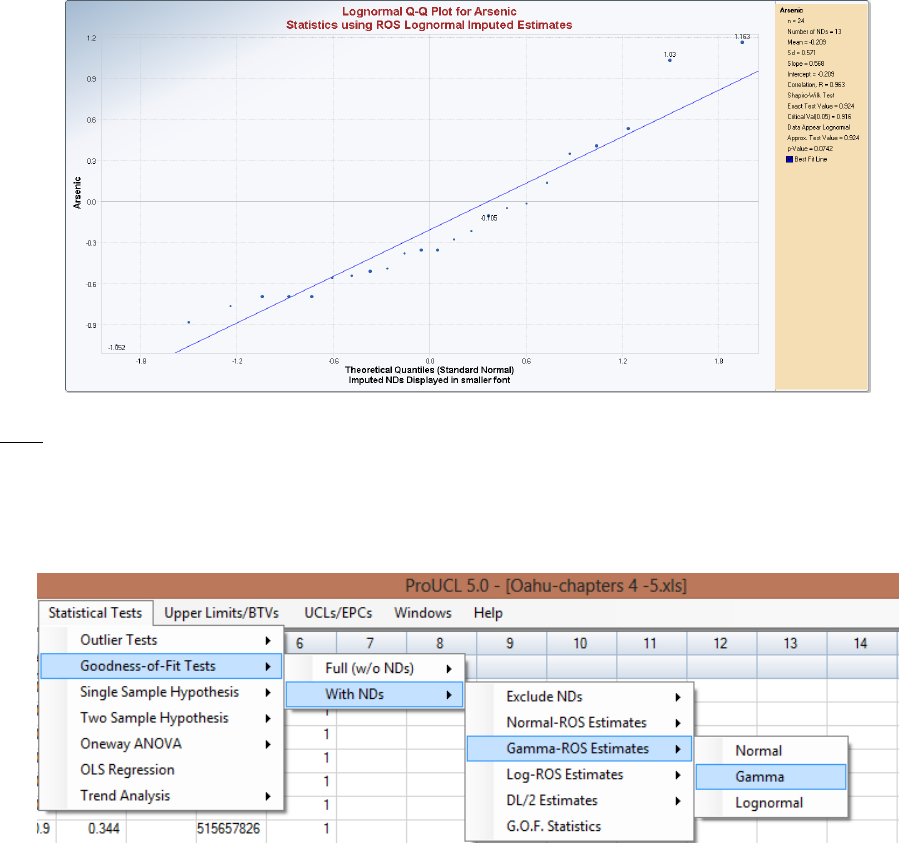

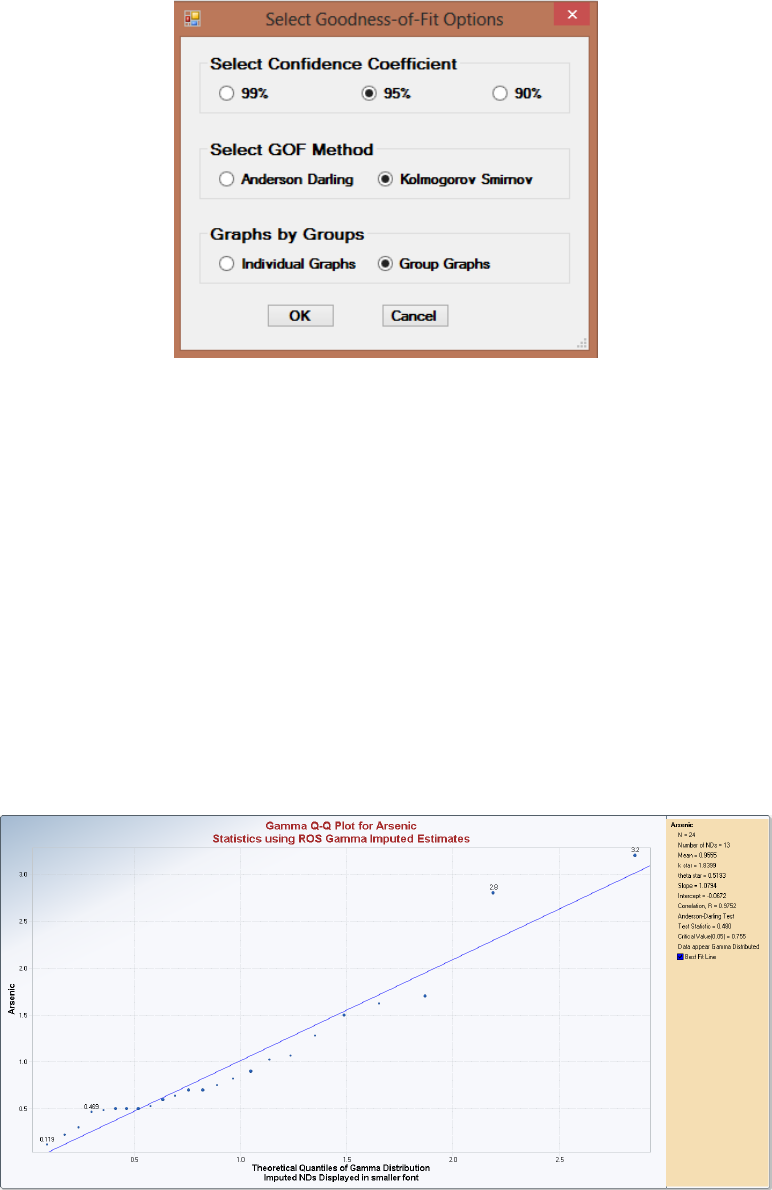

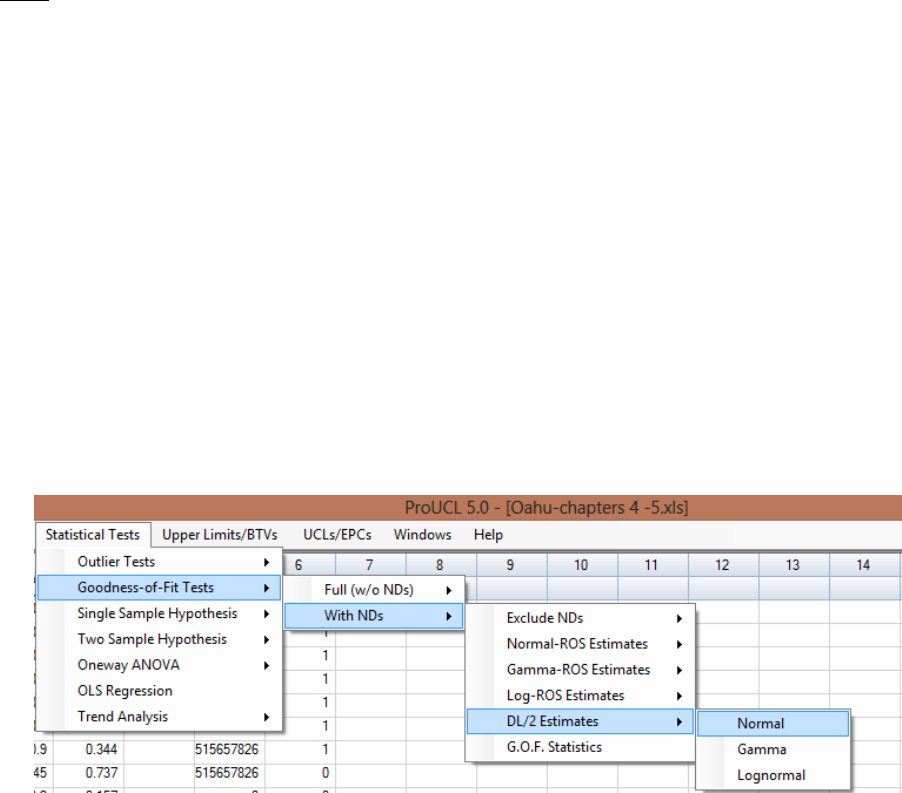

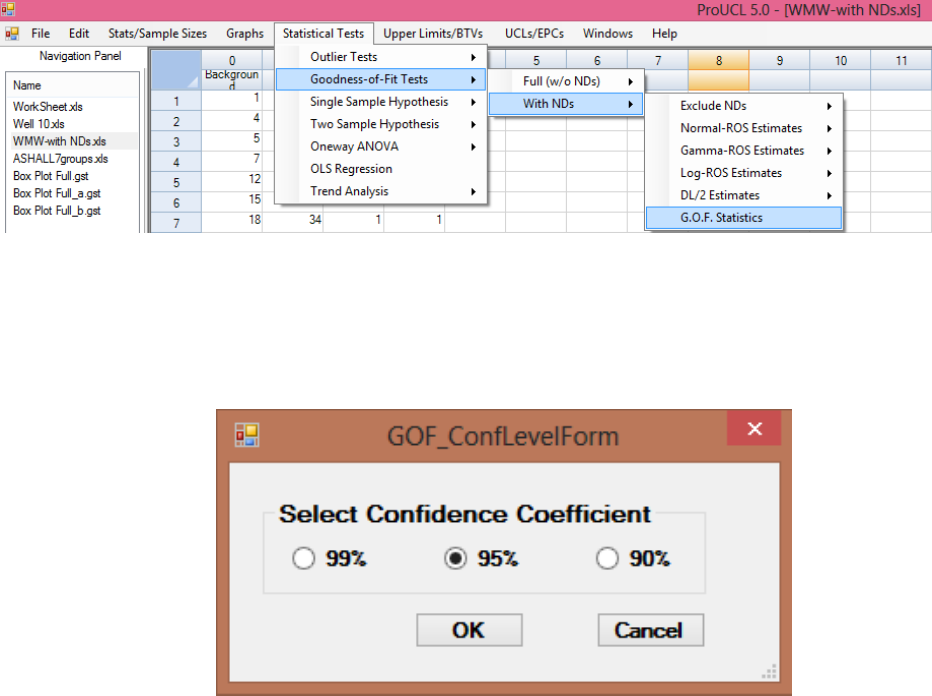

8.1 Goodness-of-Fit test in ProUCL ..................................................................................... 119

8.2 Goodness-of-Fit Tests for Uncensored Full Data Sets .................................................... 122

26

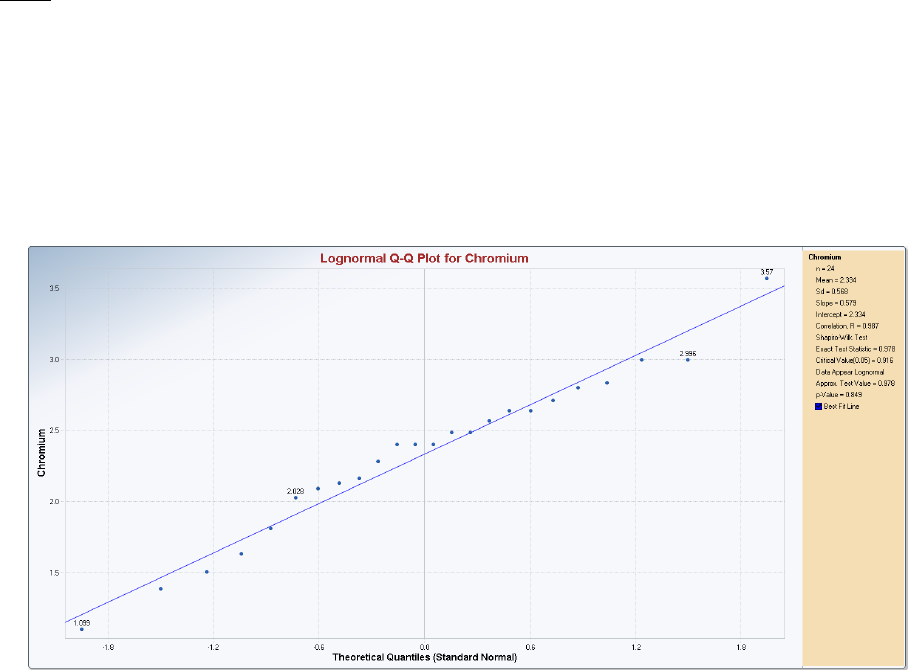

8.2.1 GOF Tests for Normal and Lognormal Distribution ......................................... 123

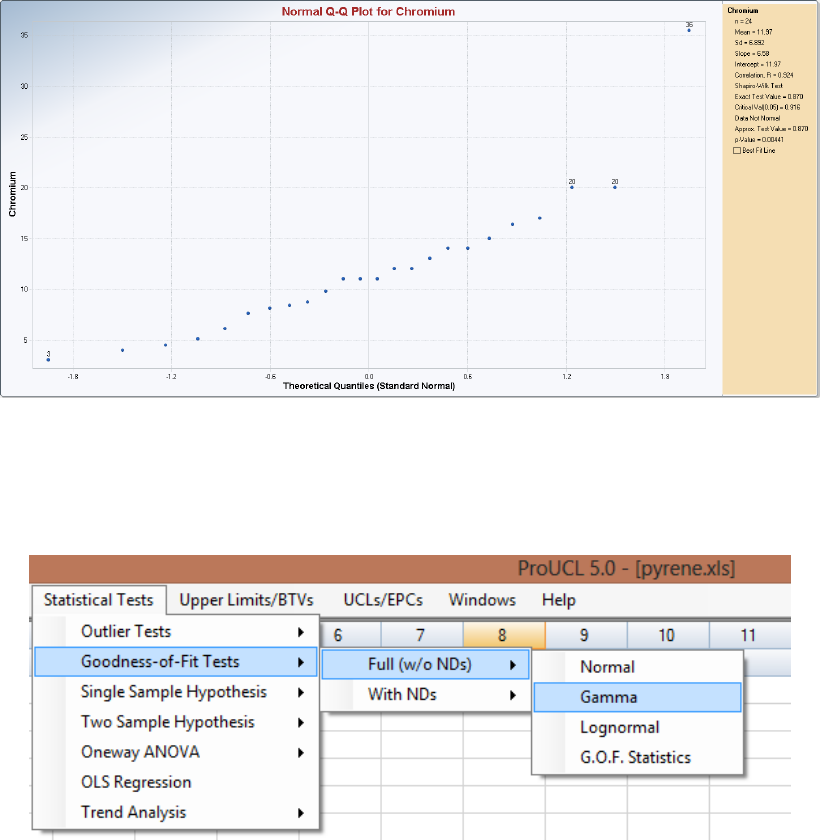

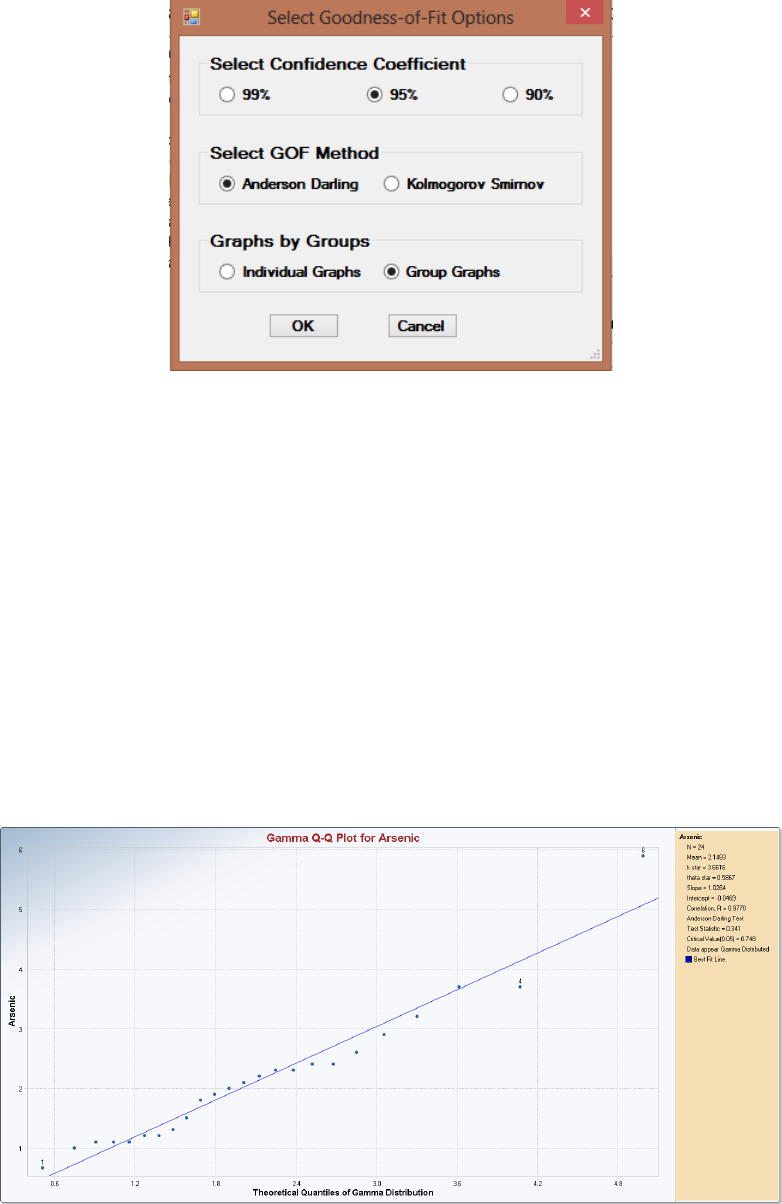

8.2.2 GOF Tests for Gamma Distribution .................................................................. 125

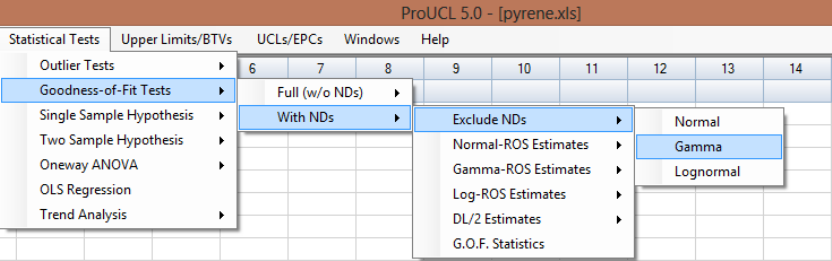

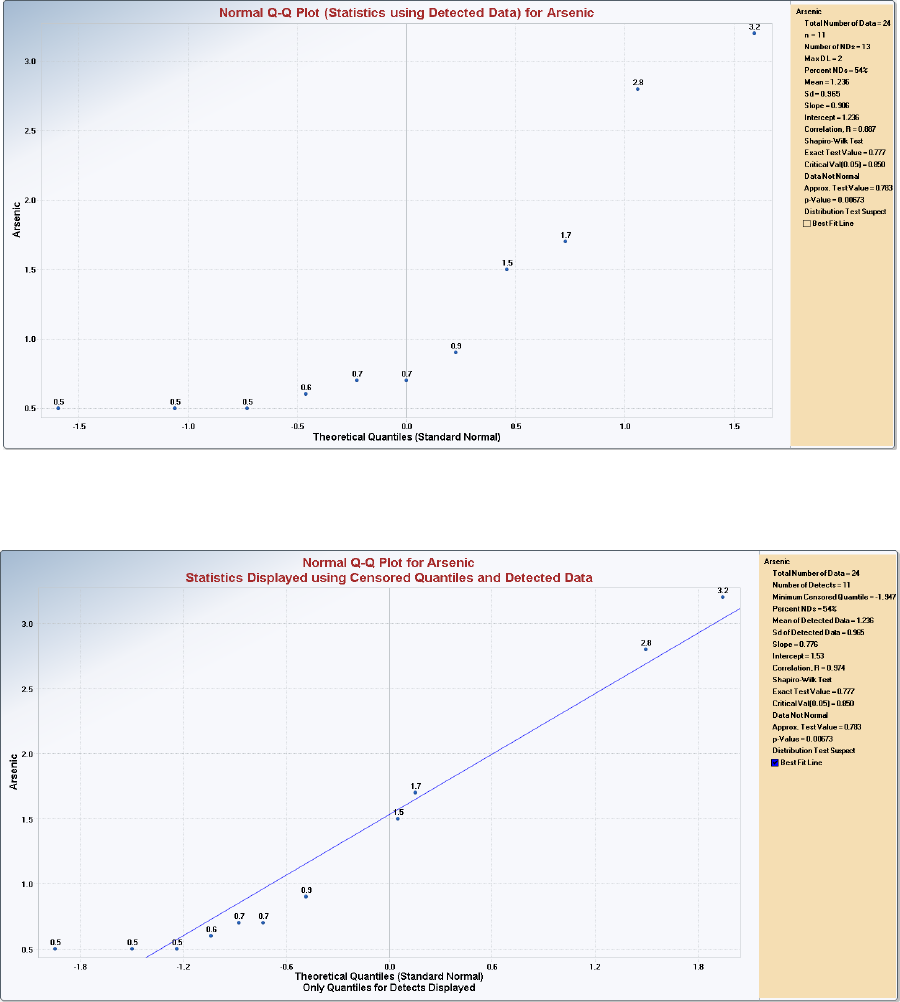

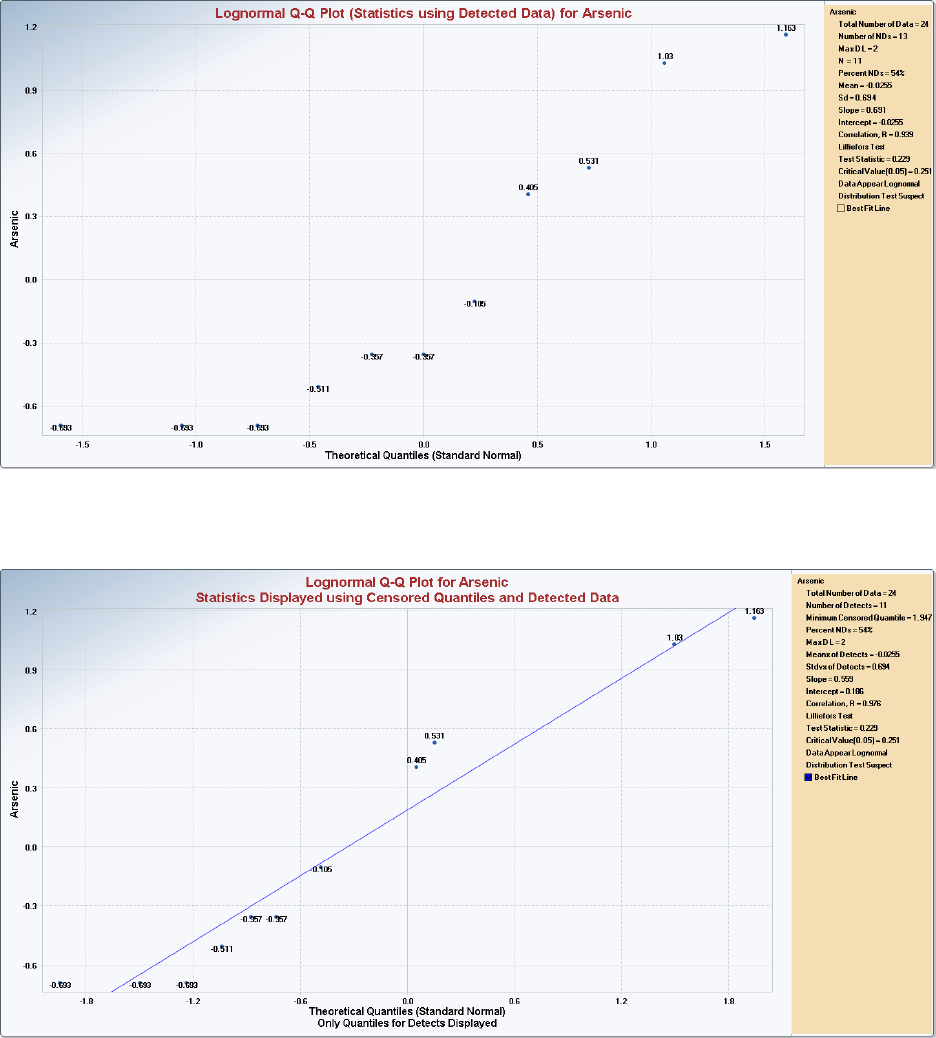

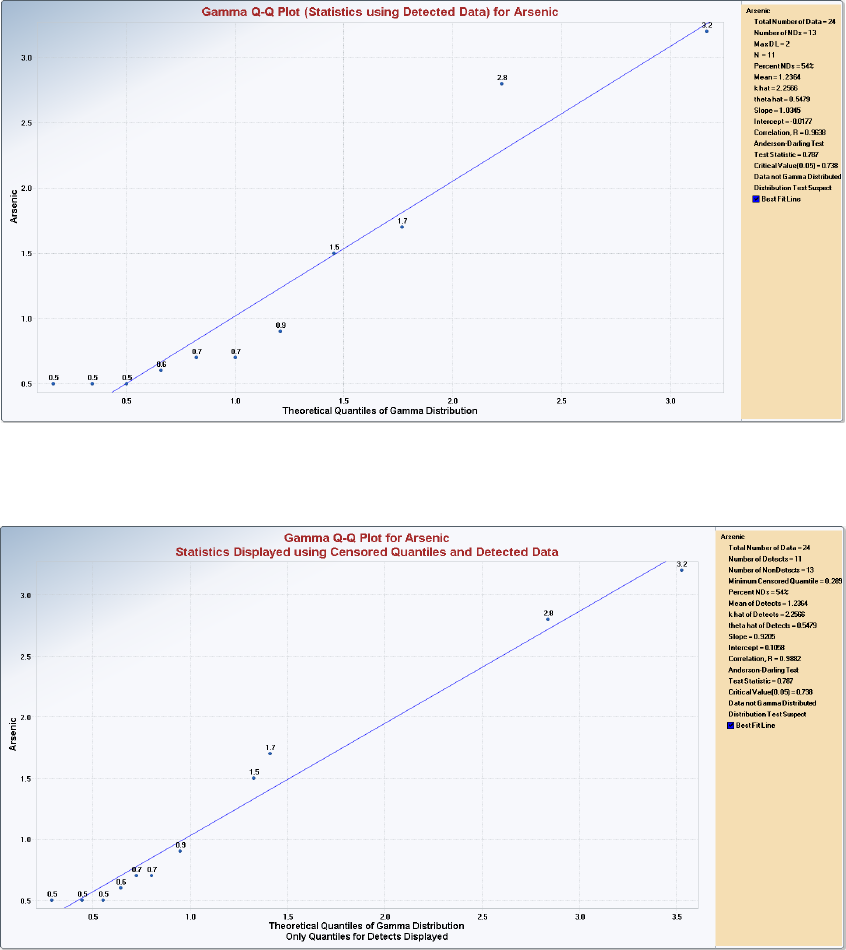

8.3 Goodness-of-Fit Tests Excluding NDs ........................................................................... 127

8.3.1 Normal and Lognormal Options ........................................................................ 127

8.3.2 Gamma Distribution Option .............................................................................. 131

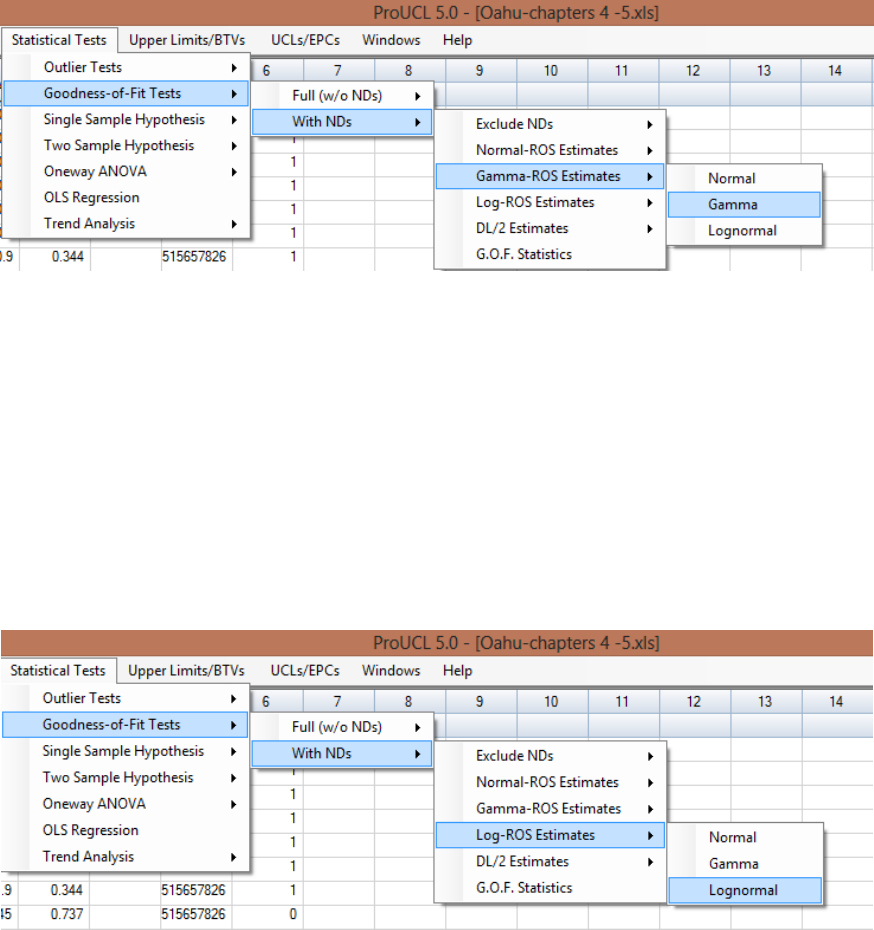

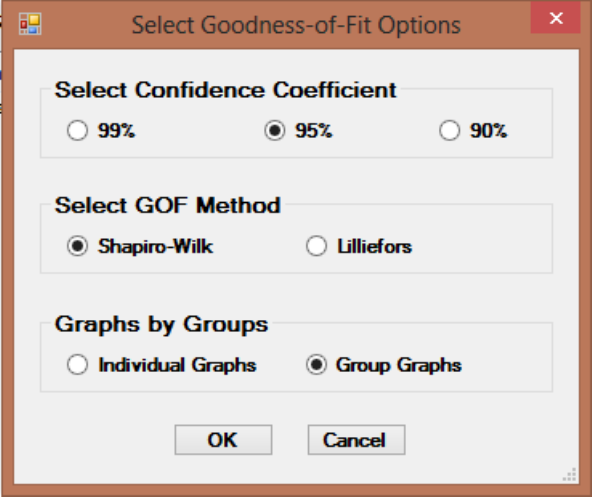

8.4 Goodness-of-Fit Tests with ROS Methods ..................................................................... 133

8.4.1 Normal or Lognormal Distribution (Log-ROS Estimates) ................................ 133

8.4.2 Gamma Distribution (Gamma-ROS Estimates) ................................................. 135

8.5 Goodness-of-Fit Tests with DL/2 Estimates ................................................................... 137

8.5.1Normal or Lognormal Distribution (DL/2 Estimates) ............................................ 137

8.6 Goodness-of-Fit Test Statistics ....................................................................................... 137

Chapter 9 Single-Sample and Two-Sample Hypotheses Testing Approaches ................ 141

9.1 Single-Sample Hypotheses Tests .................................................................................... 141

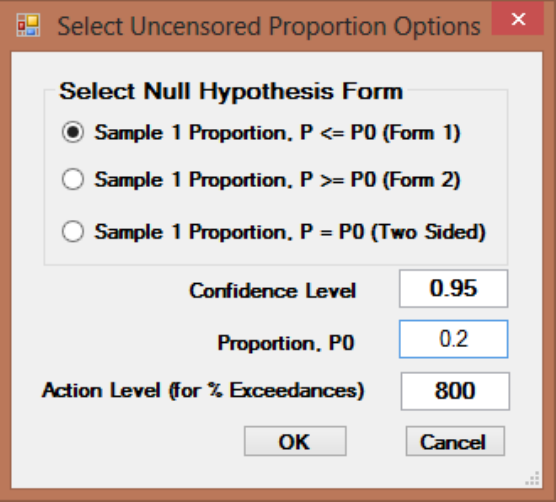

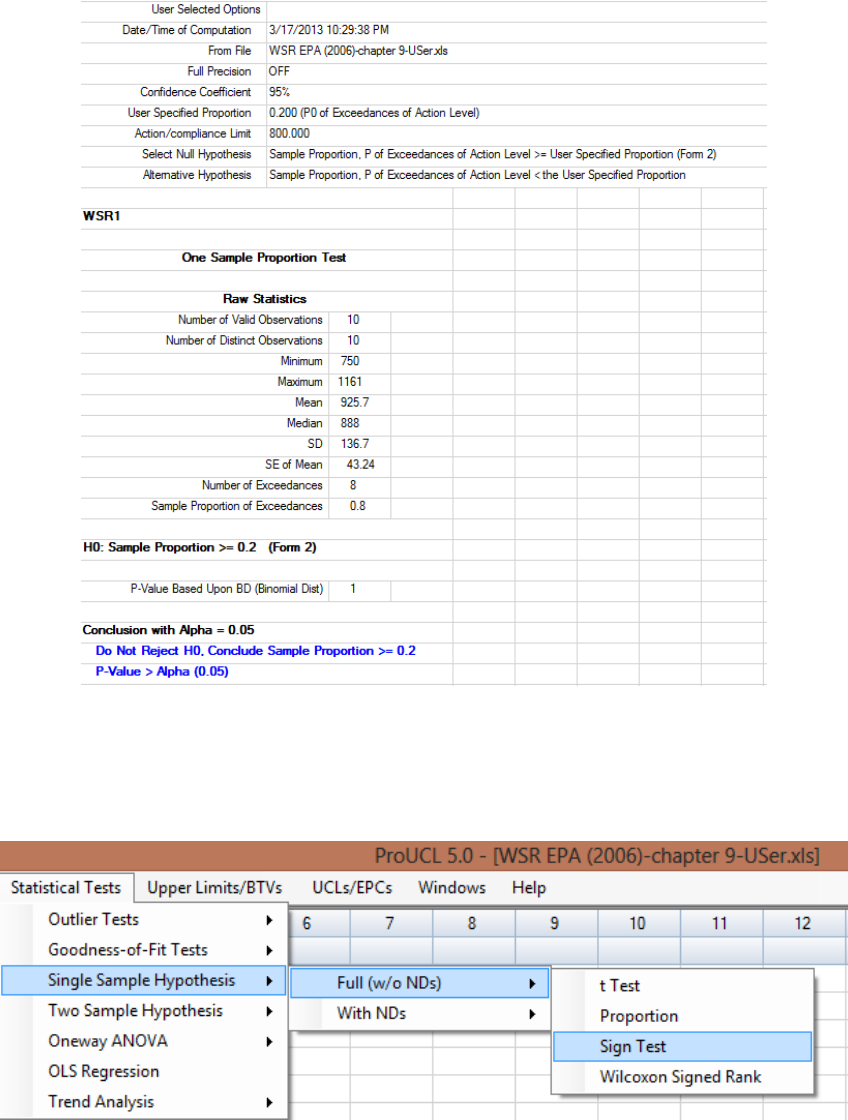

9.1.1 Single-Sample Hypothesis Testing for Full Data without Nondetects .............. 142

9.1.1.1 Single-Sample t-Test ................................................................... 143

9.1.1.2 Single-Sample Proportion Test ................................................... 144

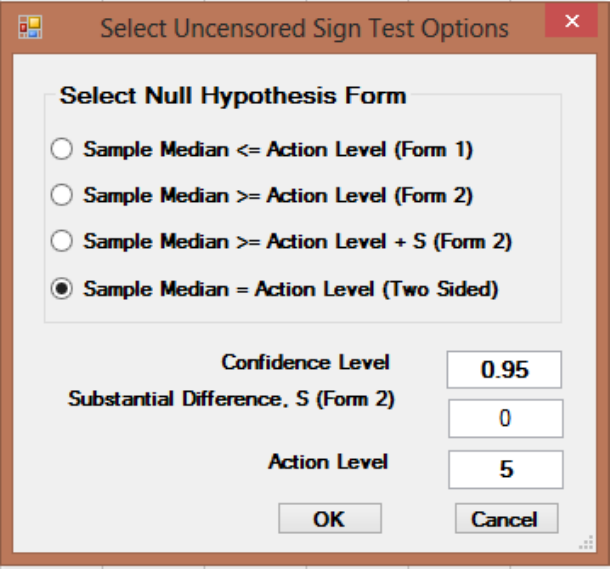

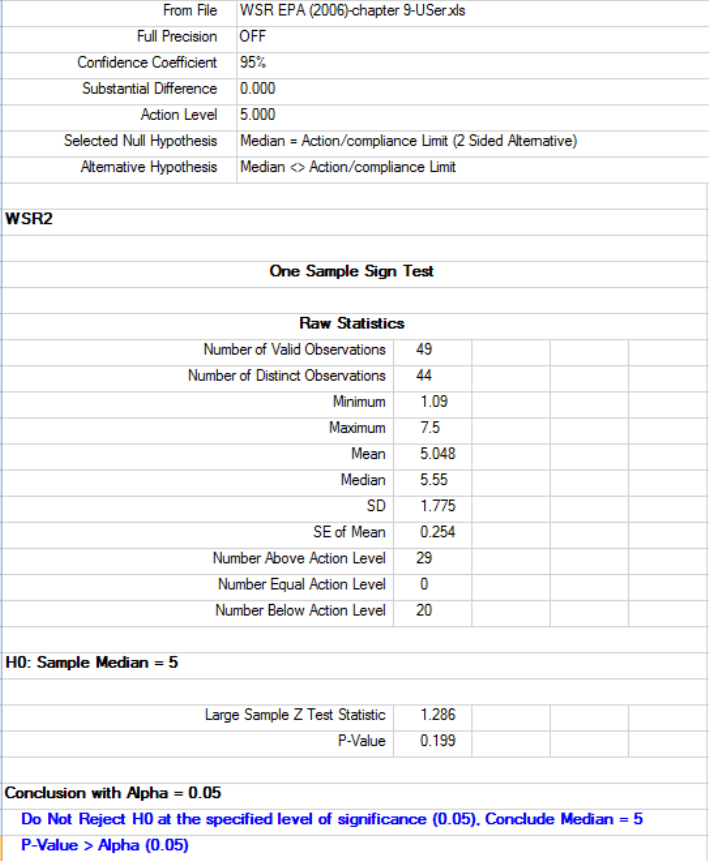

9.1.1.3 Single-Sample Sign Test .............................................................. 146

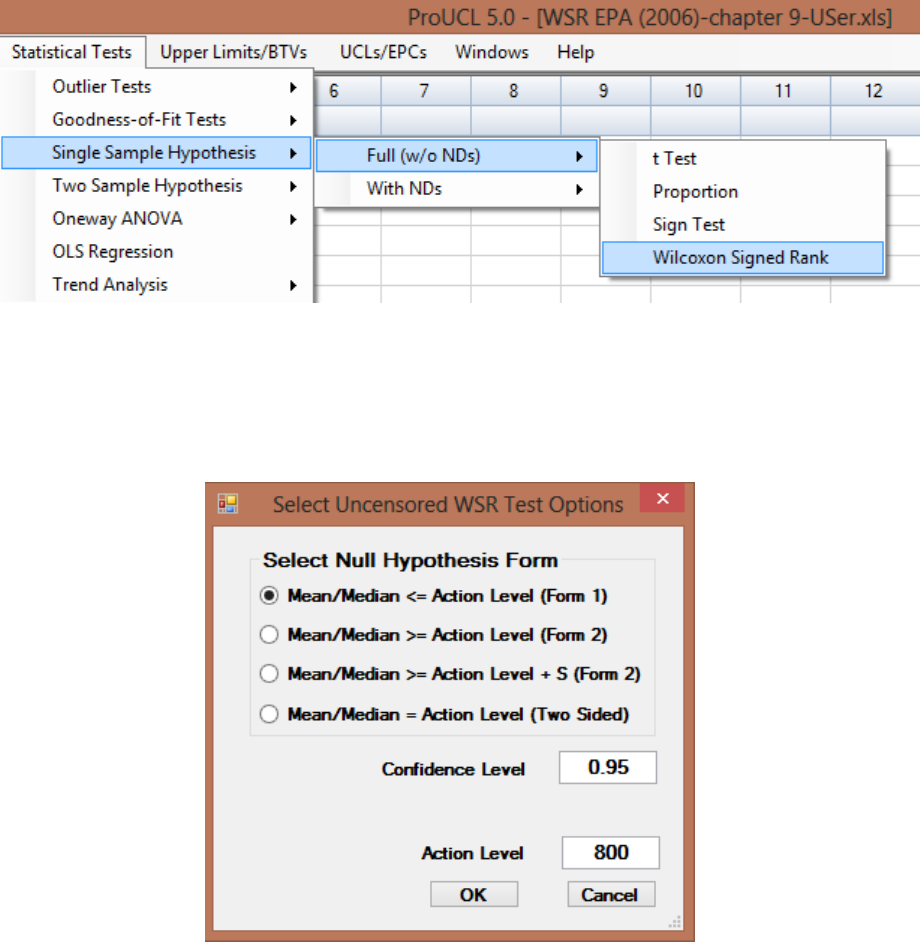

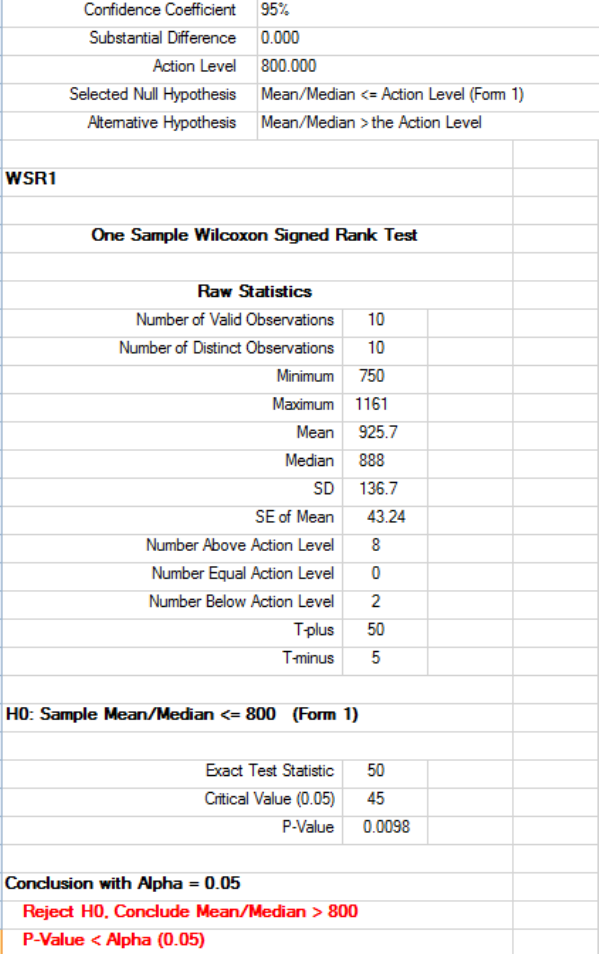

9.1.1.4 Single-Sample Wilcoxon Signed Rank (WSR) Test ..................... 149

9.1.2 Single-Sample Hypothesis Testing for Data Sets with Nondetects ................... 151

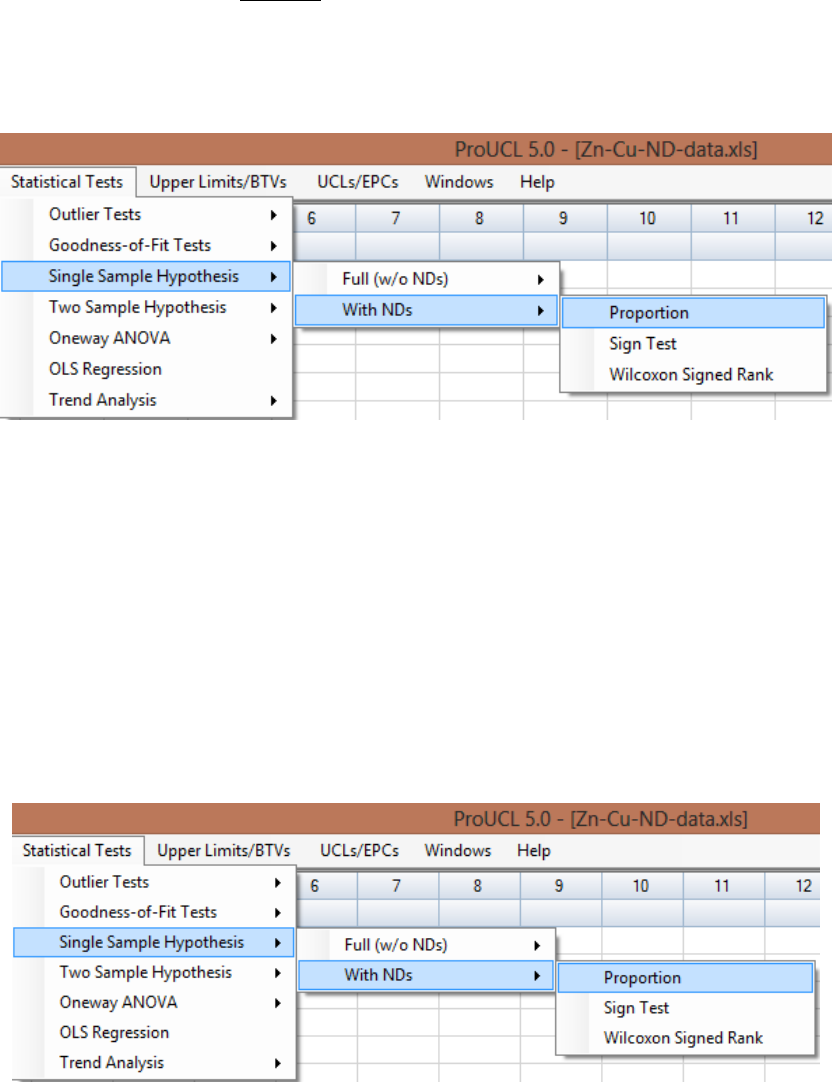

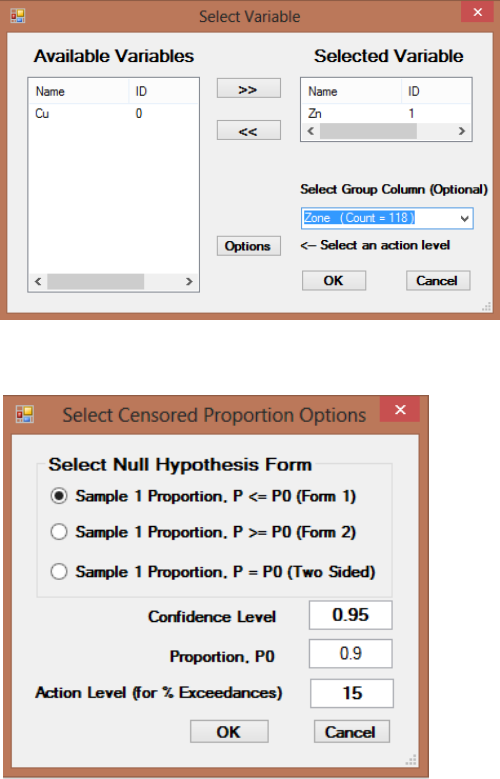

9.1.2.1 Single Proportion Test on Data Sets with NDs ........................... 151

9.1.2.2 Single-Sample Sign Test with NDs .............................................. 154

9.1.2.3 Single-Sample Wilcoxon Signed Rank Test with NDs ................. 155

9.2 Two-Sample Hypotheses Testing Approaches ............................................................... 157

9.2.1 Two-Sample Hypothesis Tests for Full Data ..................................................... 158

9.2.1.1 Two-Sample t-Test without NDs ................................................. 160

9.2.1.2 Two-Sample Wilcoxon-Mann-Whitney (WMW) Test without NDs

..................................................................................................... 163

9.2.2 Two-Sample Hypothesis Testing for Data Sets with Nondetects ...................... 165

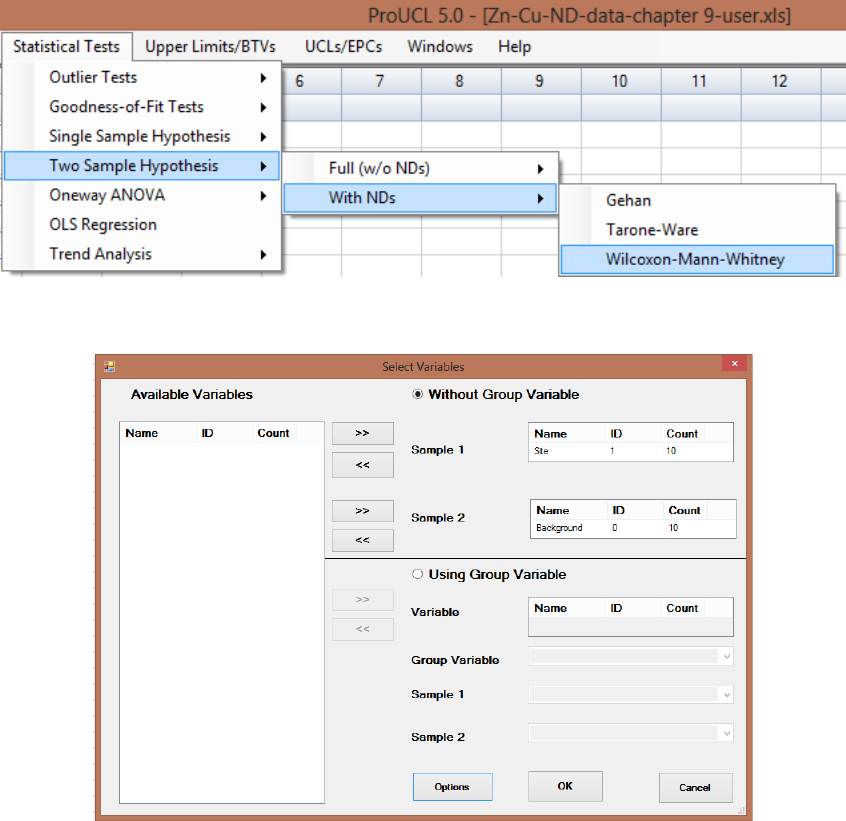

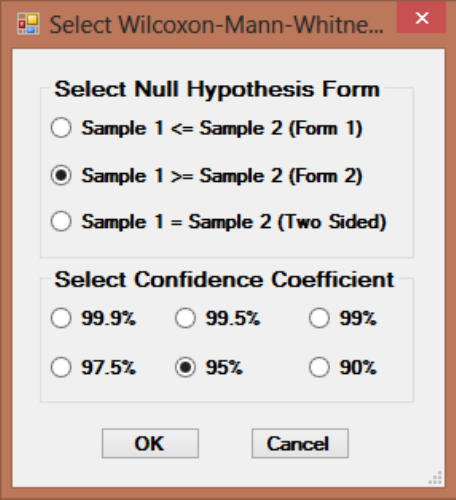

9.2.2.1 Two-Sample Wilcoxon-Mann-Whitney Test with Nondetects ..... 166

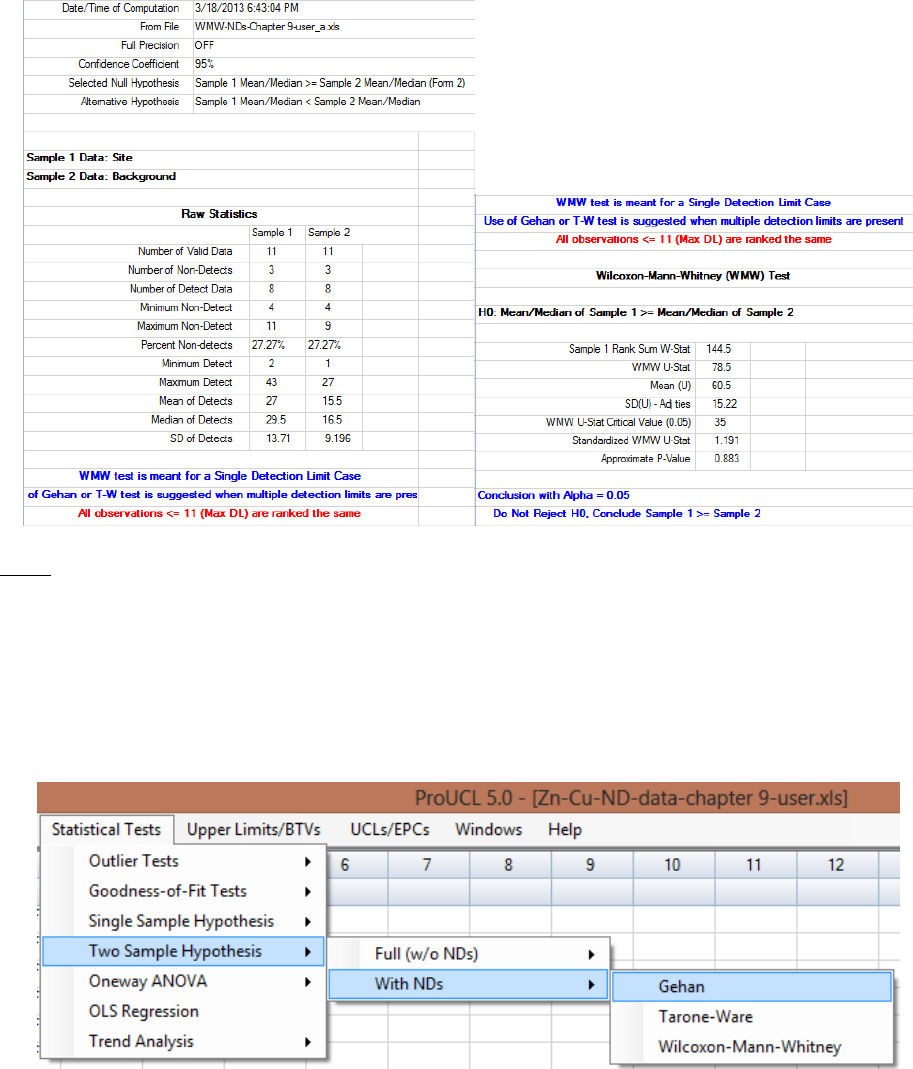

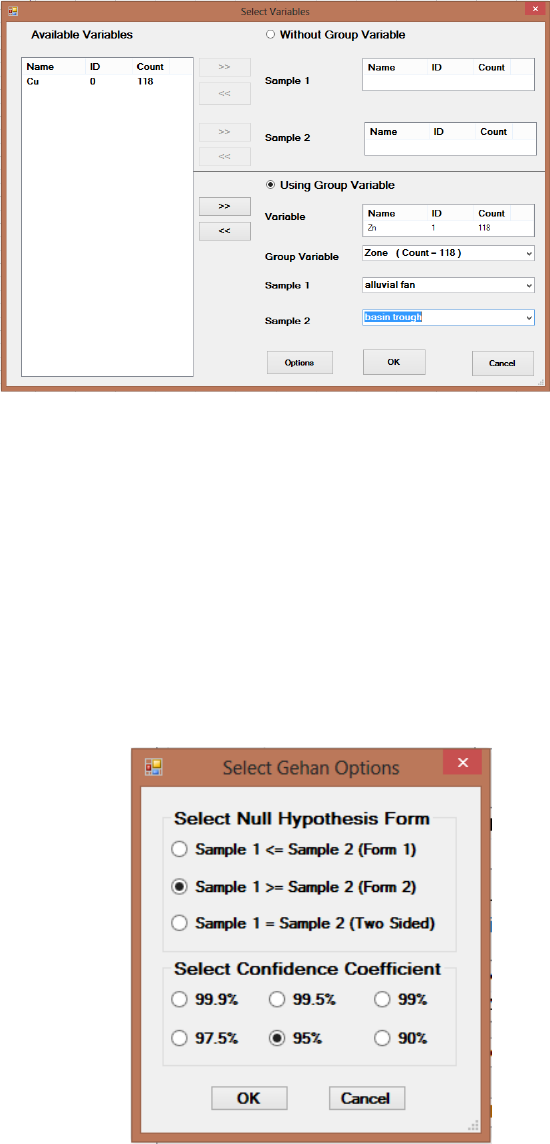

9.2.2.2 Two-Sample Gehan Test for Data Sets with Nondetects ............ 168

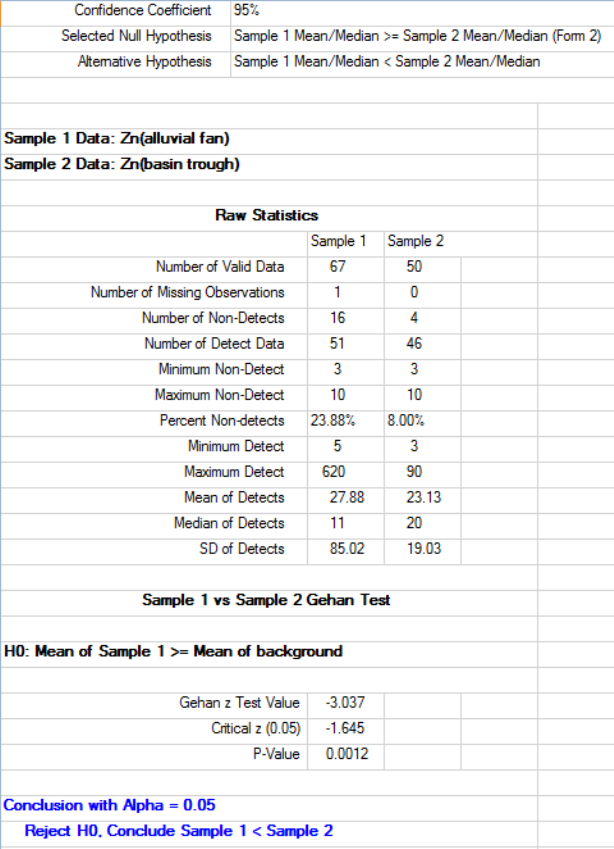

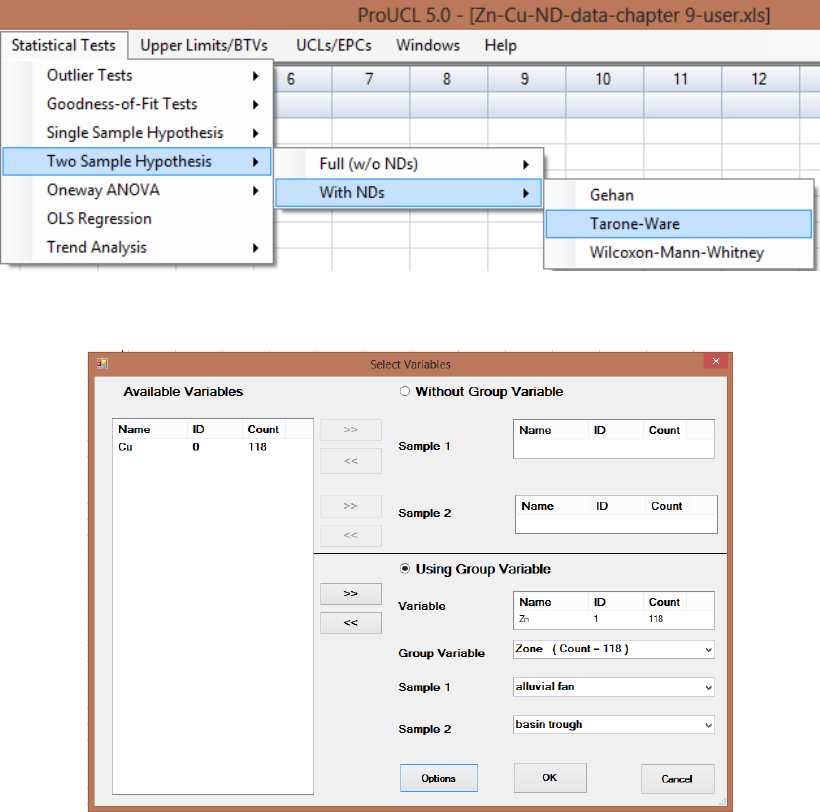

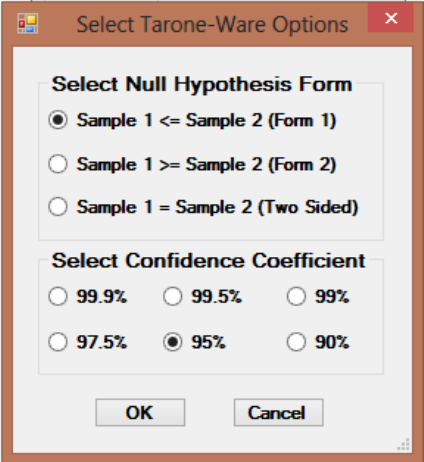

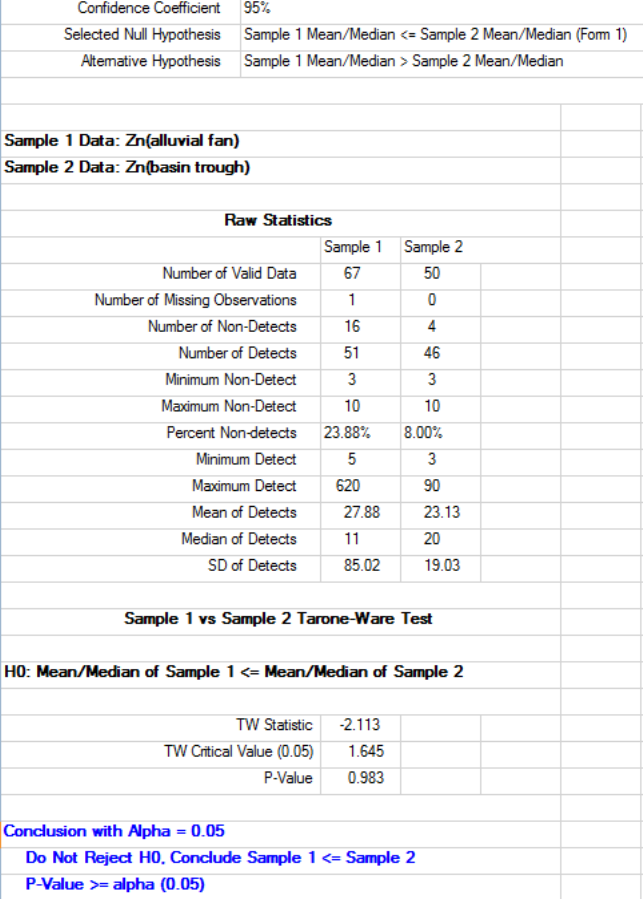

9.2.2.3 Two-Sample Tarone-Ware Test for Data Sets with Nondetects.. 171

Chapter 10 Computing Upper Limits to Estimate Background Threshold Values Based

Upon Full Uncensored Data Sets and Left-Censored Data Sets with Nondetects 175

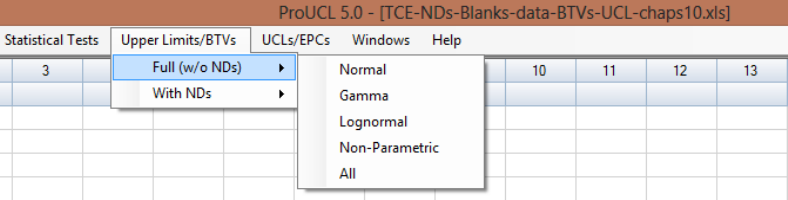

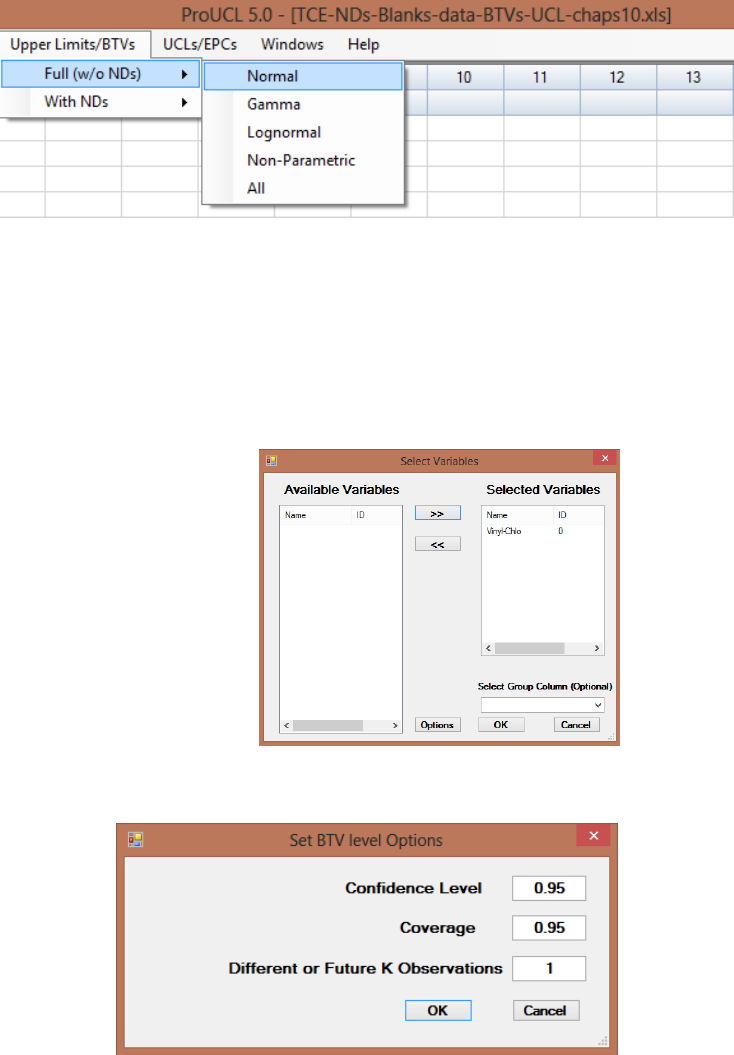

10.1 Background Statistics for Full Data Sets without Nondetects ........................................ 176

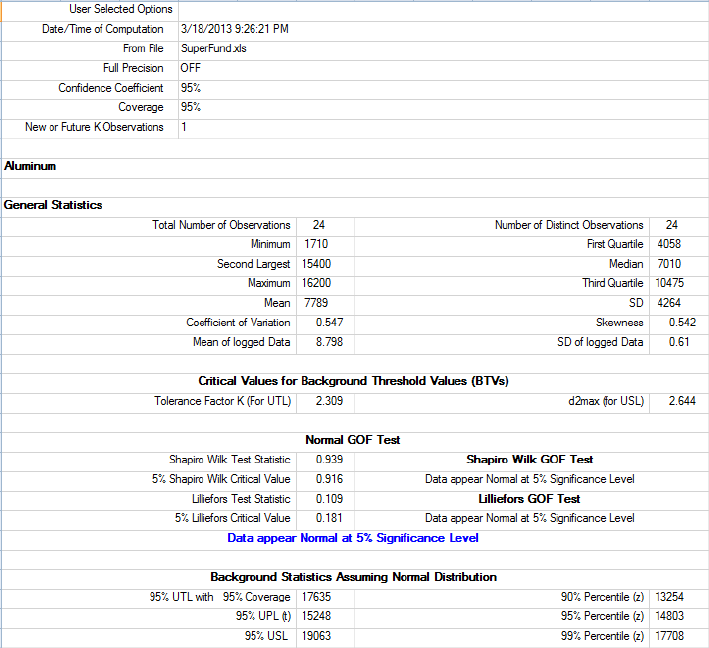

10.1.1 Normal or Lognormal Distribution .................................................................... 177

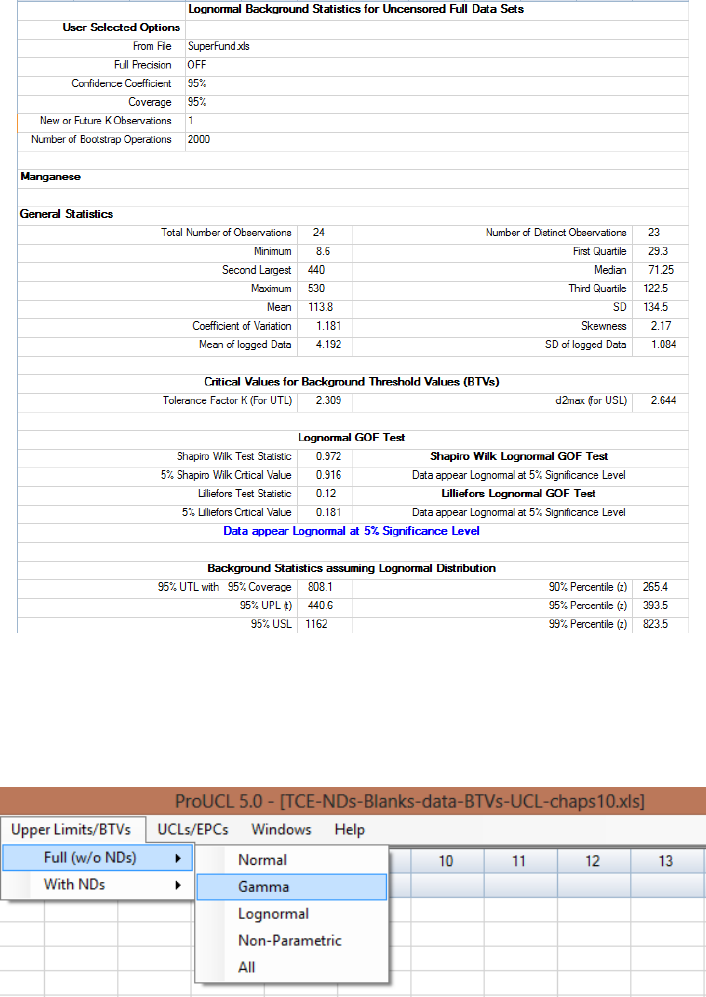

10.1.2 Gamma Distribution .......................................................................................... 179

10.1.3 Nonparametric Methods .................................................................................... 182

10.1.4 All Statistics Option ........................................................................................... 184

10.2 Background Statistics with NDs ..................................................................................... 186

10.2.1 Normal or Lognormal Distribution .................................................................... 187

10.2.2 Gamma Distribution .......................................................................................... 190

10.2.3 Nonparametric Methods (with NDs) ................................................................. 193

10.2.4 All Statistics Option ........................................................................................... 194

Chapter 11 Computing Upper Confidence Limits (UCLs) of Mean Based Upon Full-

Uncensored Data Sets and Left-Censored Data Sets with Nondetects ................. 200

11.1 UCLs for Full (w/o NDs) Data Sets ................................................................................ 202

11.1.1 Normal Distribution (Full Data Sets without NDs) ........................................... 202

27

11.1.2 Gamma, Lognormal, Nonparametric, All Statistics Option (Full Data without

NDs) ................................................................................................................... 204

11.2 UCL for Left-Censored Data Sets with NDs .................................................................. 208

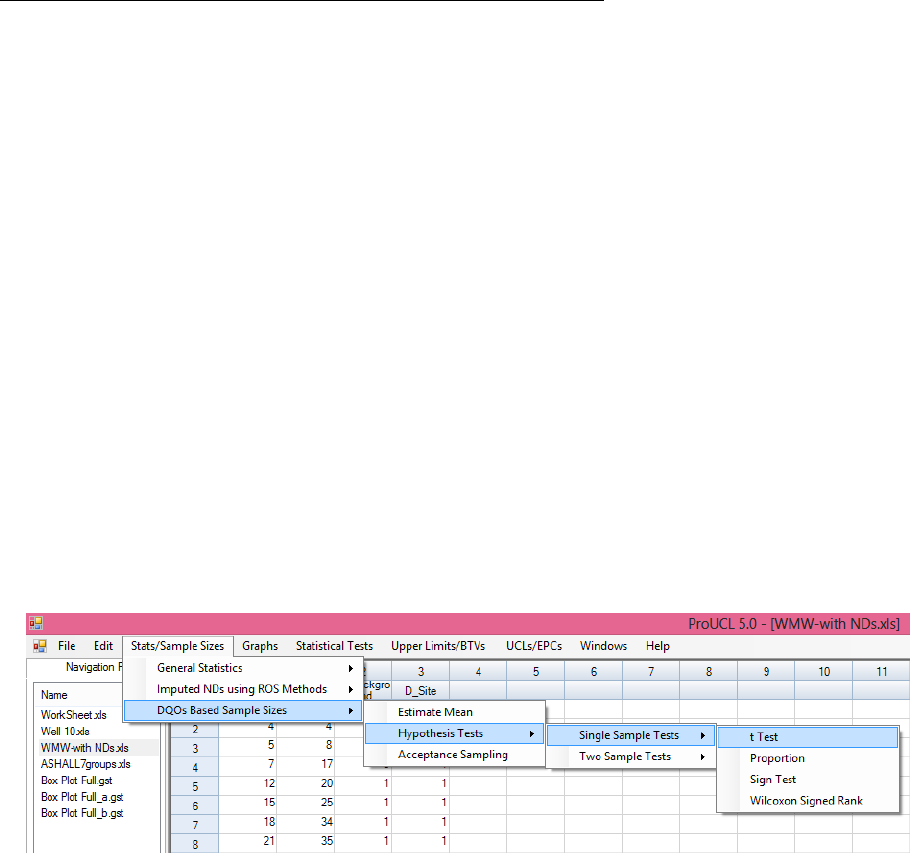

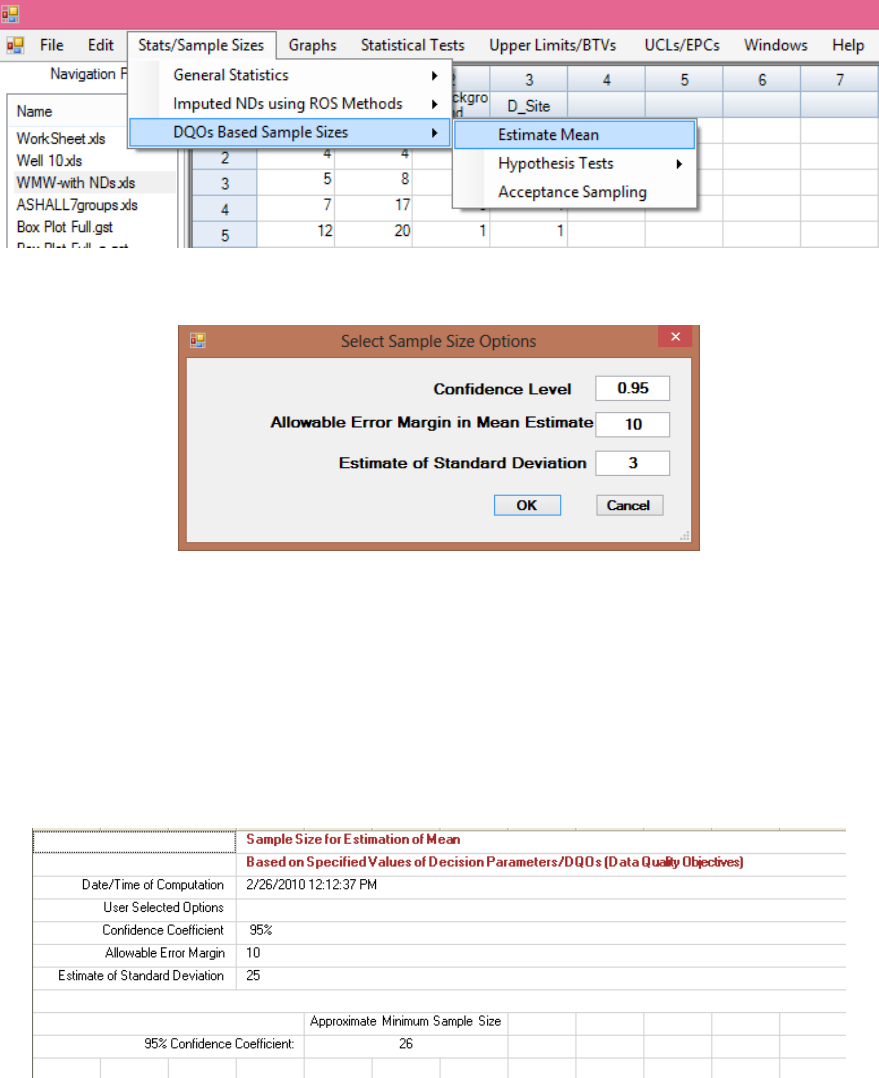

Chapter 12 Sample Sizes Based Upon User Specified Data Quality Objectives (DQOs)

and Power Assessment ............................................................................................ 212

12.1 Estimation of Mean ......................................................................................................... 214

12.2 Sample Sizes for Single-Sample Hypothesis Tests......................................................... 215

12.2.1 Sample Size for Single-Sample t-Test ............................................................... 215

12.2.2 Sample Size for Single-Sample Proportion Test ............................................... 216

12.2.3 Sample Size for Single-Sample Sign Test ......................................................... 217

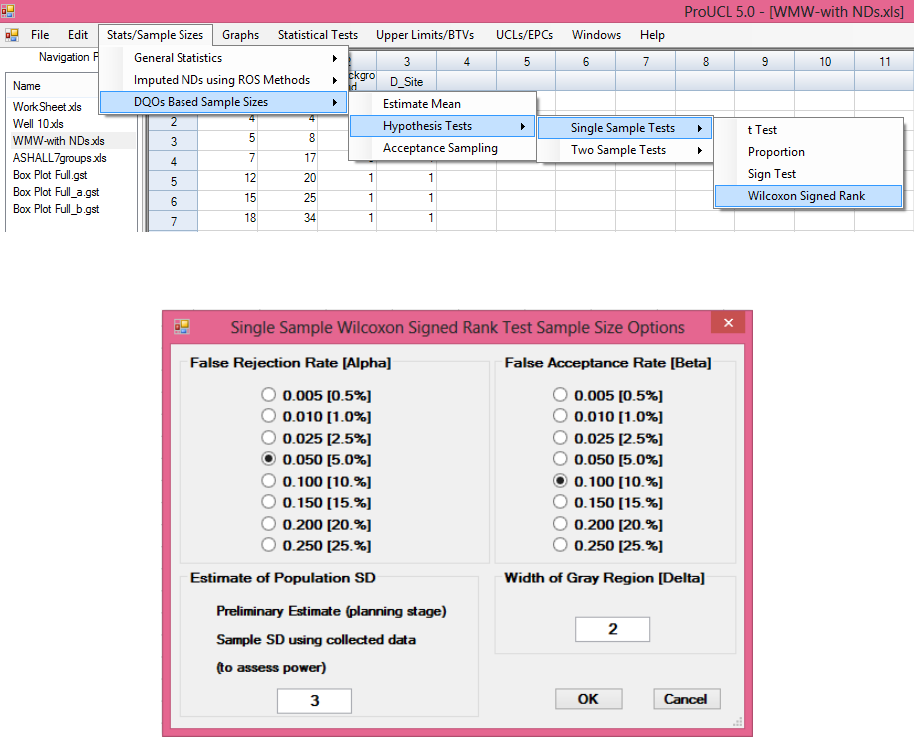

12.2.4 Sample Size for Single-Sample Wilcoxon Signed Rank Test ........................... 219

12.3 Sample Sizes for Two-Sample Hypothesis Tests ........................................................... 220

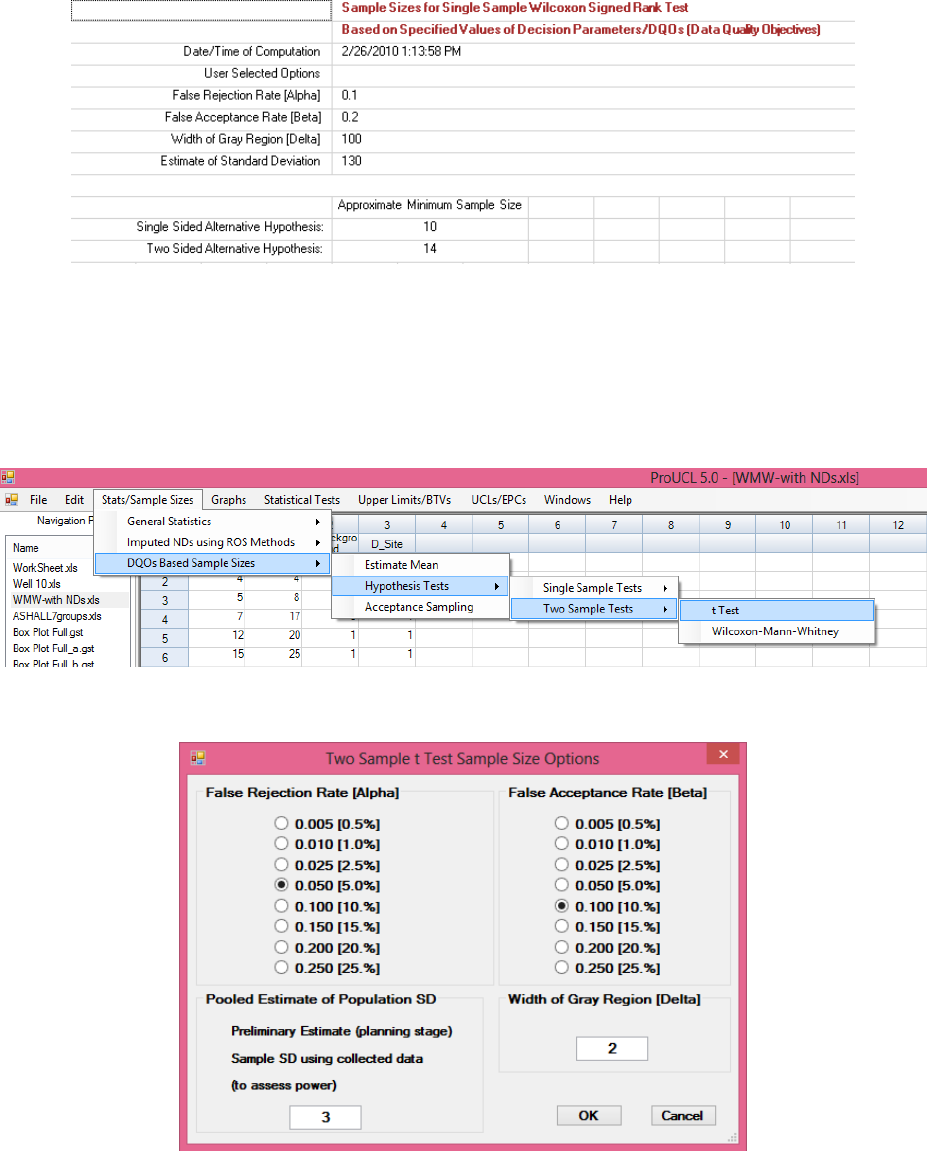

12.3.1 Sample Size for Two-Sample t-Test .................................................................. 220

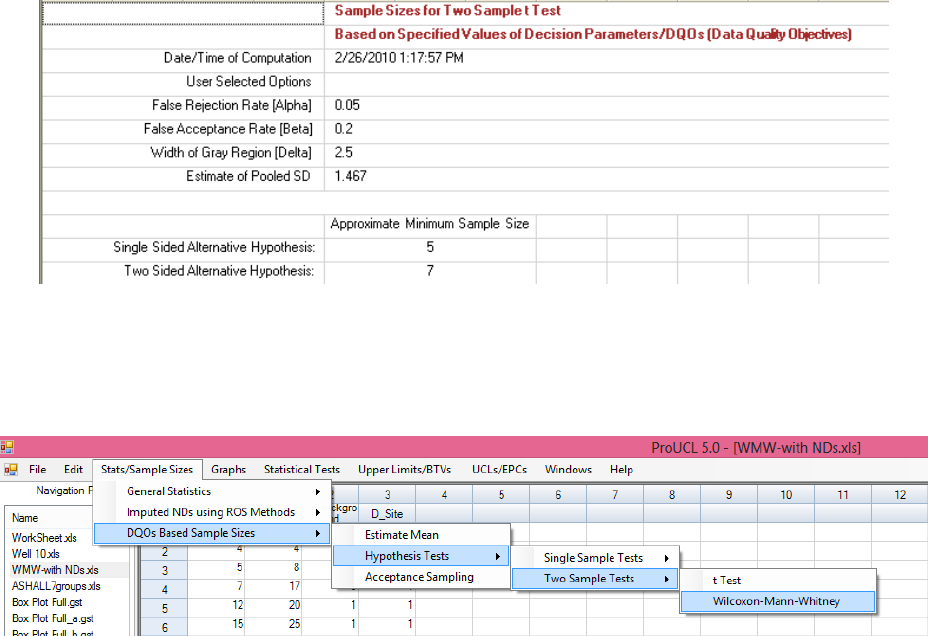

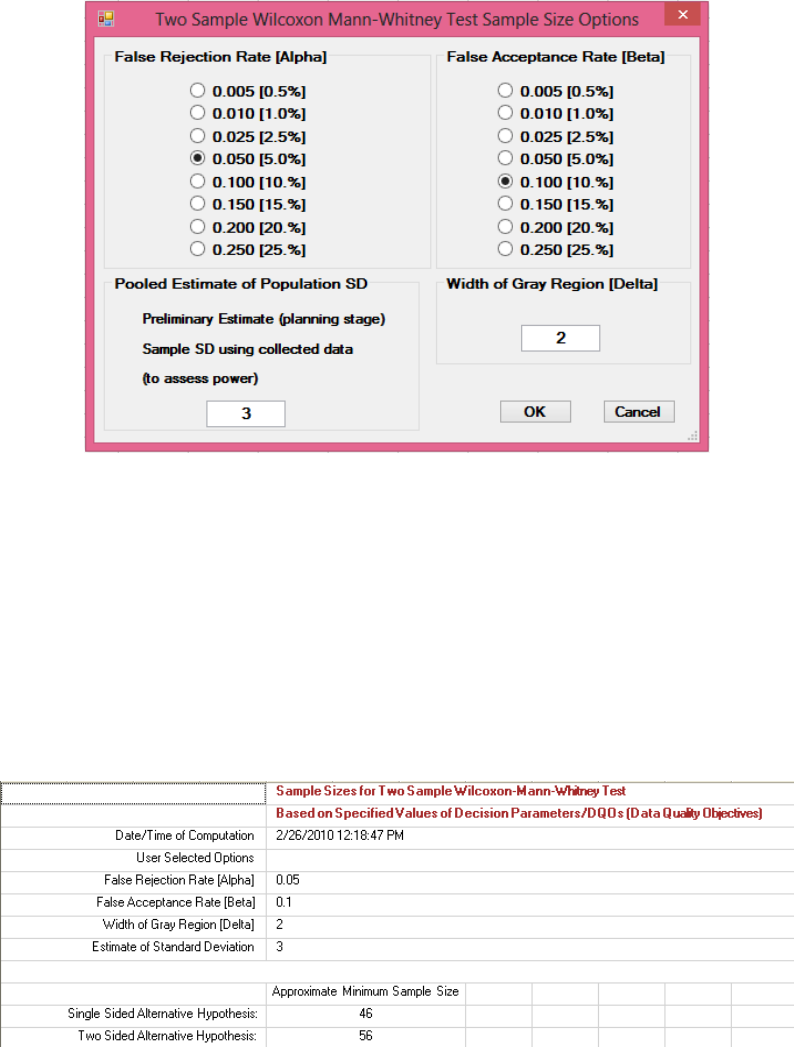

12.3.2 Sample Size for Two-Sample Wilcoxon Mann-Whitney Test .......................... 221

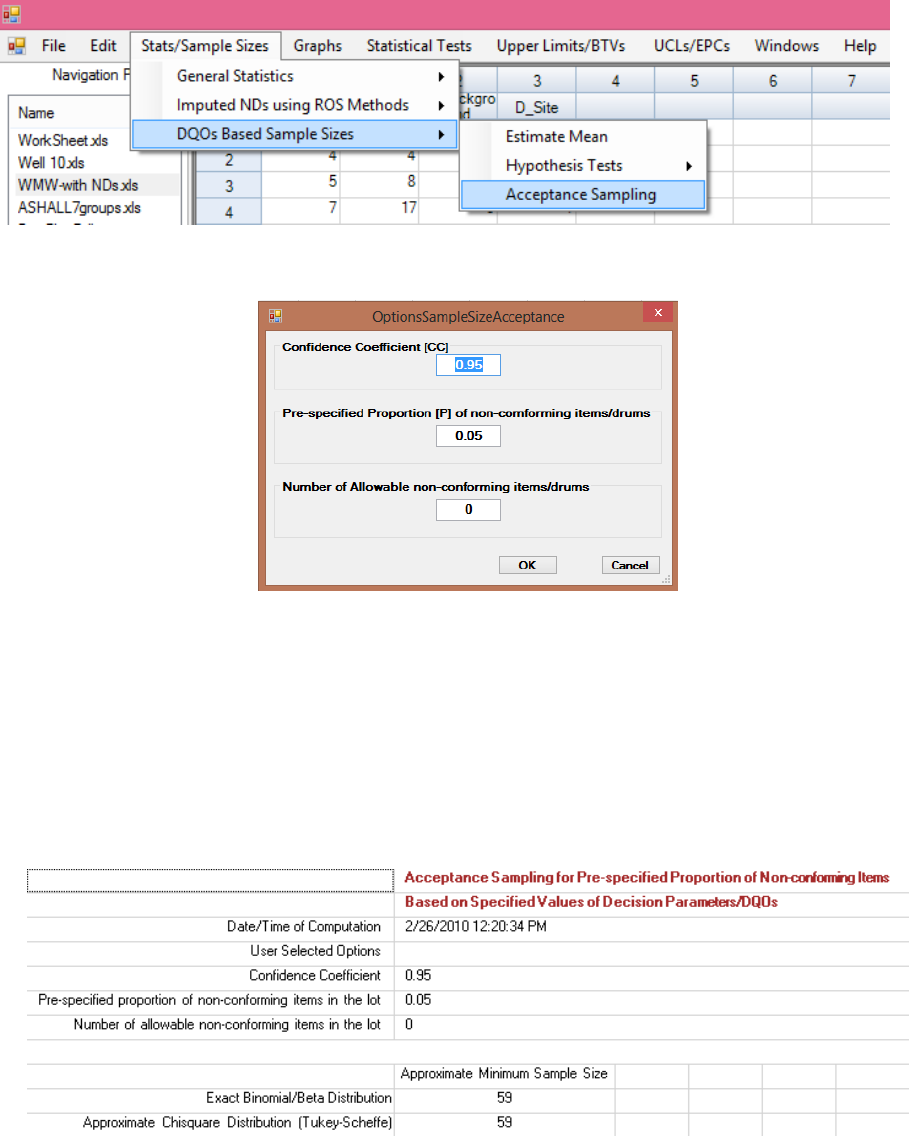

12.4 Sample Sizes for Acceptance Sampling ......................................................................... 223

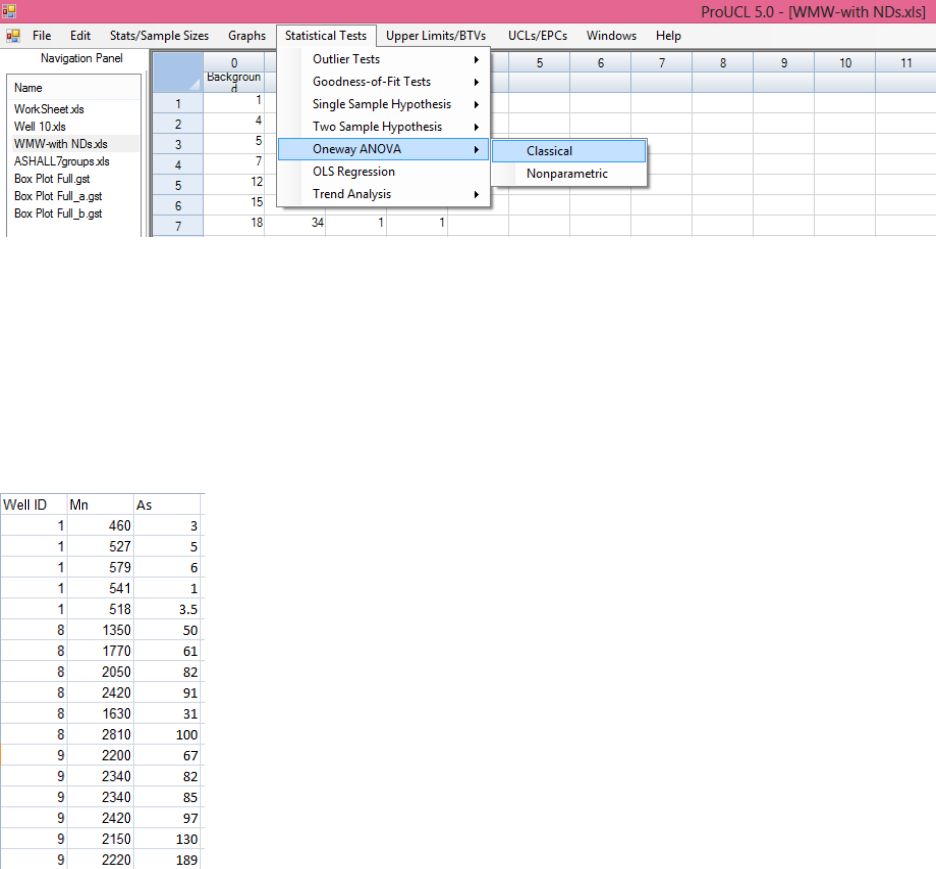

Chapter 13 Analysis of Variance ......................................................................................... 224

13.1 Classical Oneway ANOVA ............................................................................................ 224

13.2 Nonparametric ANOVA ................................................................................................. 226

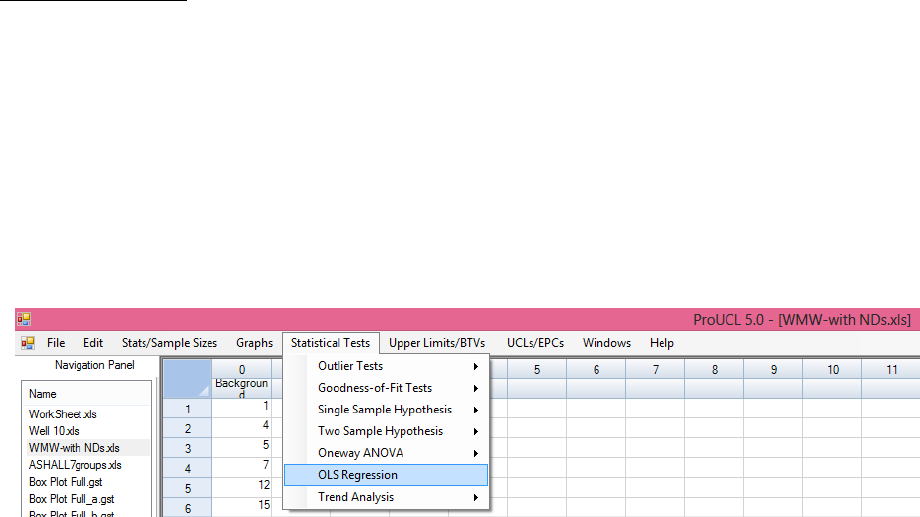

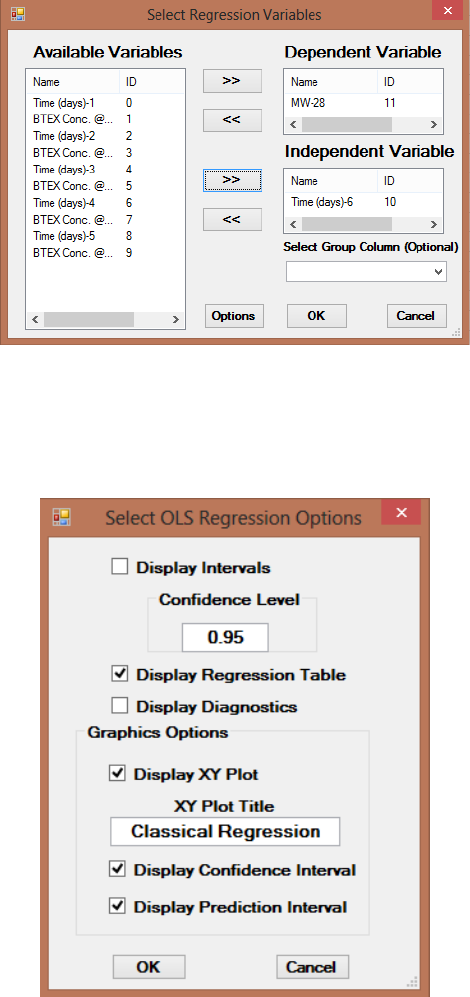

Chapter 14 Ordinary Least Squares of Regression and Trend Analysis ......................... 228

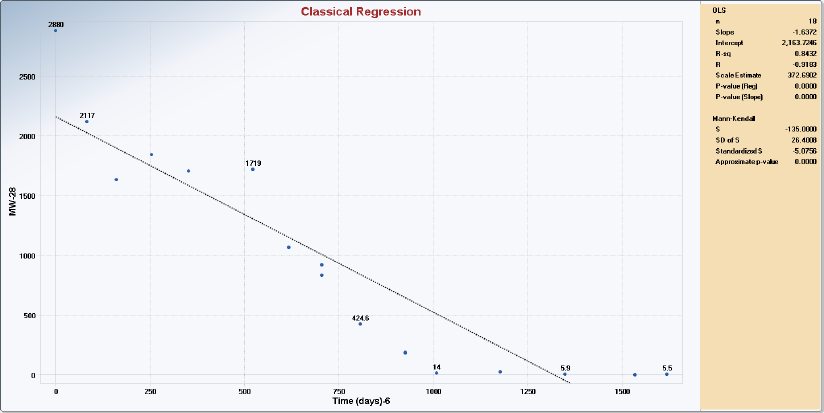

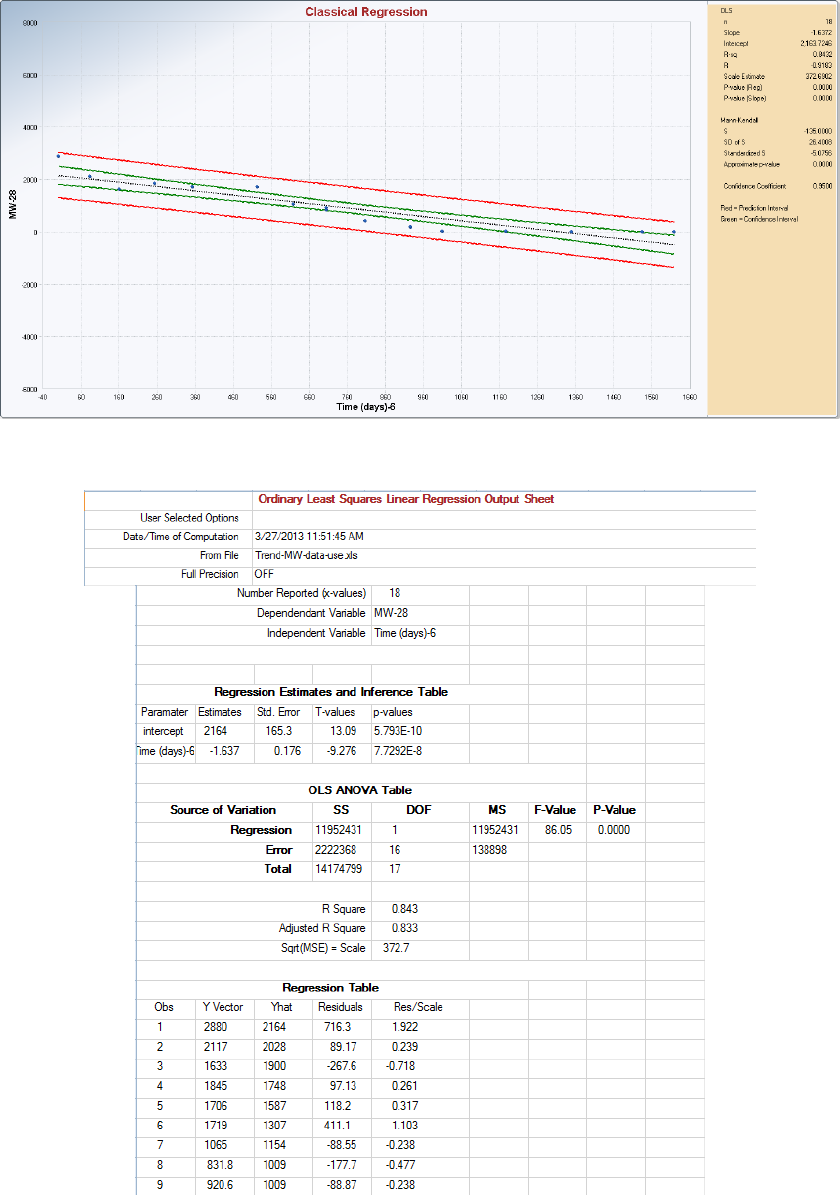

14.1 Simple Linear Regression ............................................................................................... 228

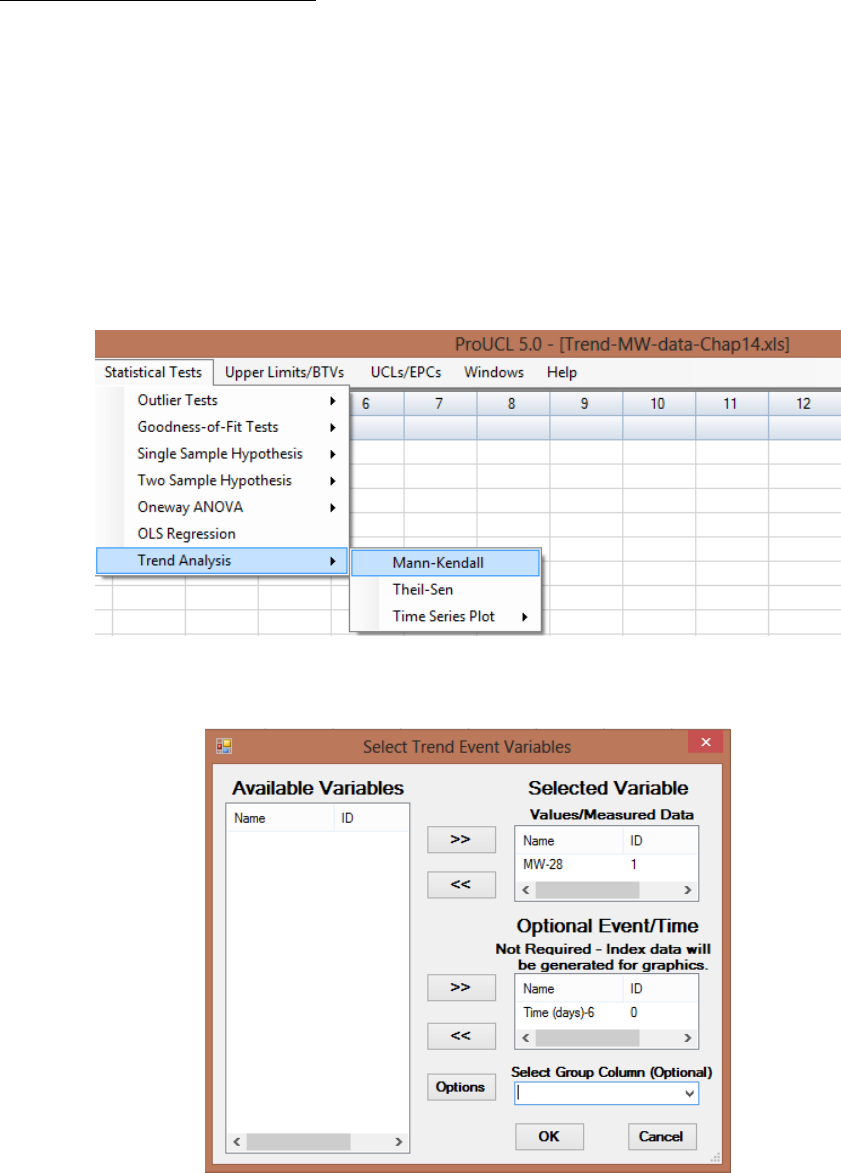

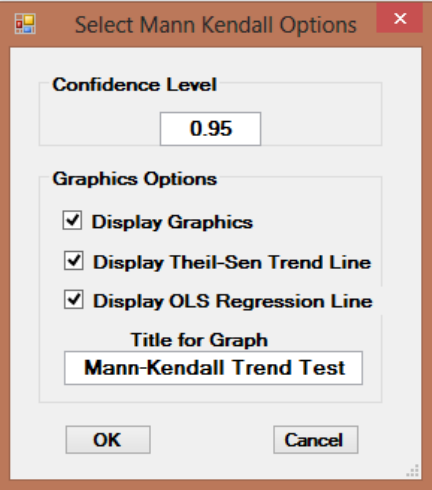

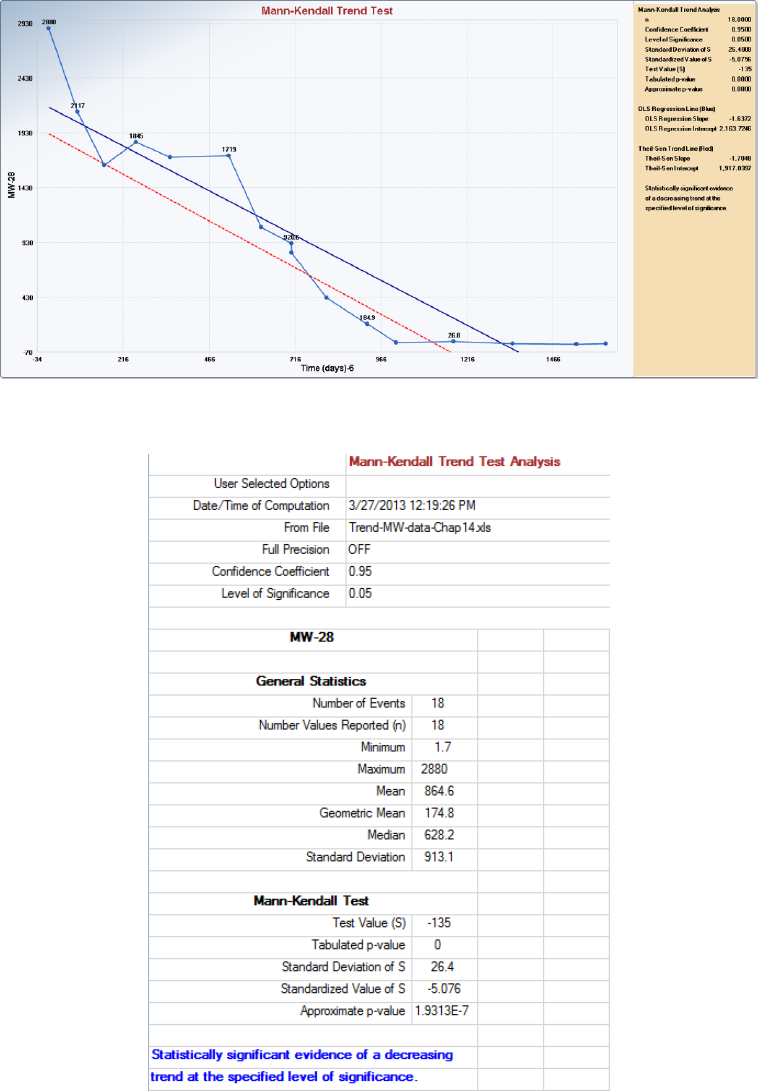

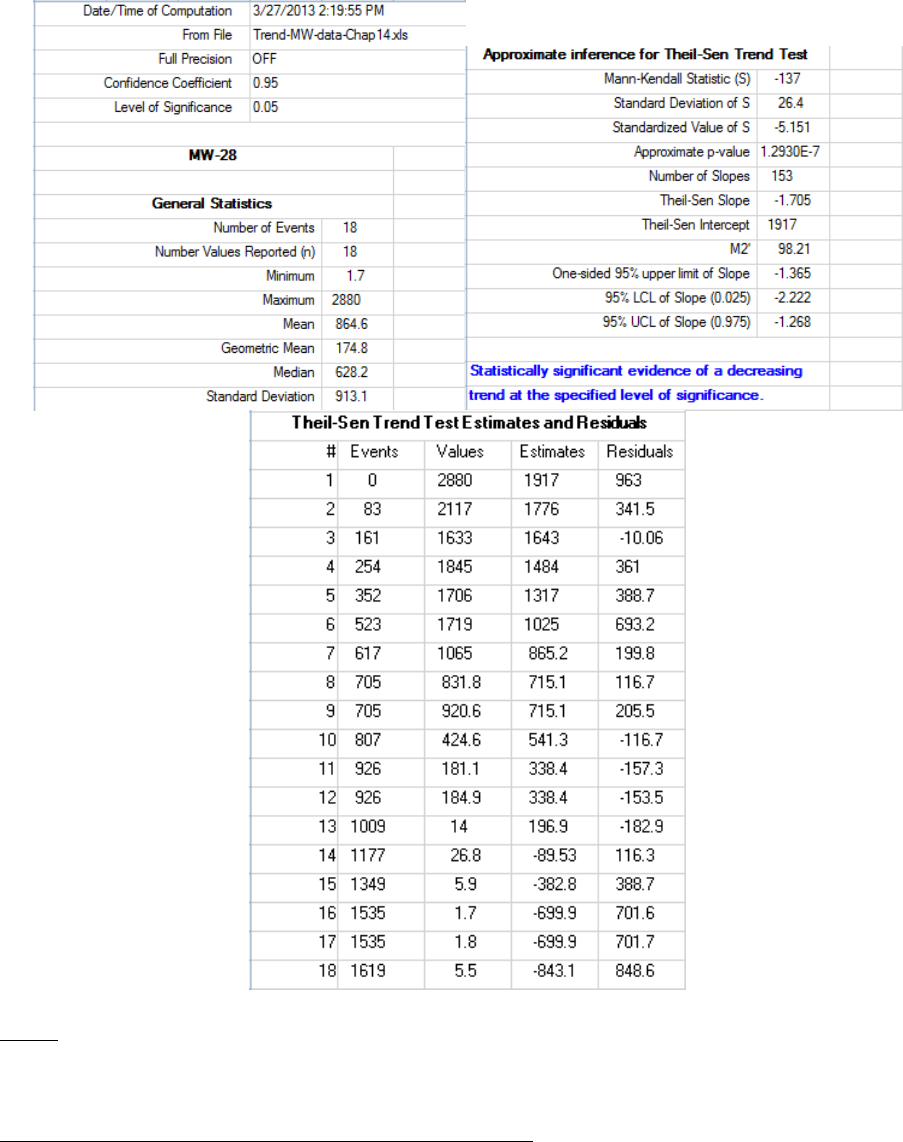

14.2 Mann-Kendall Test ......................................................................................................... 232

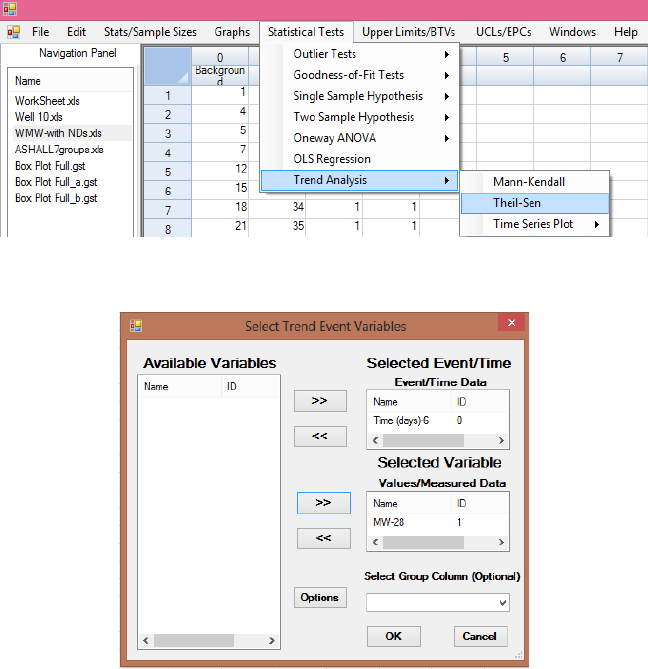

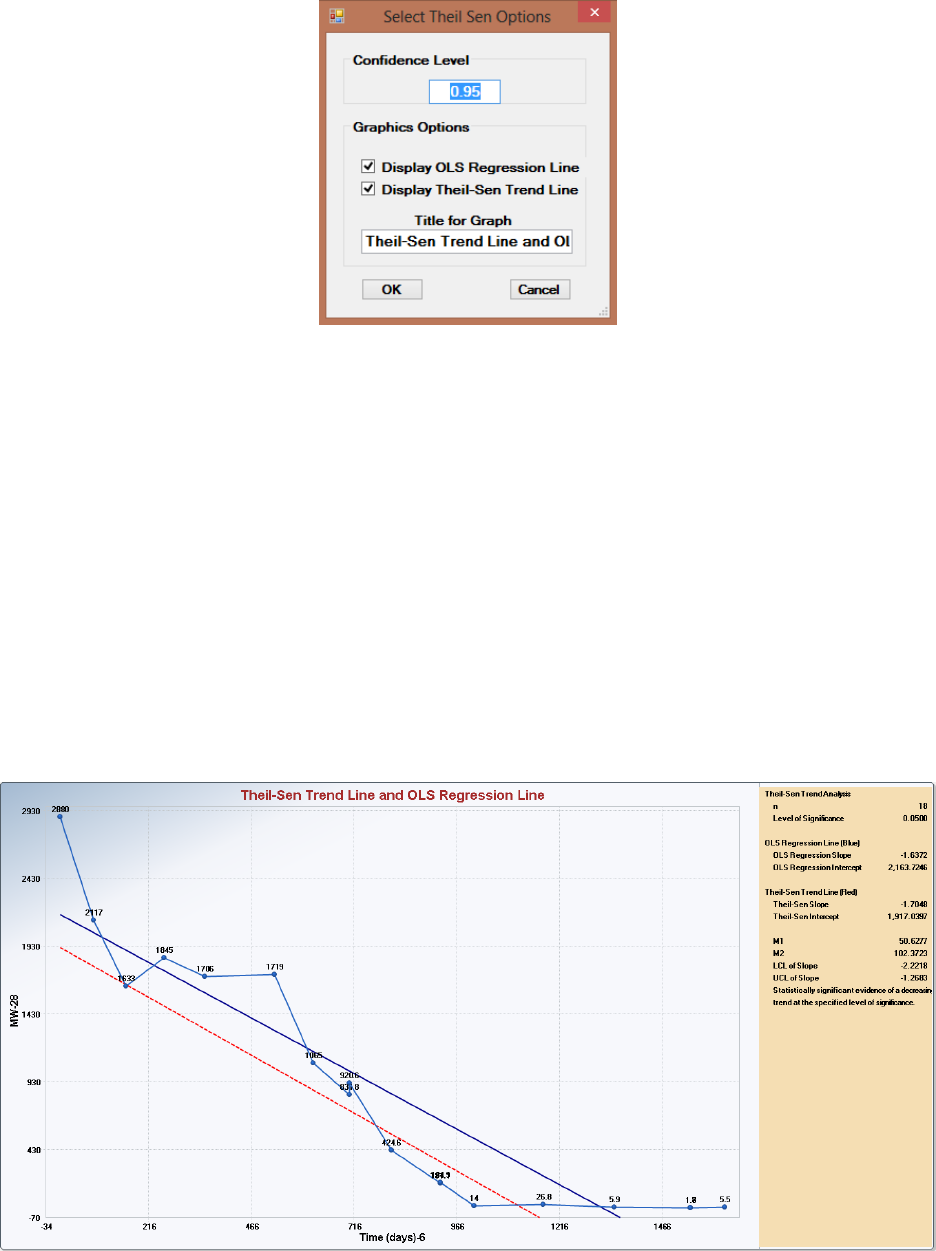

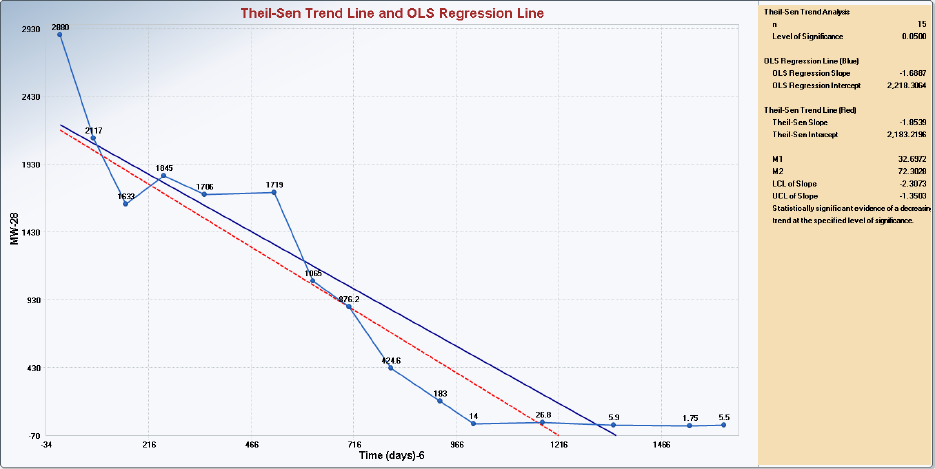

14.3 Theil – Sen Test .............................................................................................................. 235

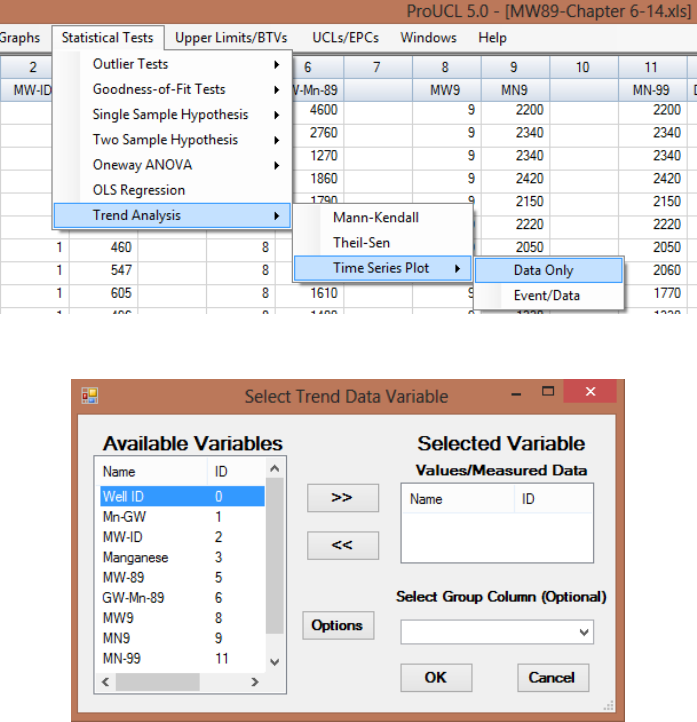

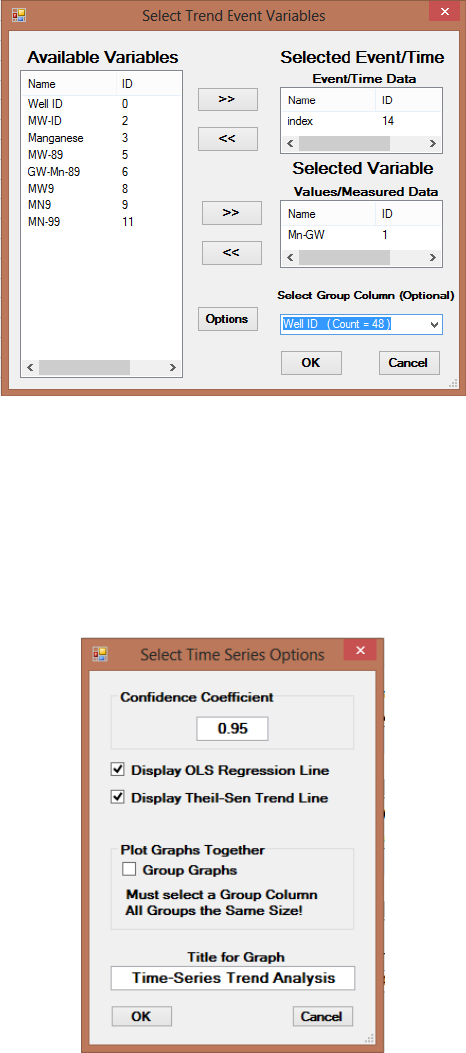

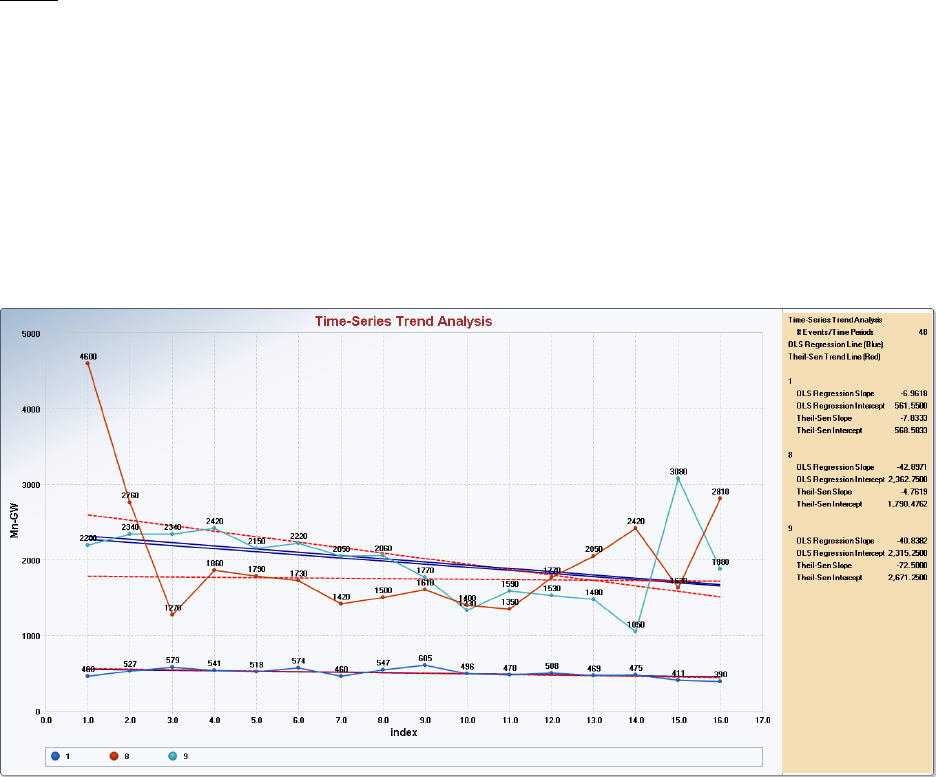

14.4 Time Series Plots ............................................................................................................ 238

Chapter 15 Background Incremental Sample Simulator (BISS) Simulating BISS Data

from a Large Discrete Background Data ................................................................. 244

Chapter 16 Windows ............................................................................................................ 246

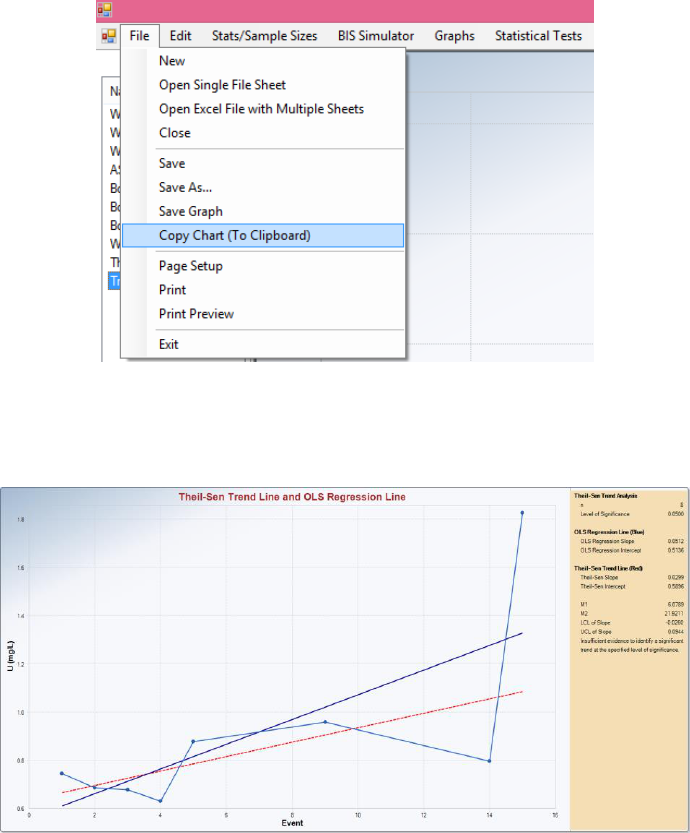

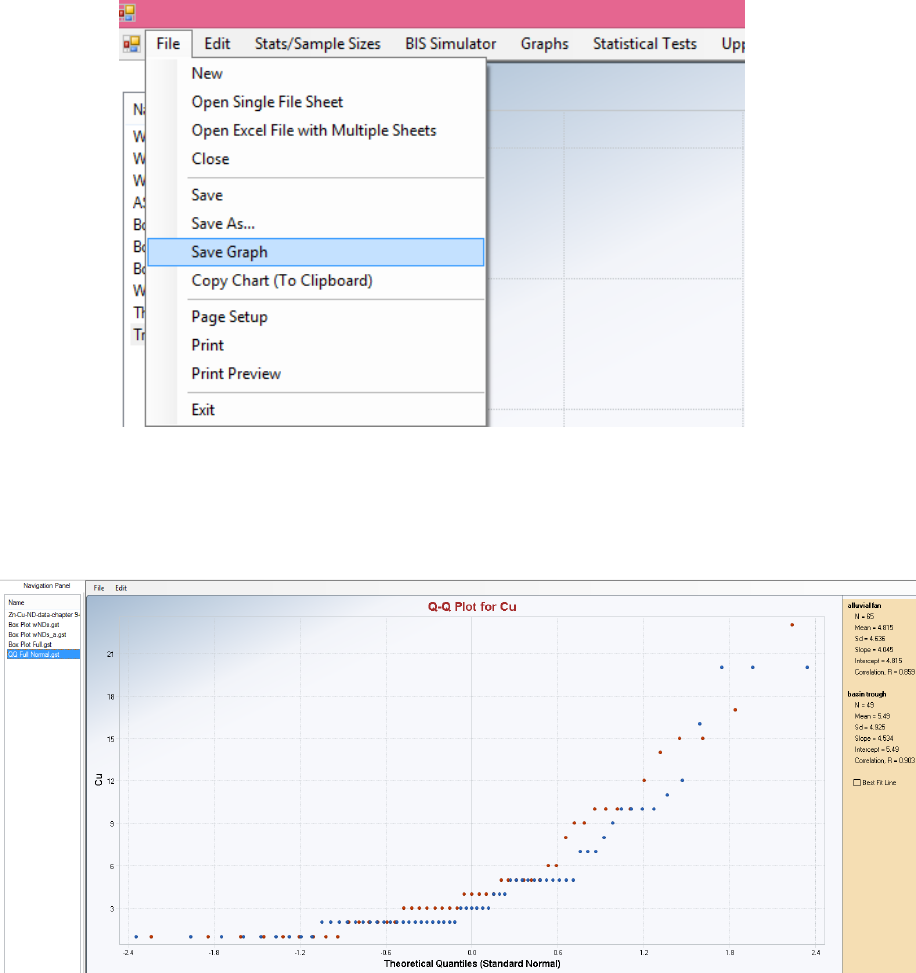

Chapter 17 Handling the Output Screens and Graphs ...................................................... 247

17.1 Copying and Saving Graphs ........................................................................................... 247

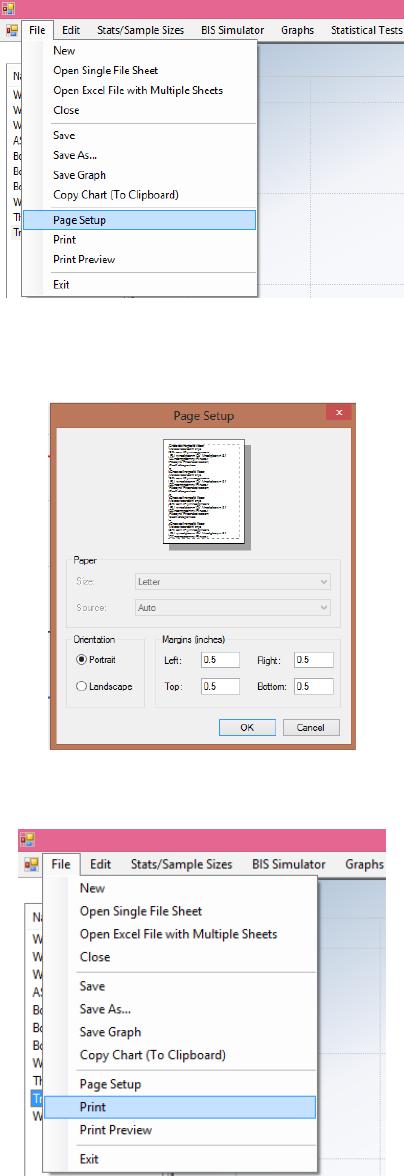

17.2 Printing Graphs ............................................................................................................... 248

17.3 Making Changes in Graphs using Tools and Properties ................................................. 250

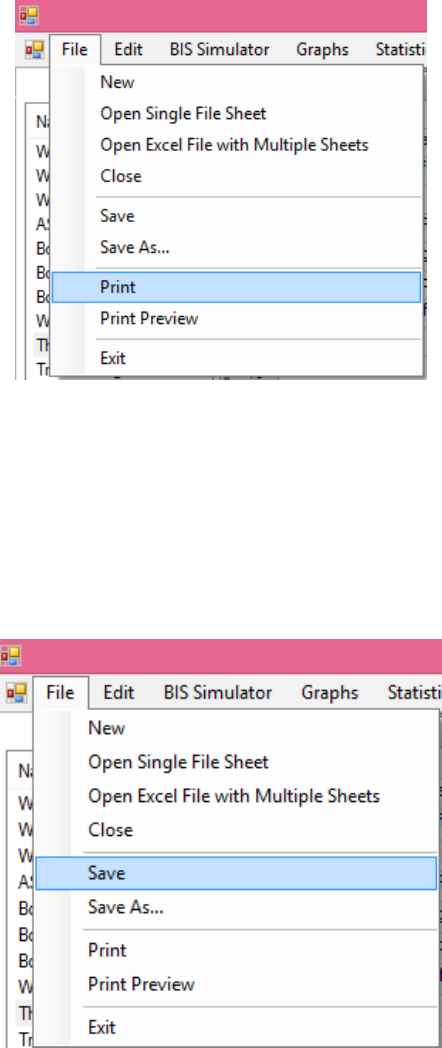

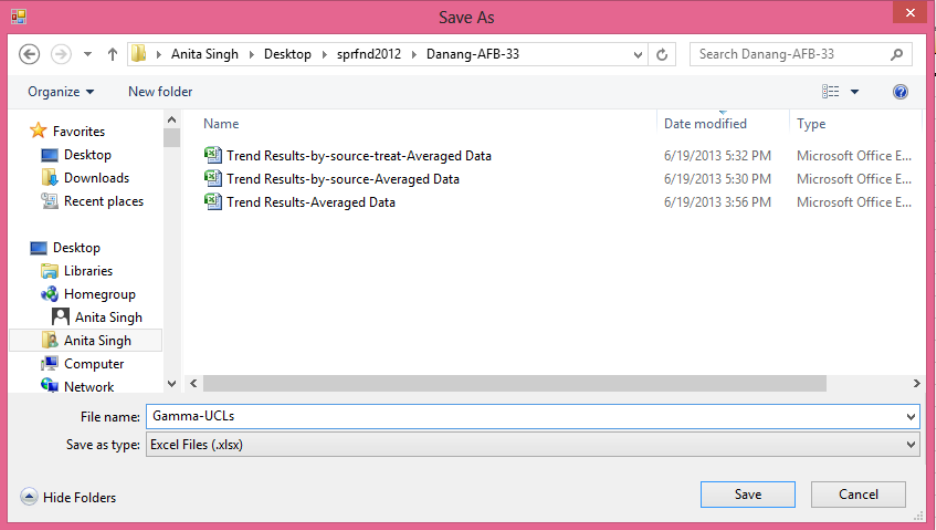

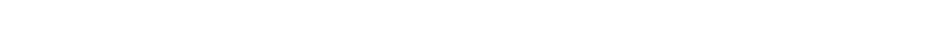

17.4 Printing Non-graphical Outputs ...................................................................................... 250

17.5 Saving Output Screens as Excel Files ............................................................................. 251

Chapter 18 Summary and Recommendations to Compute a 95% UCL for Full

Uncensored and Left-Censored Data Sets with NDs .............................................. 253

18.1 Computing UCL95s of the Mean Based Upon Uncensored Full Data Sets ................... 253

18.2 Computing UCLs Based Upon Left-Censored Data Sets with Nondetects .................... 254

REFERENCES ....................................................................................................................... 256

28

29

INTRODUCTION

OVERVIEW OF ProUCL VERSION 5.1 SOFTWARE

The main objective of the ProUCL software funded by the U.S.EPA is to compute rigorous decision

statistics to help the decision makers in making reliable decisions which are cost-effective, and protective

of human health and the environment. The development of ProUCL software is based upon the

philosophy that rigorous statistical methods can be used to compute representative estimates of population

parameters (e.g., site mean, background percentiles) and accurate decision making statistics (including the

upper confidence limit [UCL] of the mean, upper tolerance limit [UTL], and upper prediction limit

[UPL]) which will assist decision makers and project teams in making sound decisions. The use and

applicability of a statistical method (e.g., student's t-UCL, Central Limit Theorem (CLT)-UCL, adjusted

gamma-UCL, Chebyshev UCL, bootstrap-t UCL) depend upon data size, data variability, data skewness,

and data distribution. ProUCL computes decision statistics using several parametric and nonparametric

methods covering a wide-range of data variability, skewness, and sample size. A couple of text book

methods described in most of the statistical text books (e.g., Hogg and Craig, 1995) based upon the

Student's t-statistic and the CLT alone cannot address all scenarios and situations commonly occurring in

environmental studies. It is incorrect to assume that Student's t-statistic and/or CLT based UCLs of mean

will provide the desired coverage (e.g., 0.95) to the population mean irrespective of the skewness of the

data set/population under consideration. These issues have been discussed in detail in Chapters 2 and 4 of

the accompanying ProUCL 5.1 Technical Guide. Several examples are provided in the Technical Guide

which elaborate on these issues.

The use of a parametric lognormal distribution on a lognormally distributed data set tends to yield

unstable impractically large UCL values, especially when the standard deviation of the log-transformed

data is greater than 1.0 and the data set is of small size such as less than 30-50 (Hardin and Gilbert 1993;

Singh, Singh, and Engelhardt 1997). Many environmental data sets can be modeled by a gamma as well

as a lognormal distribution. Generally, the use of a gamma distribution on gamma distributed data sets

yields UCL values of practical merit (Singh, Singh, and Iaci 2002). Therefore, the use of gamma

distribution based decision statistics such as UCLs, UPL, and UTLs cannot be dismissed just because it is

easier to use a lognormal model to compute these upper limits. The two distributions do not behave in a

similar manner. The advantages of computing the gamma distribution-based decision statistics are

discussed in Chapters 2 through 5 of the ProUCL Technical Guide.

Since many environmental decisions are made based upon a 95% UCL of the population mean, it is

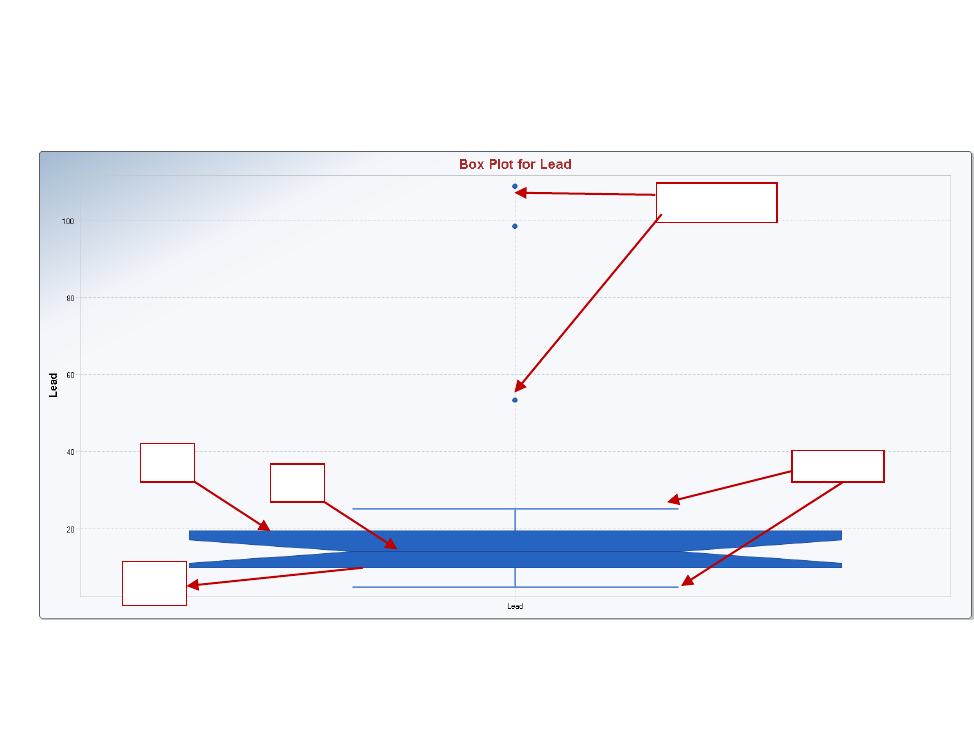

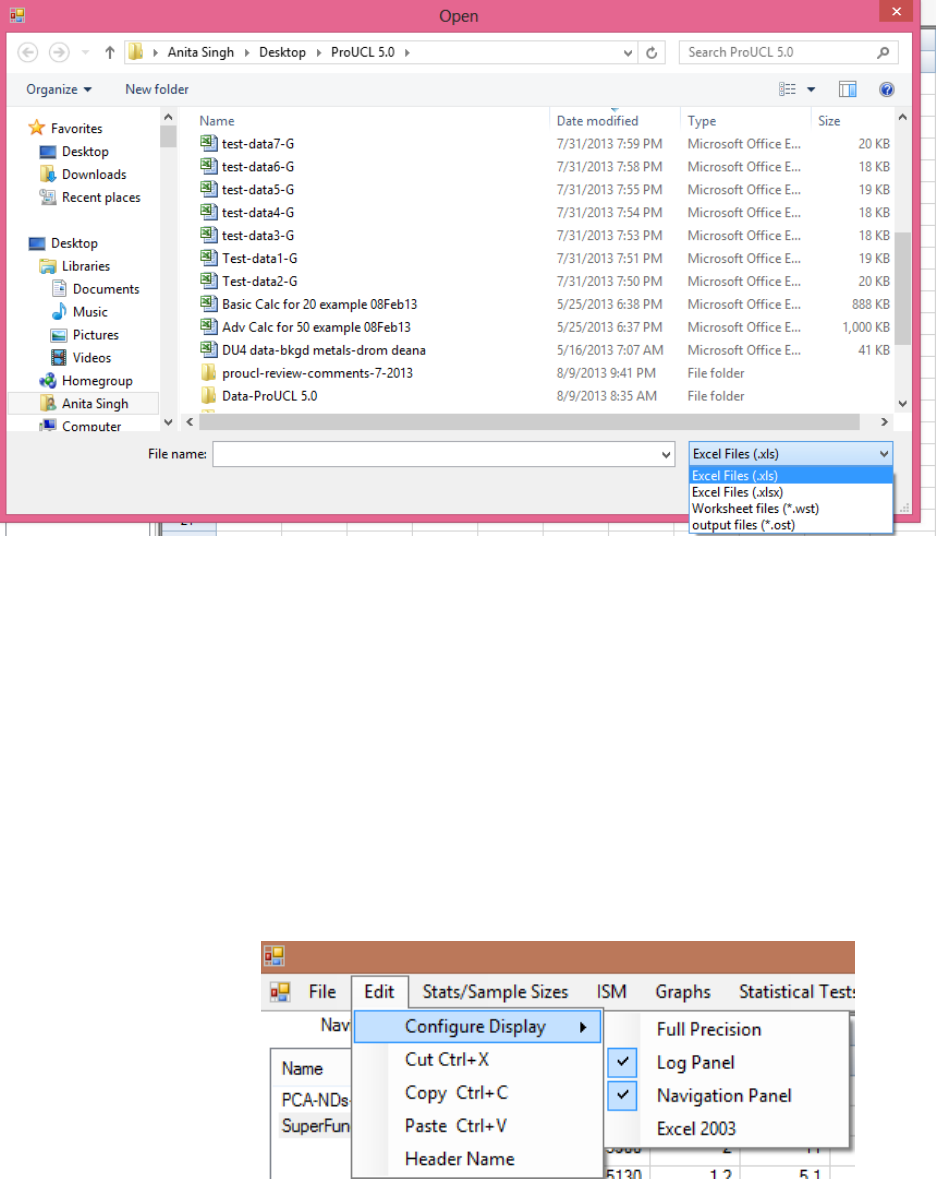

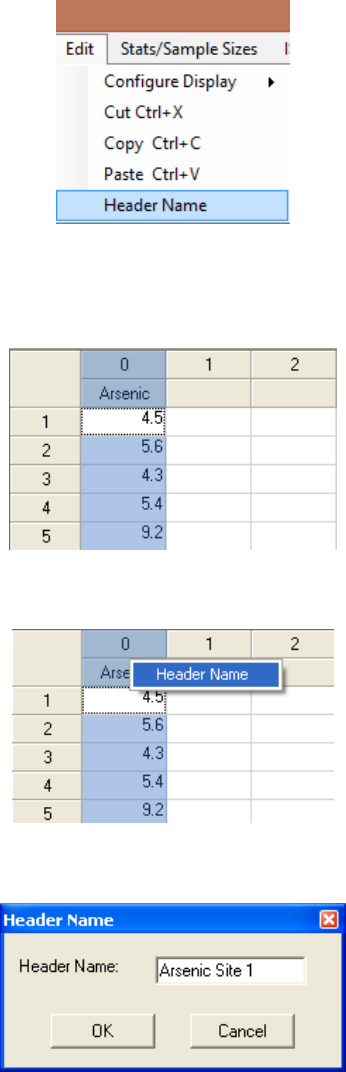

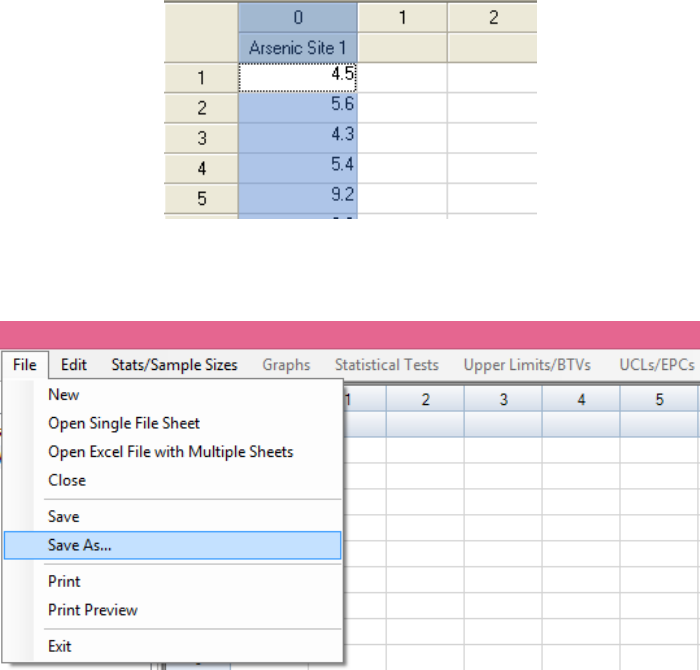

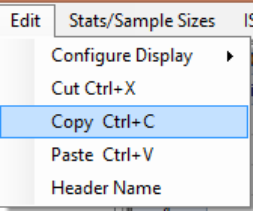

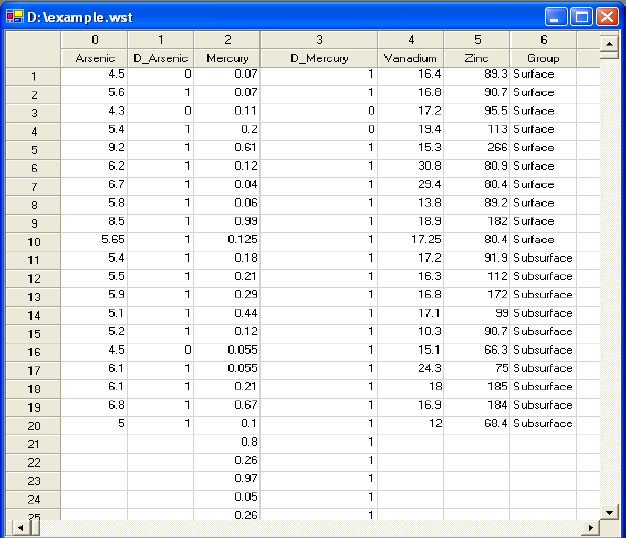

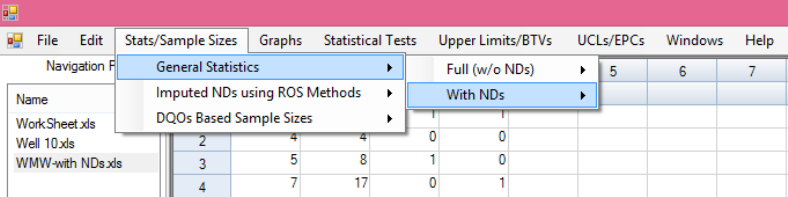

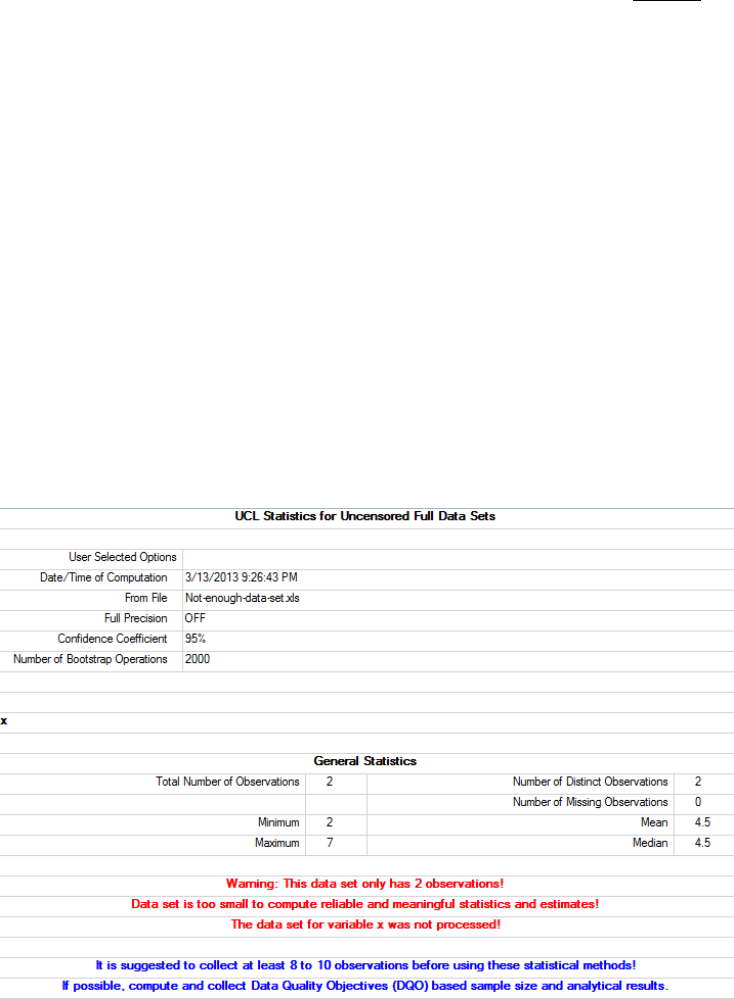

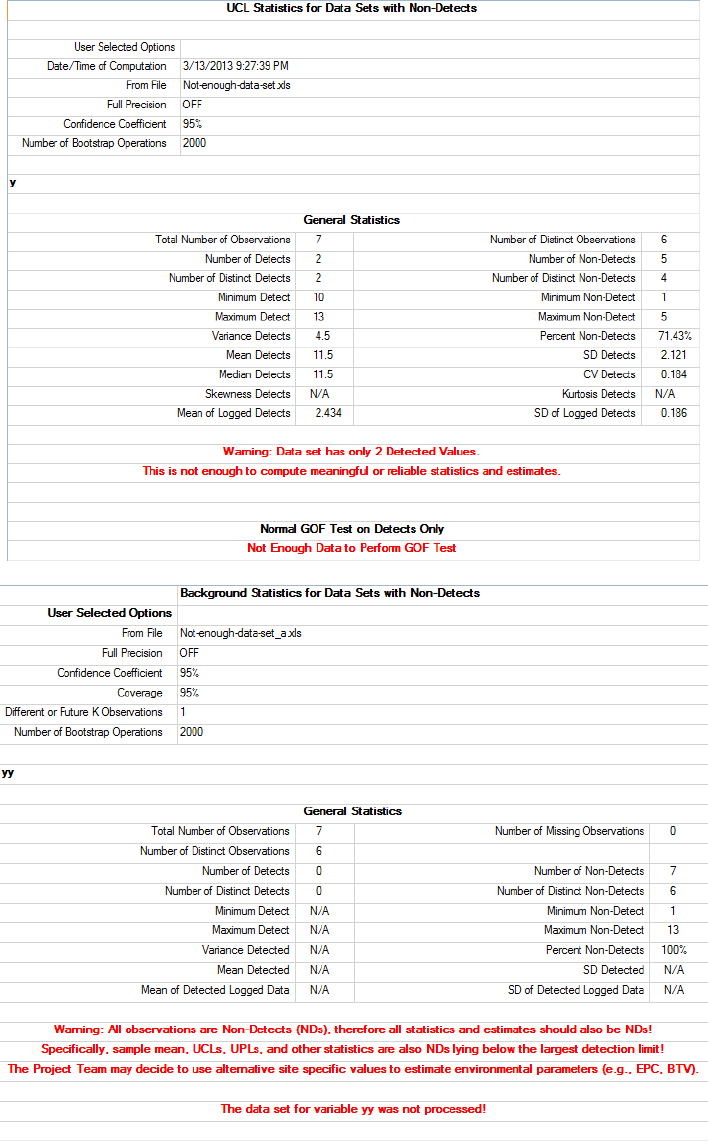

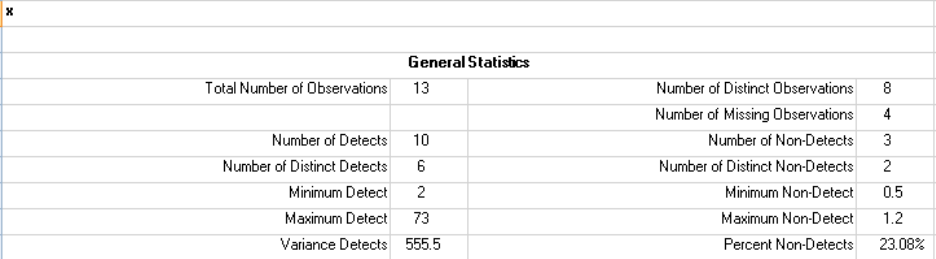

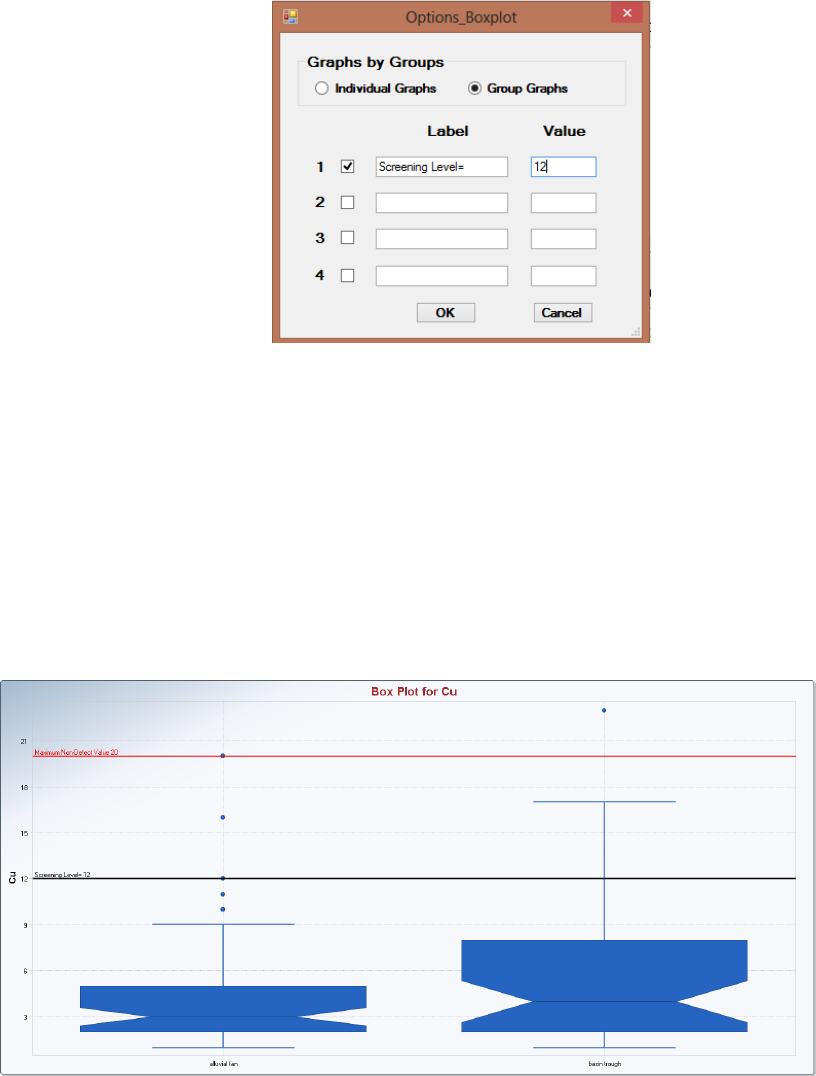

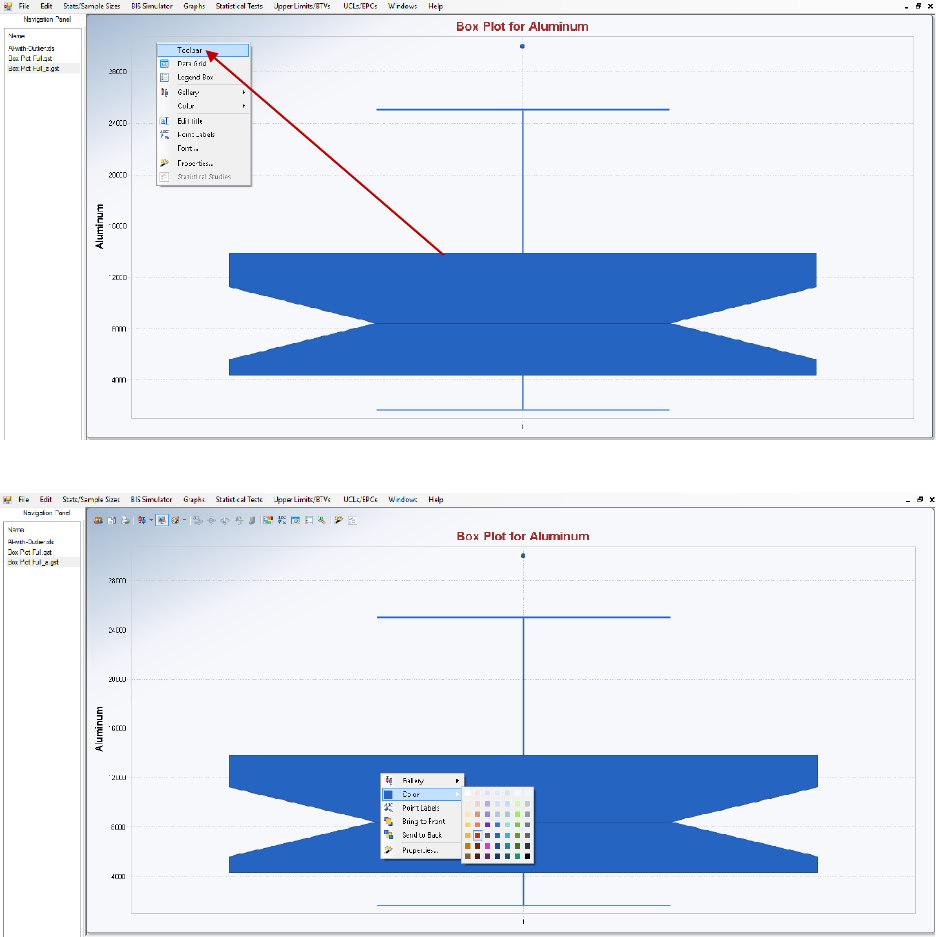

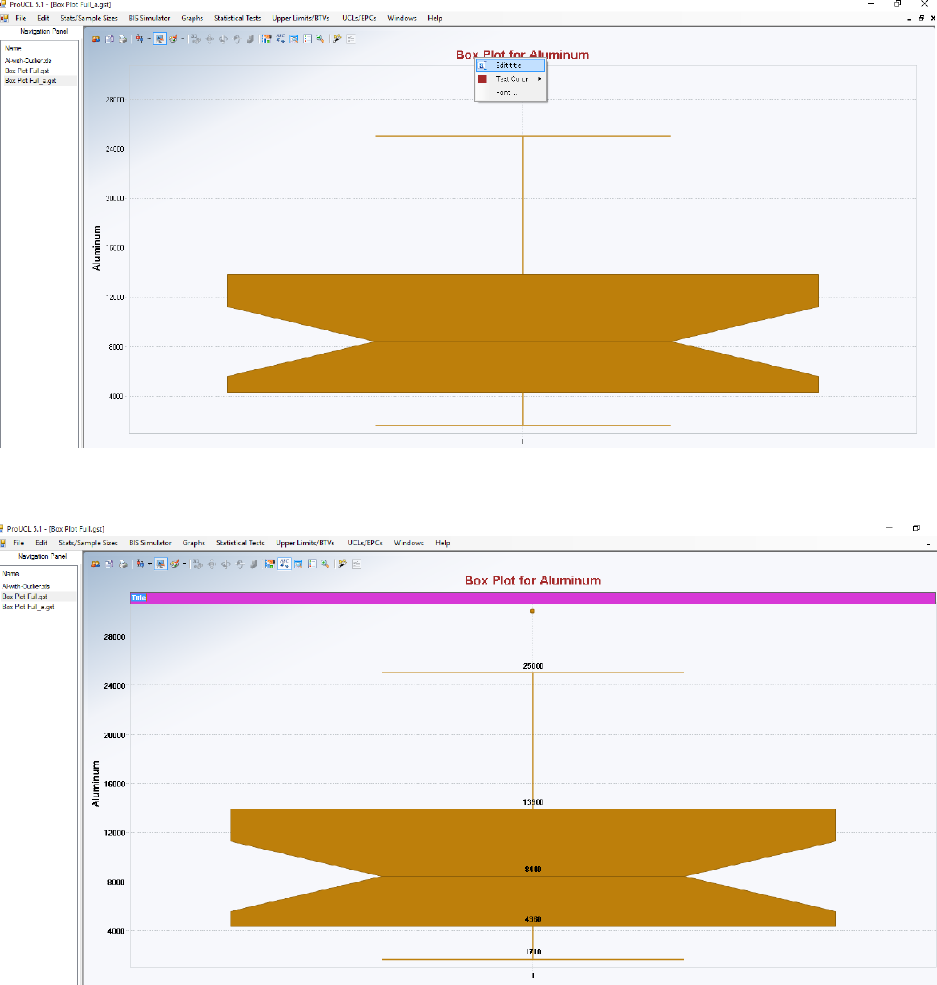

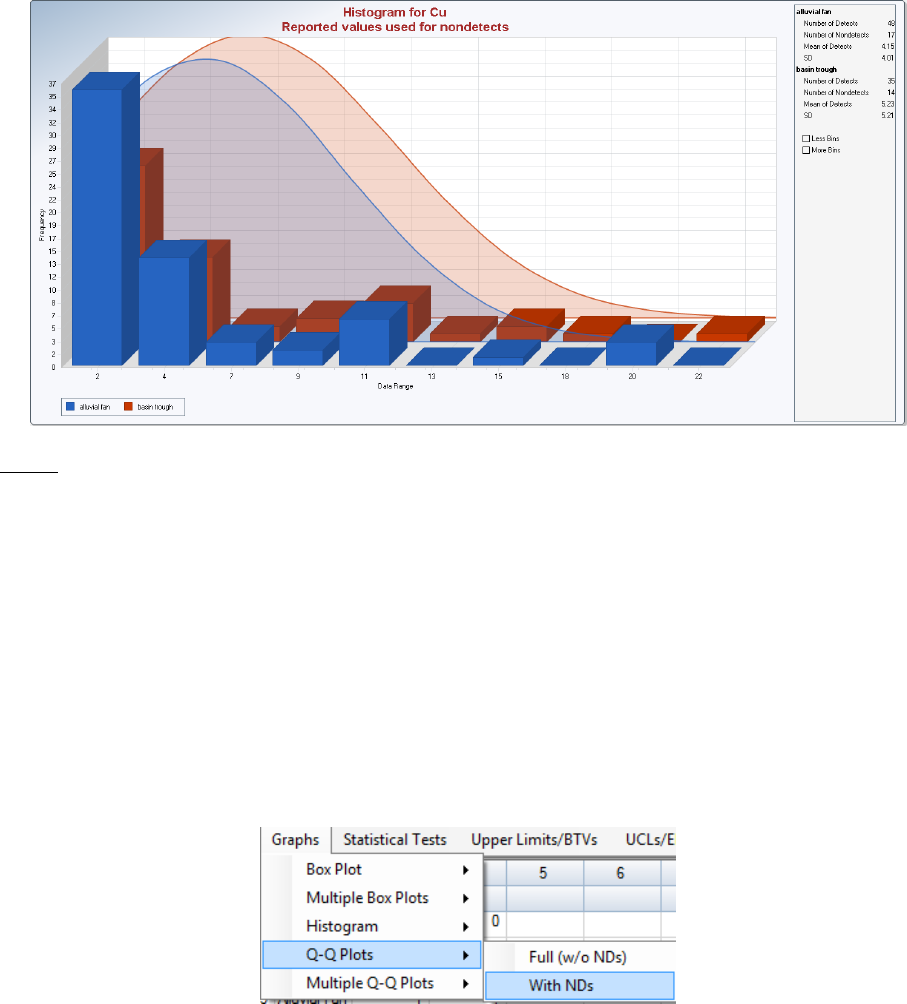

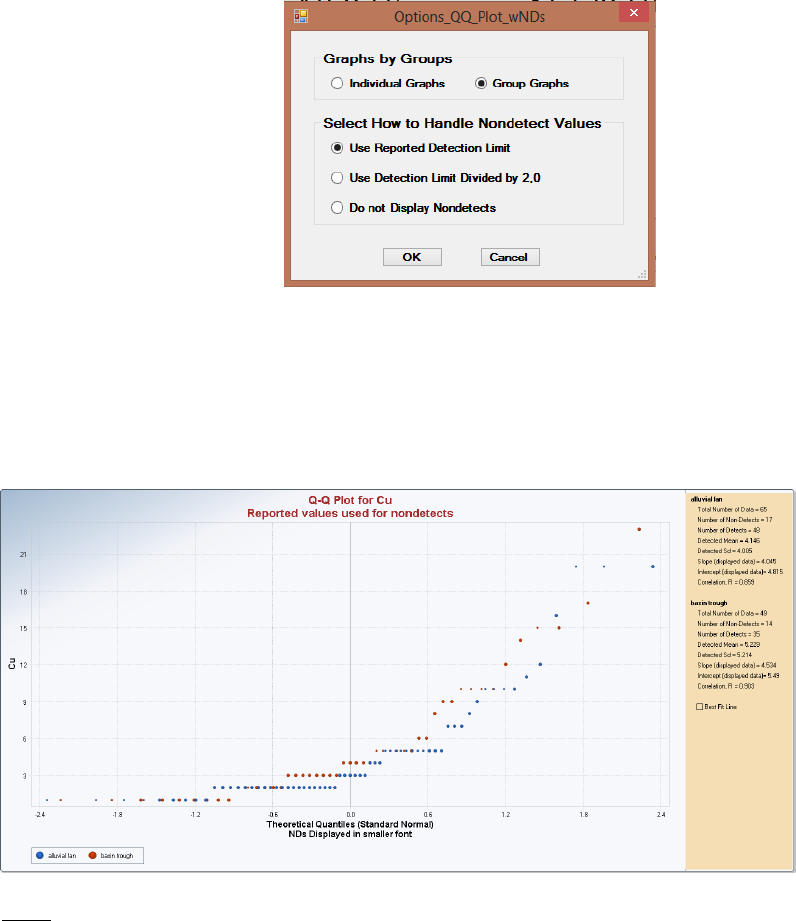

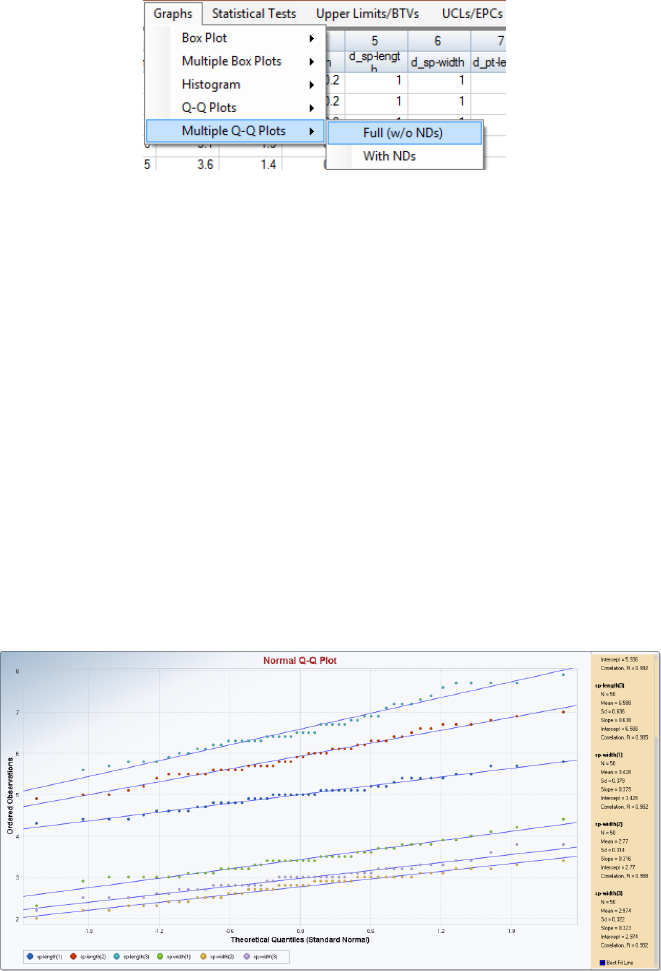

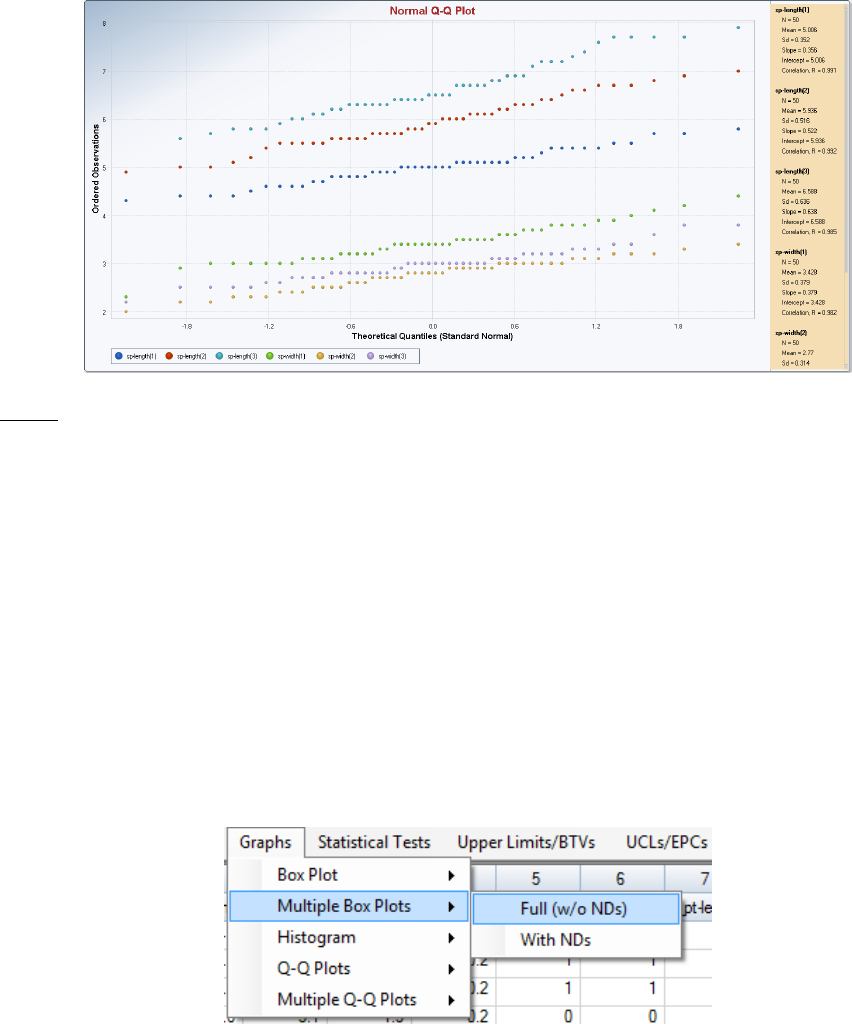

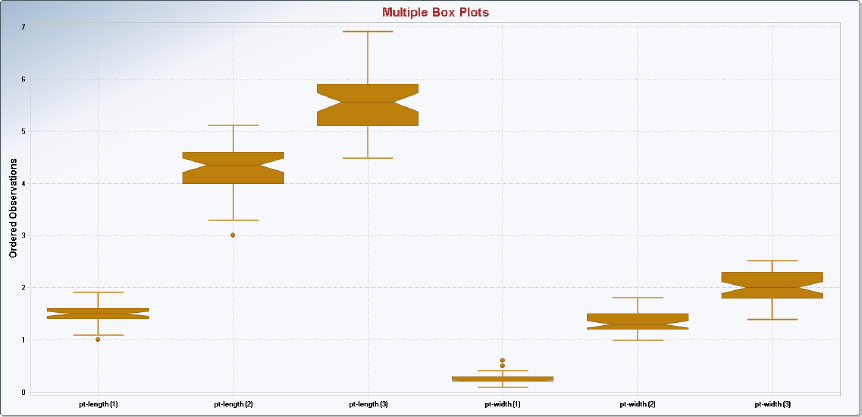

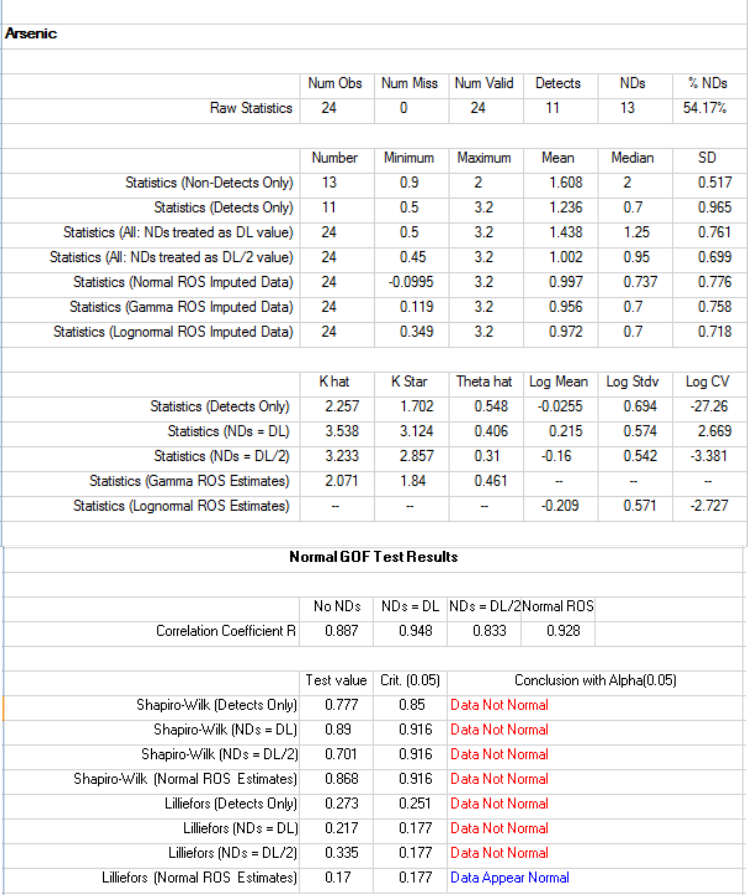

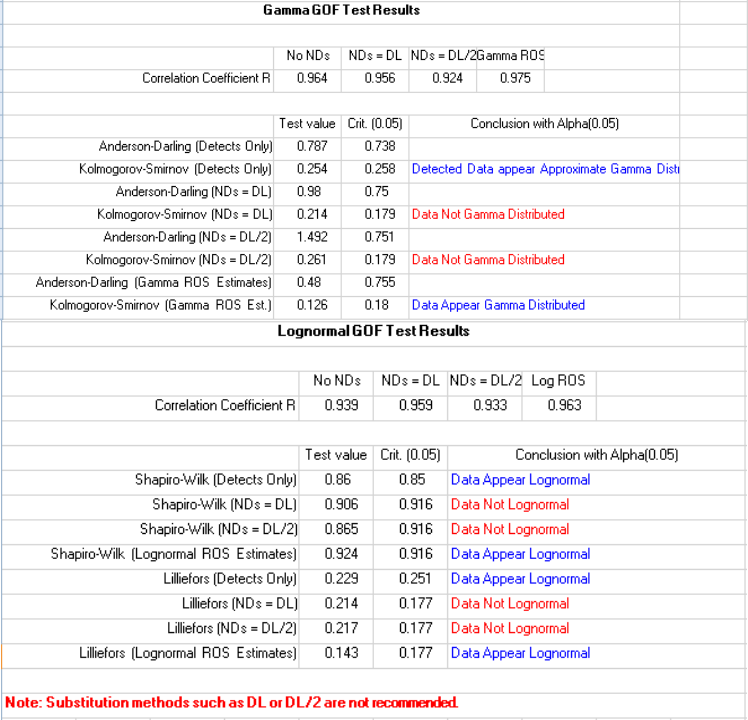

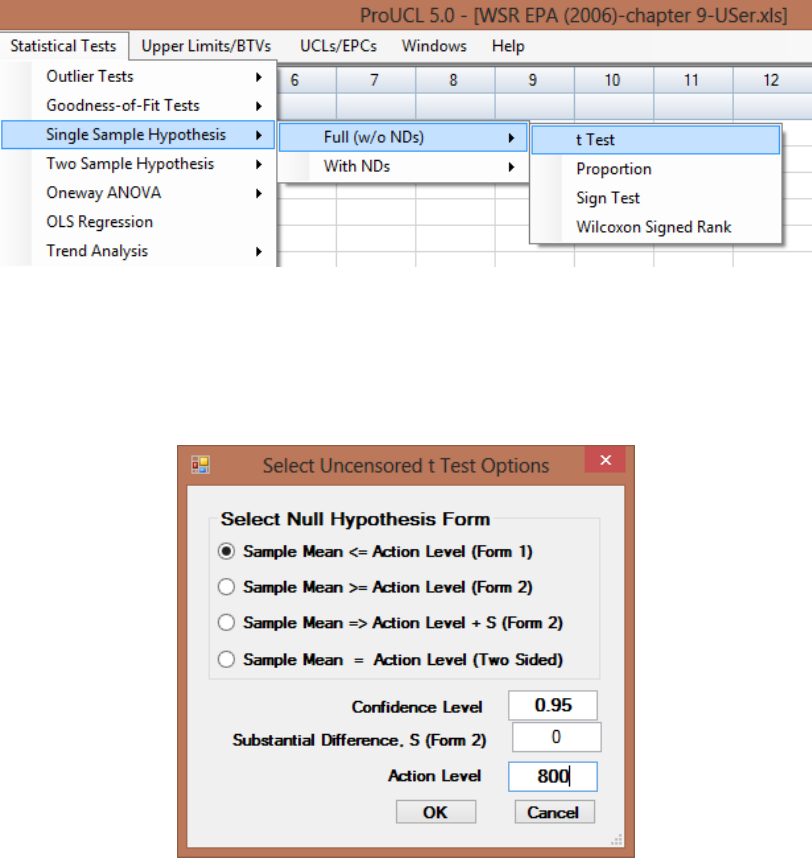

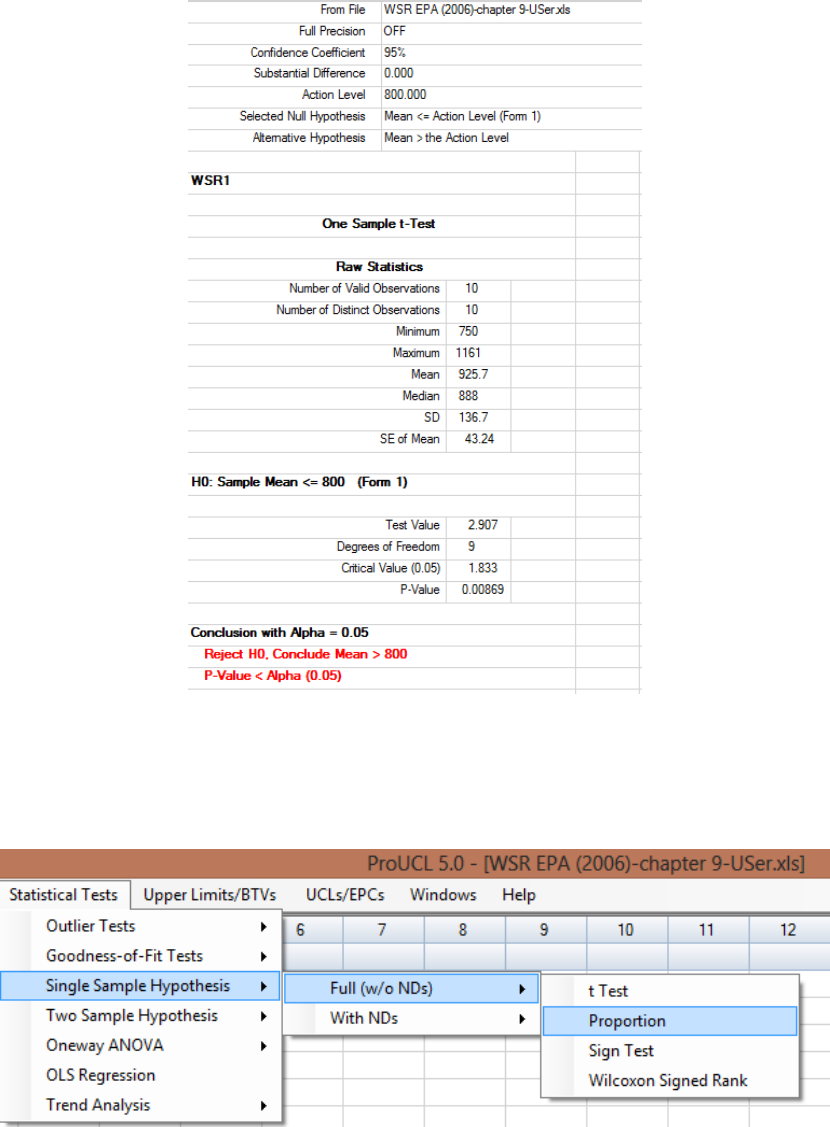

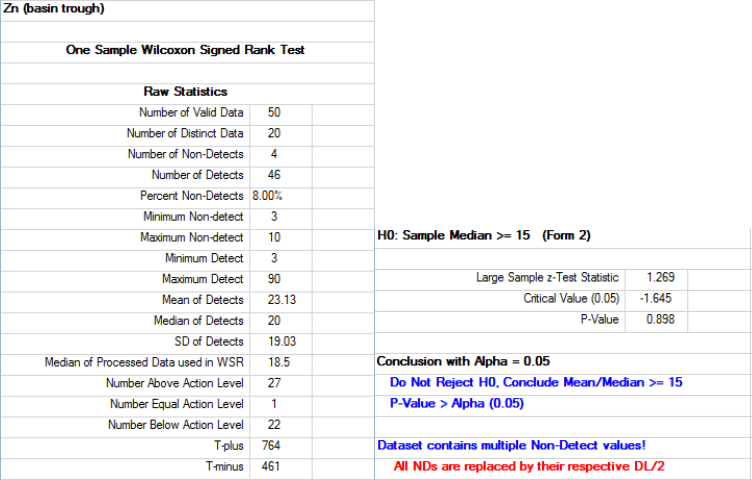

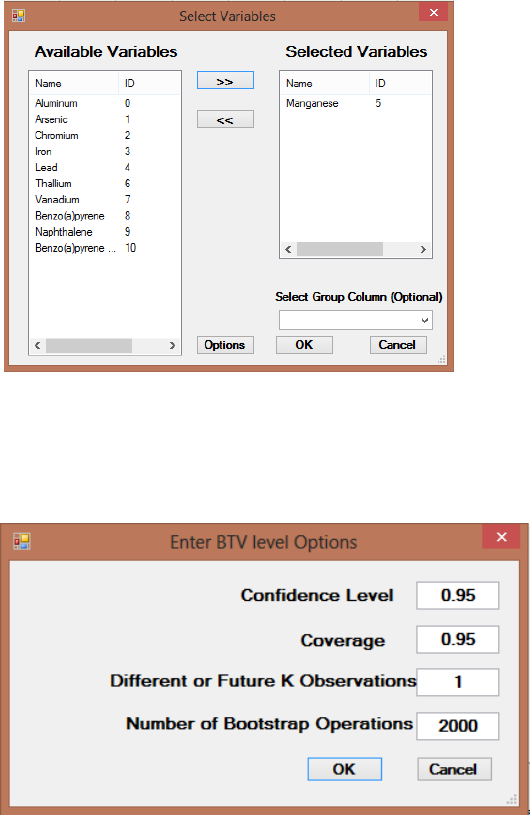

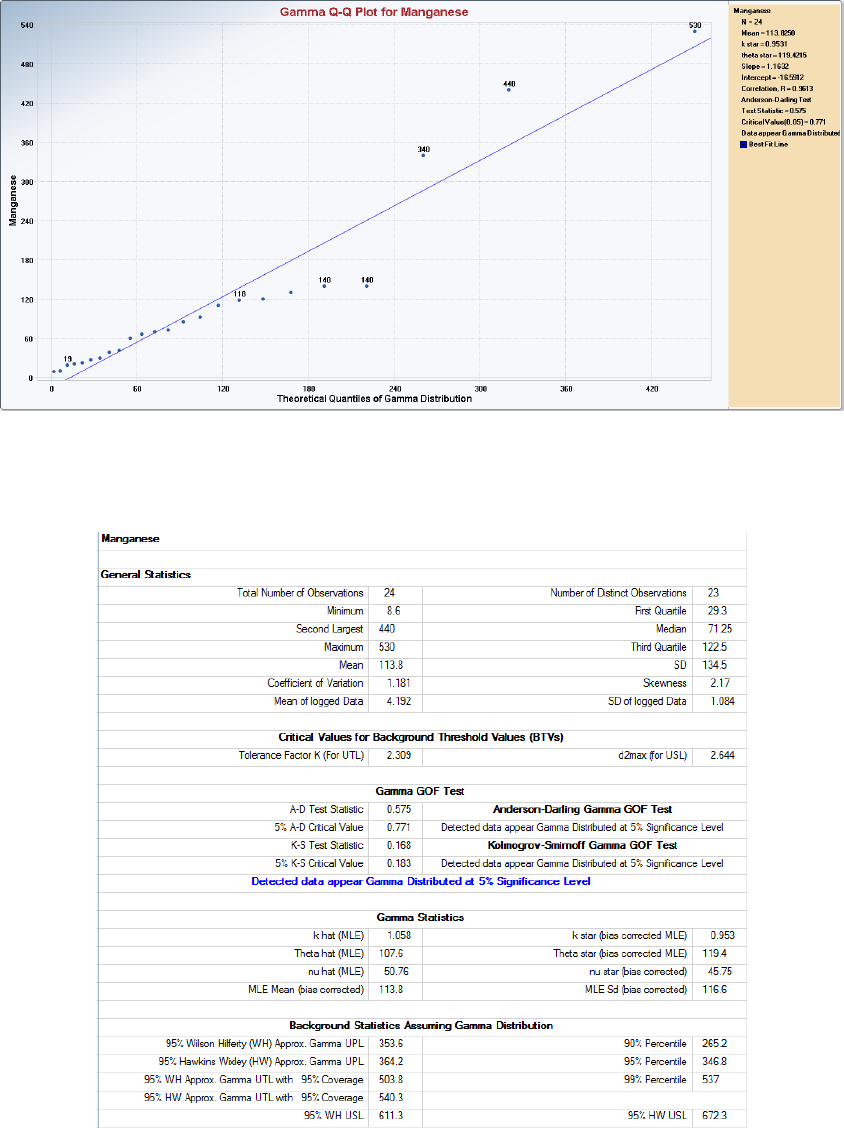

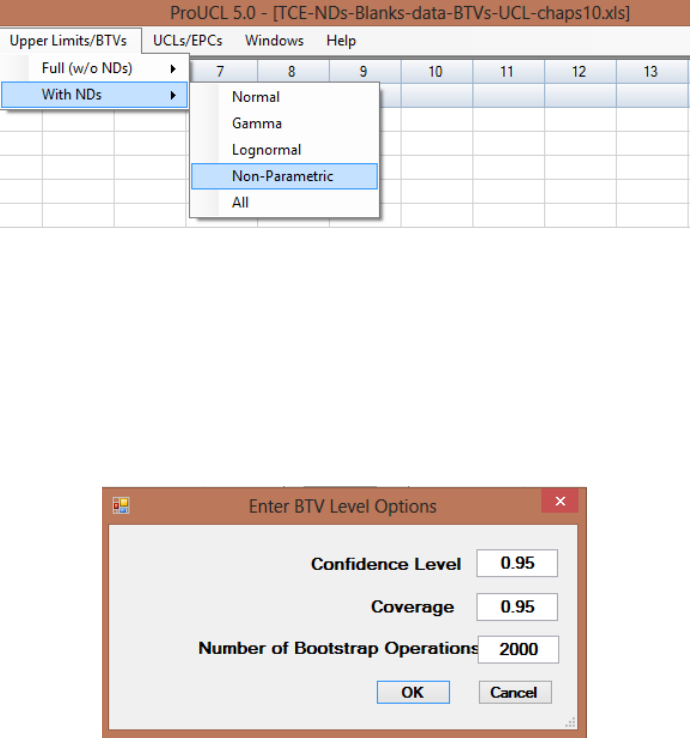

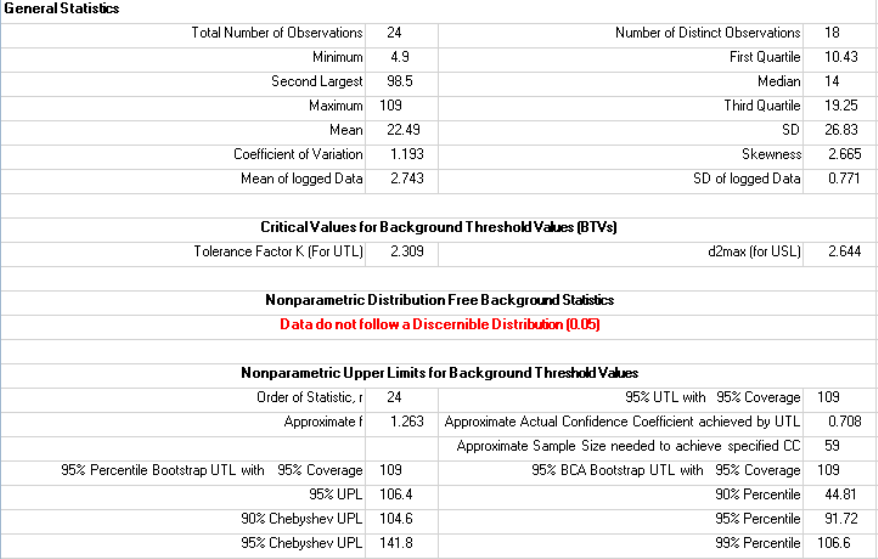

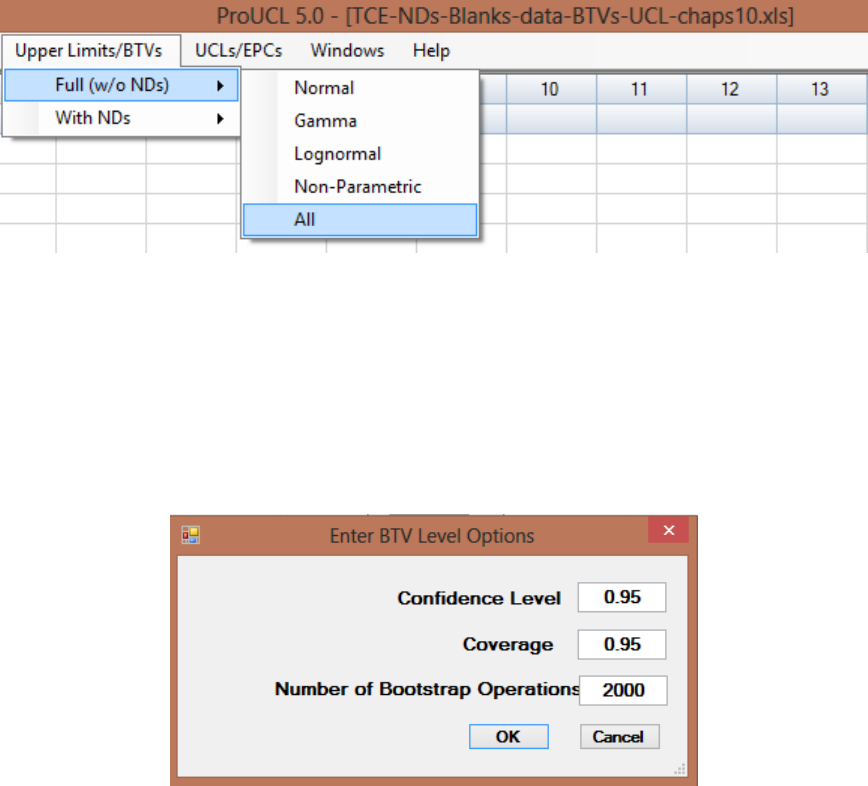

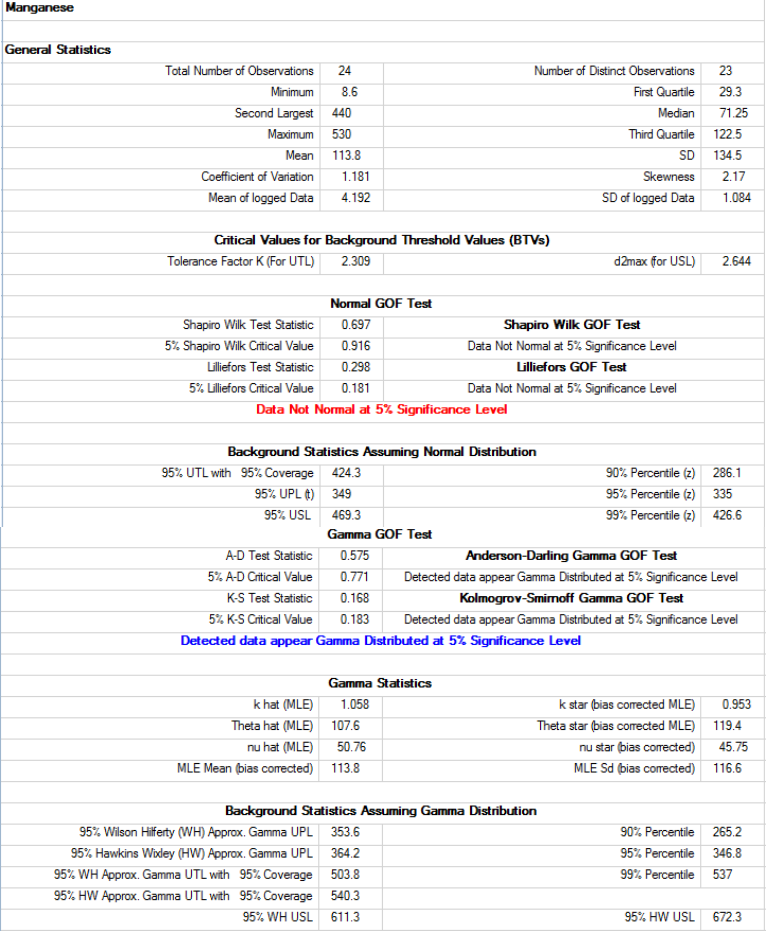

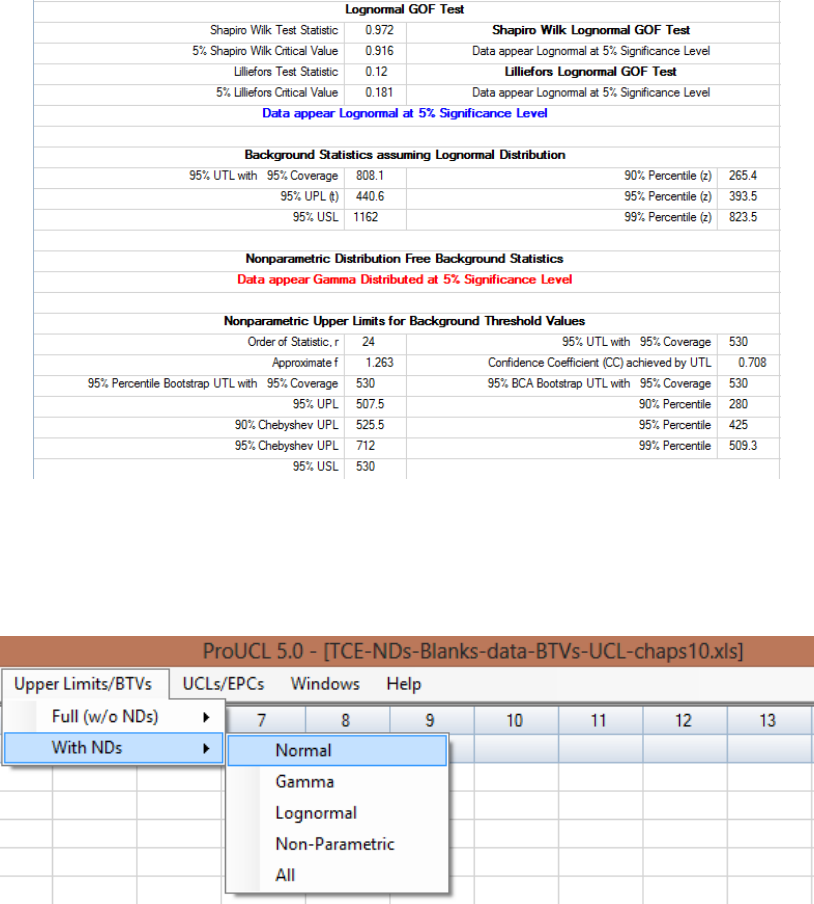

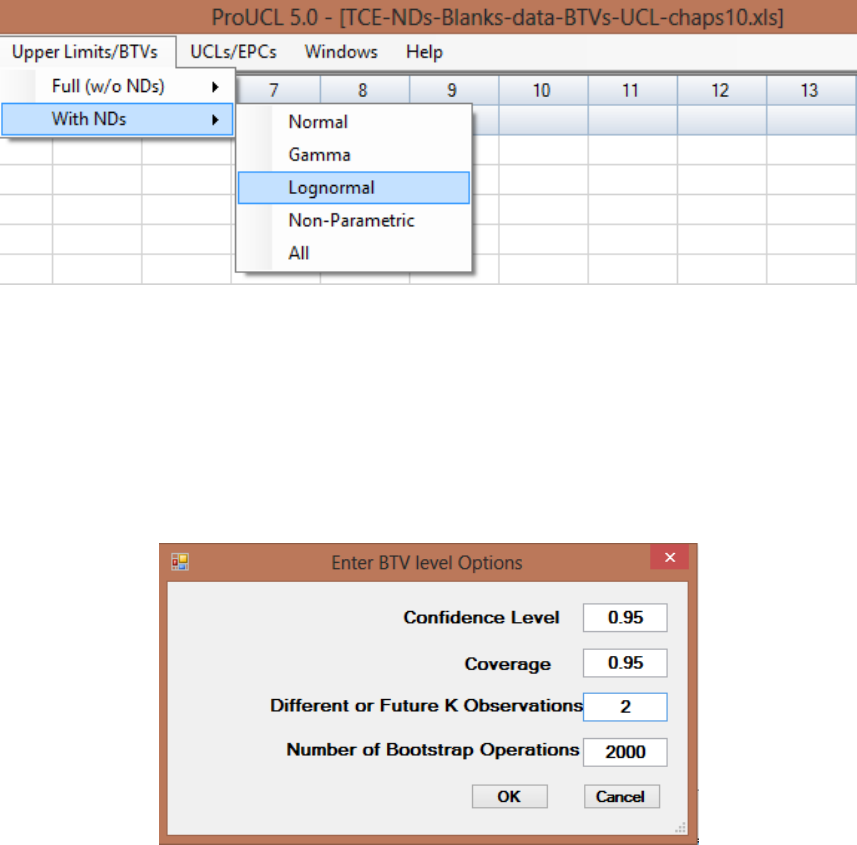

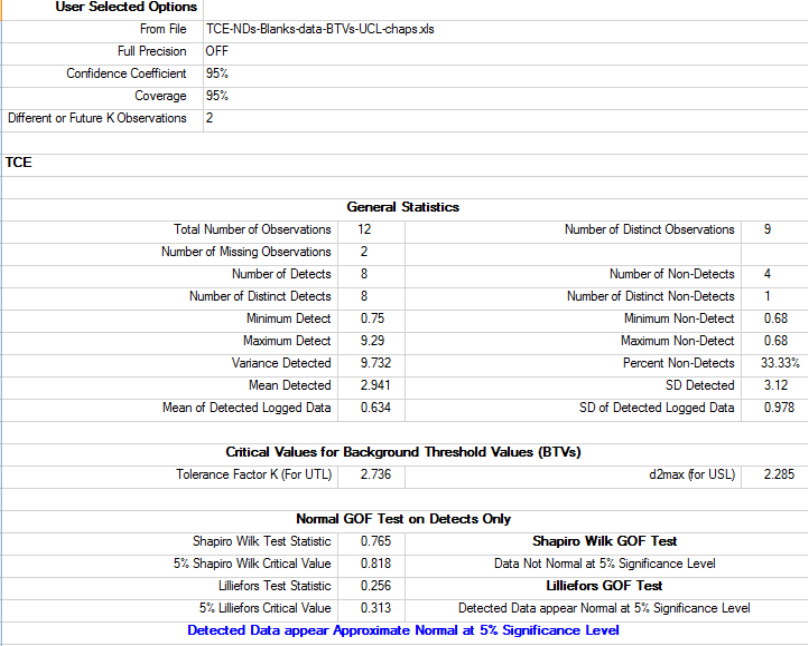

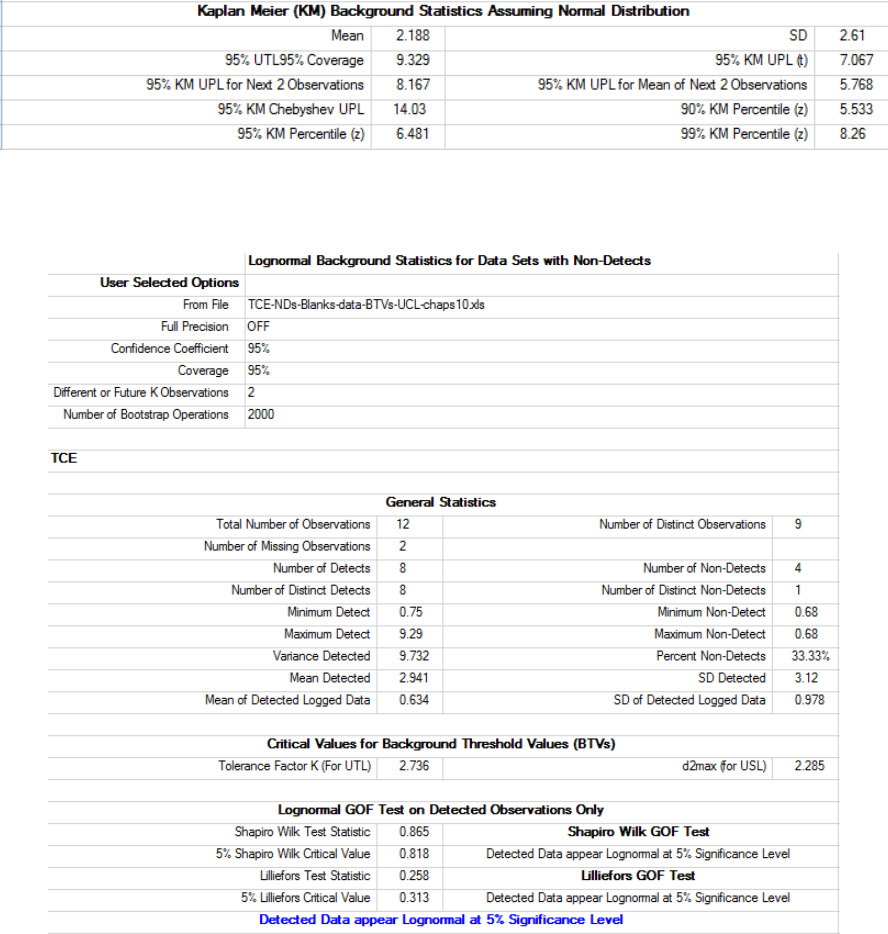

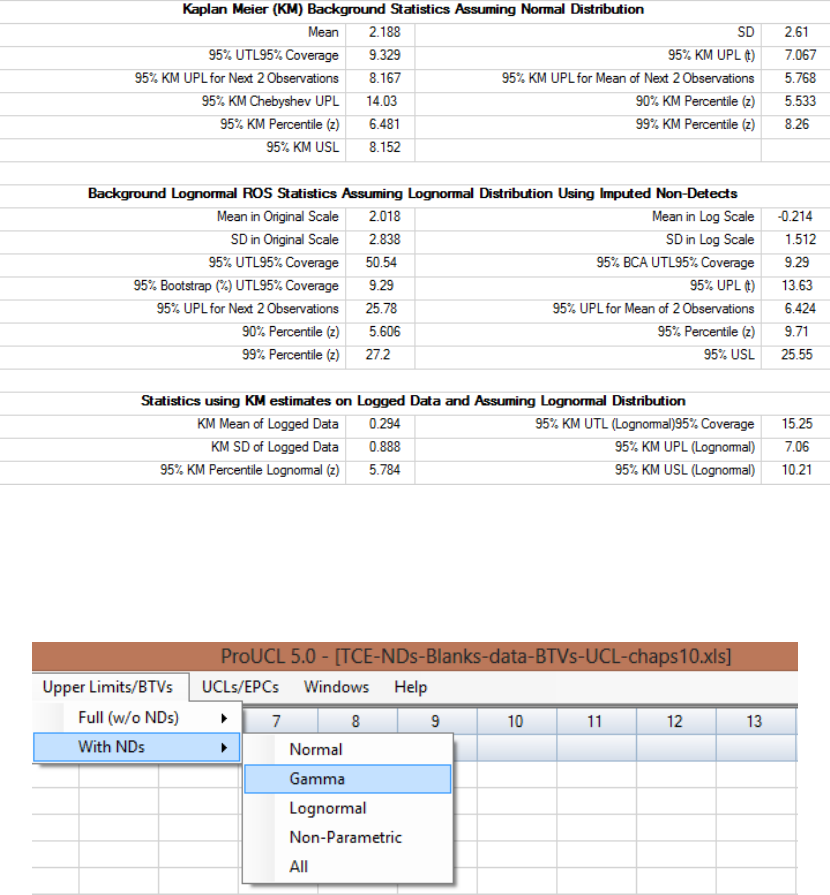

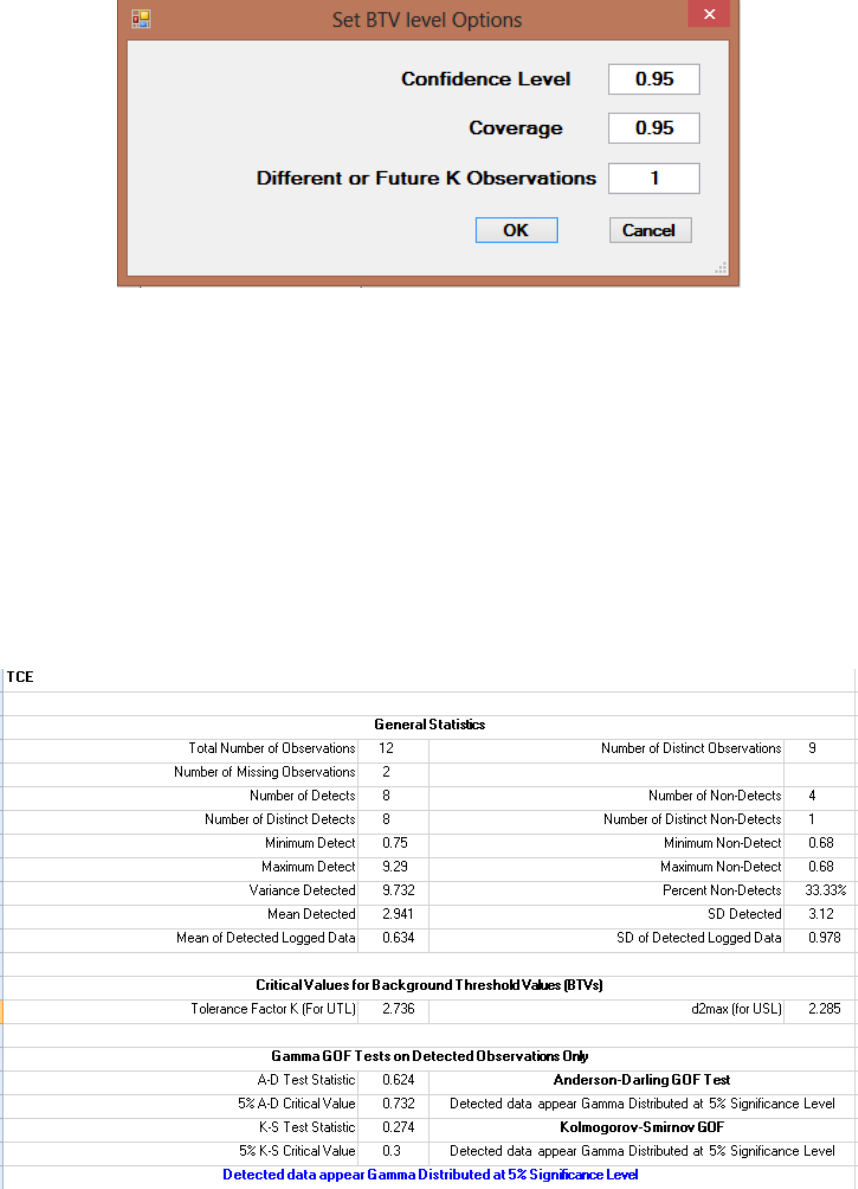

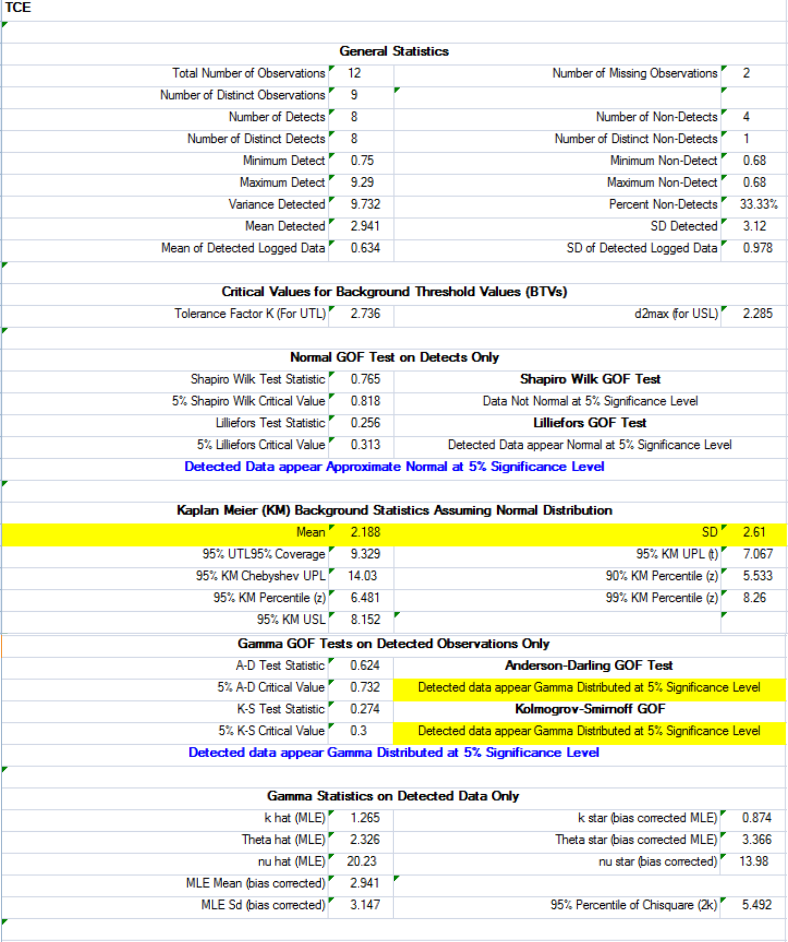

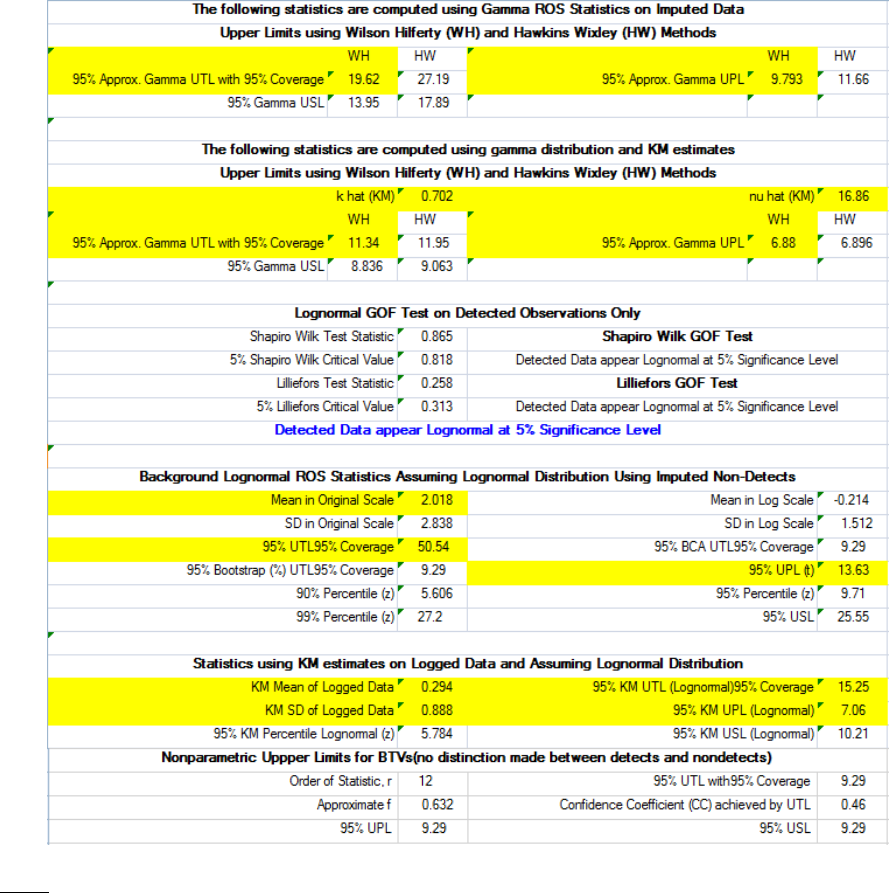

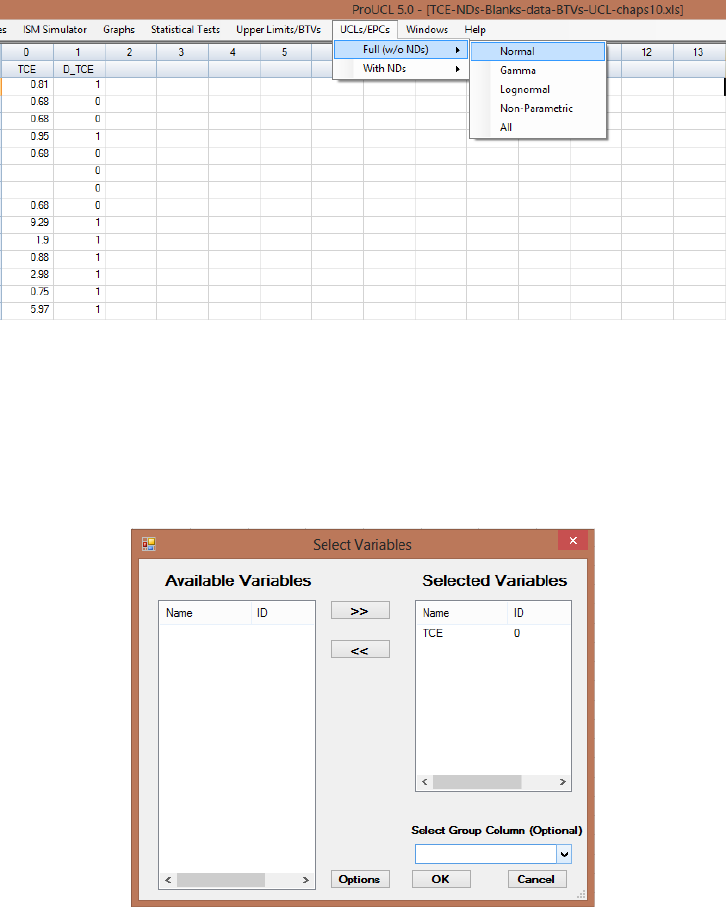

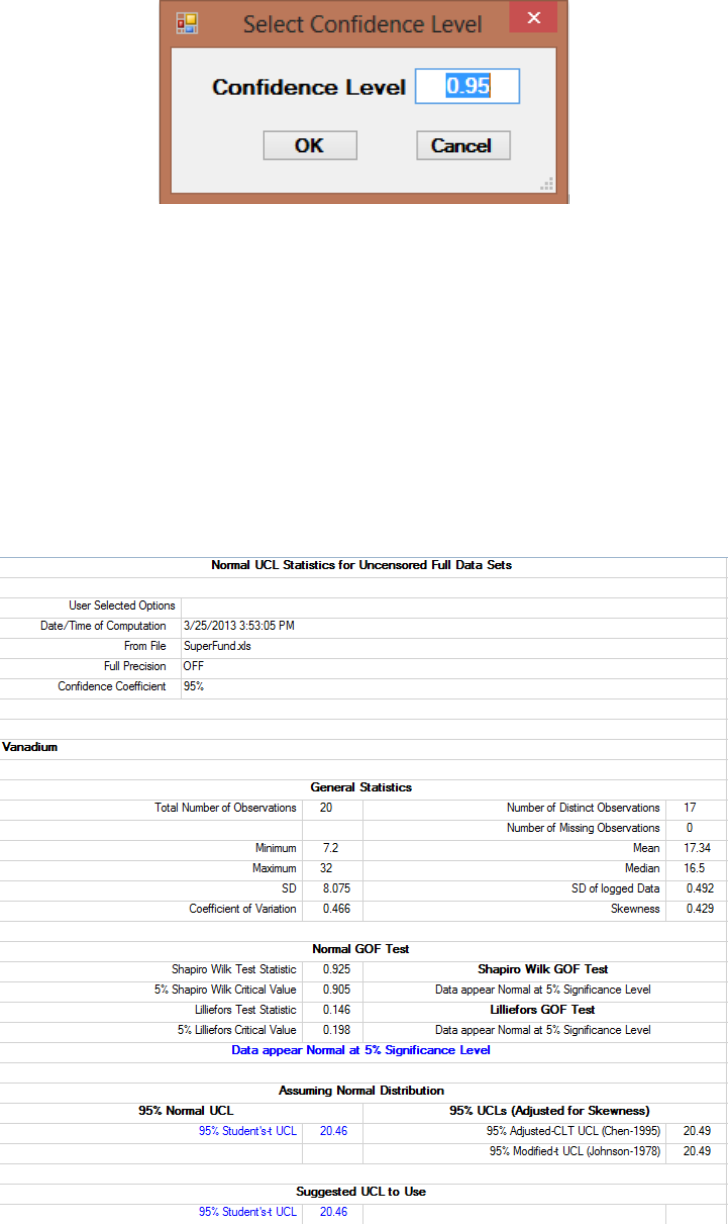

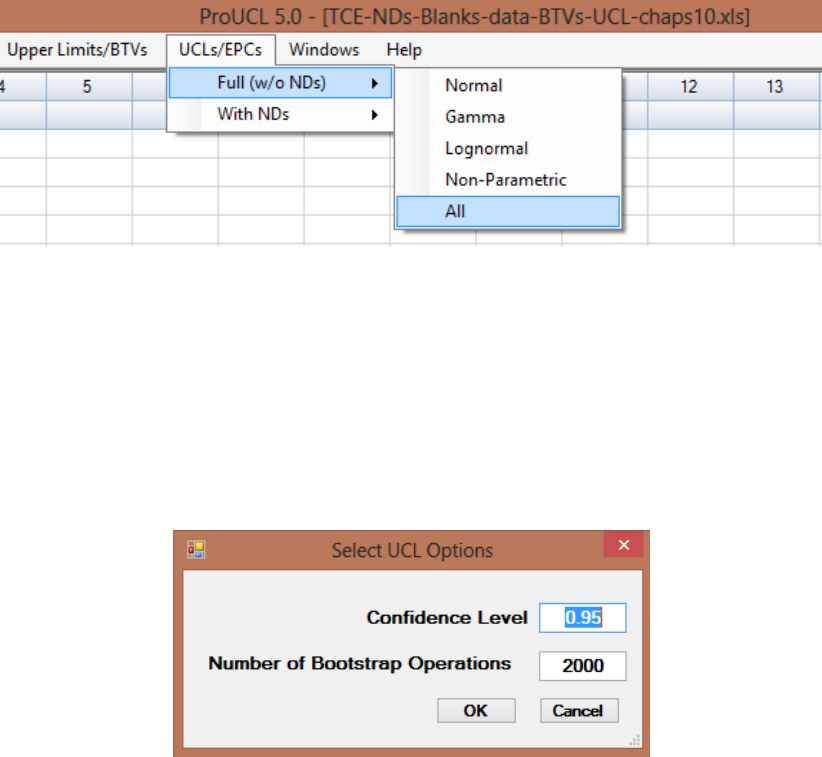

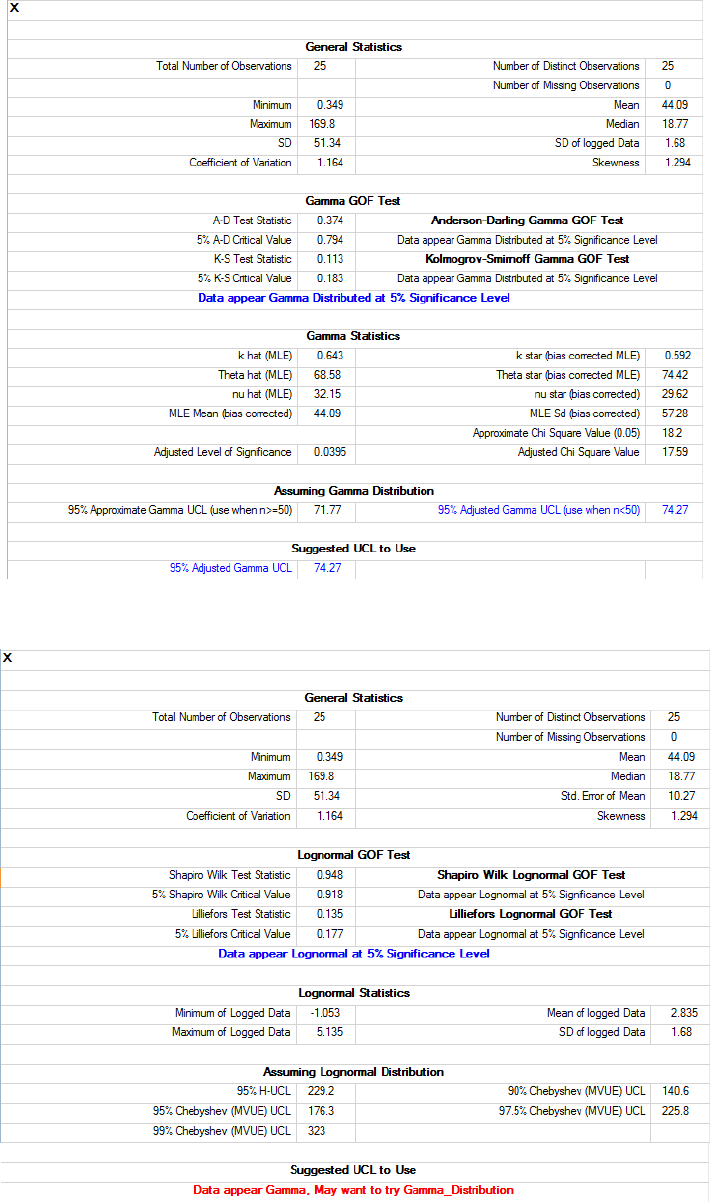

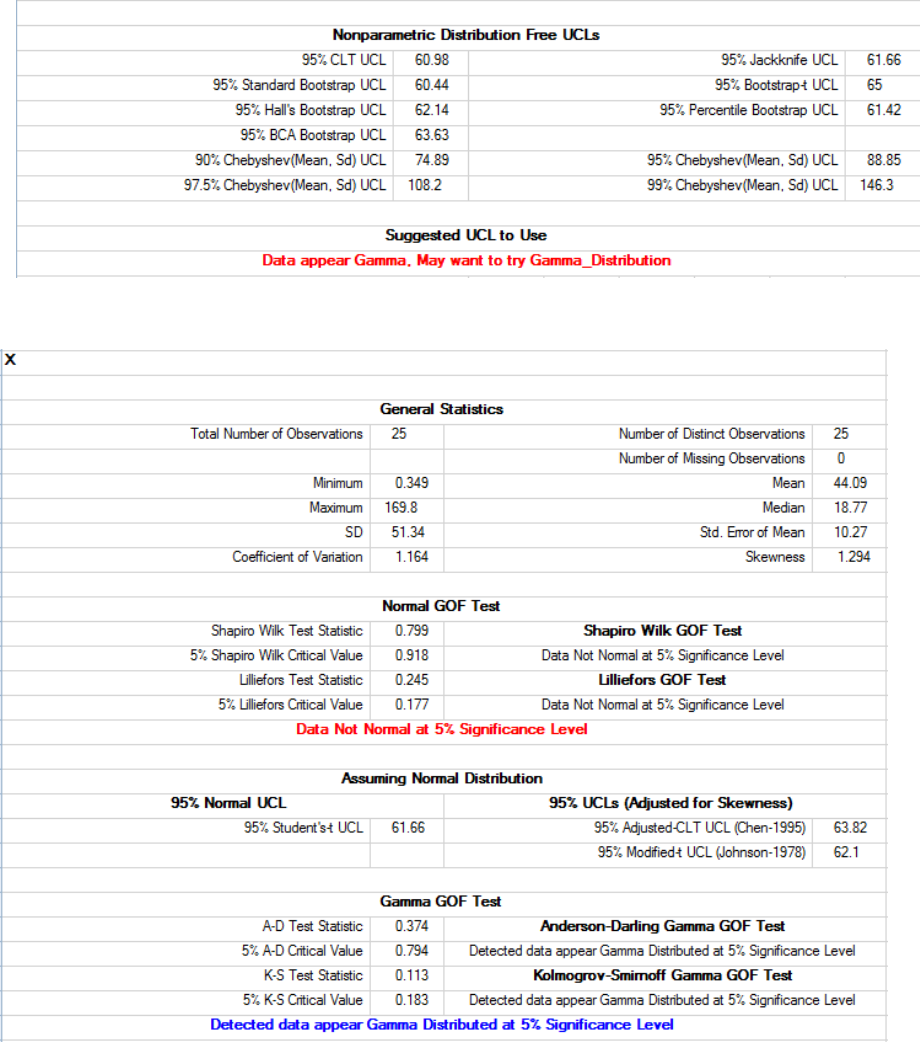

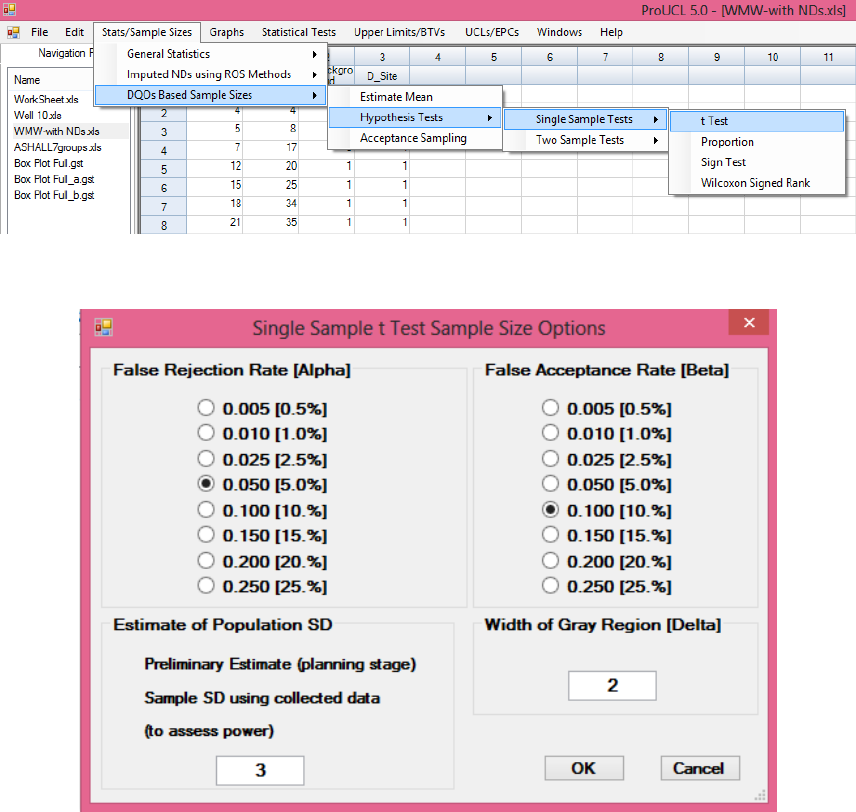

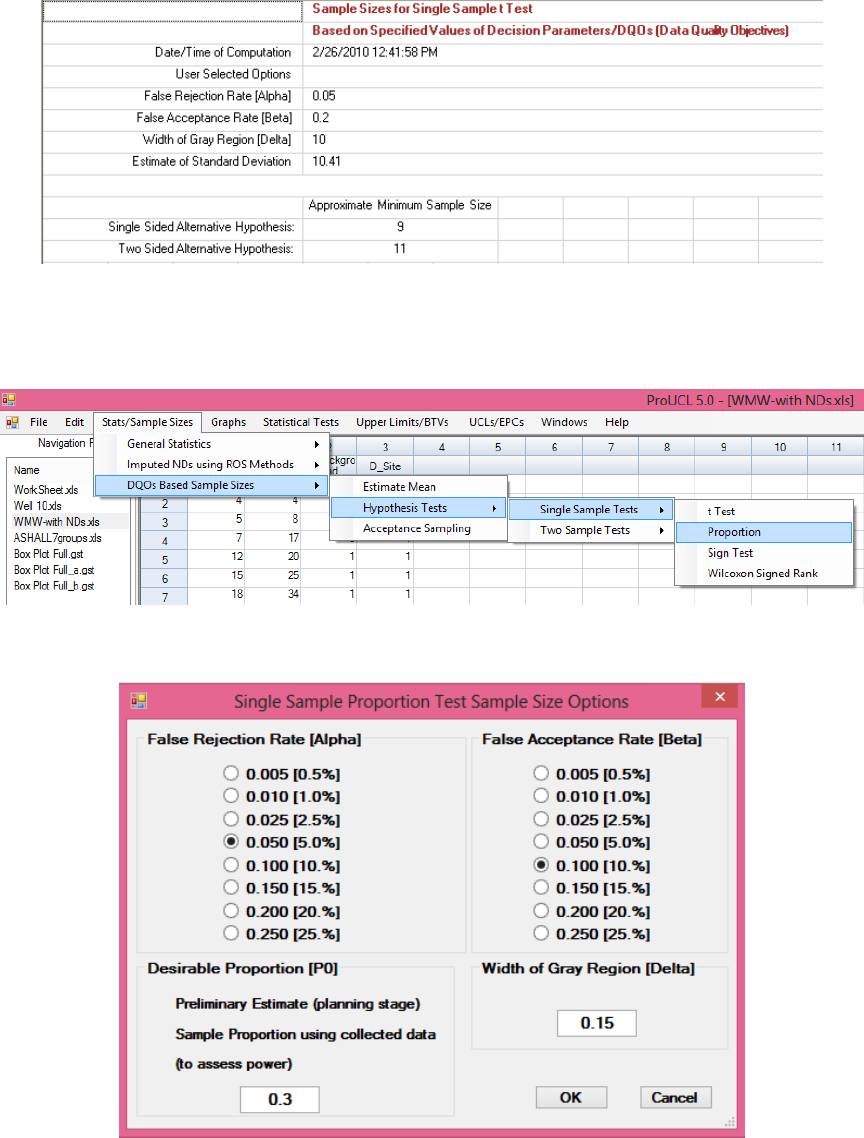

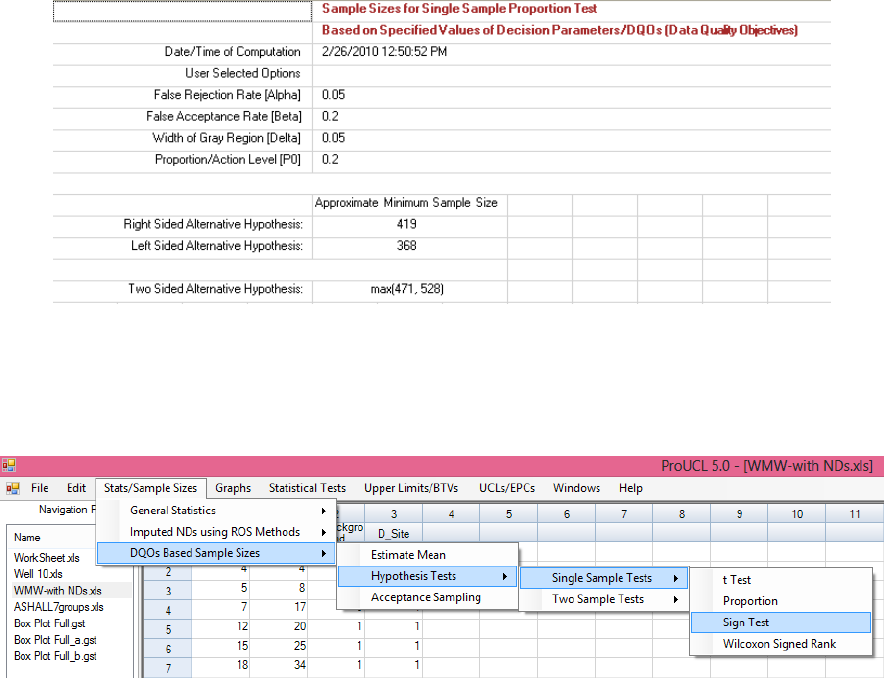

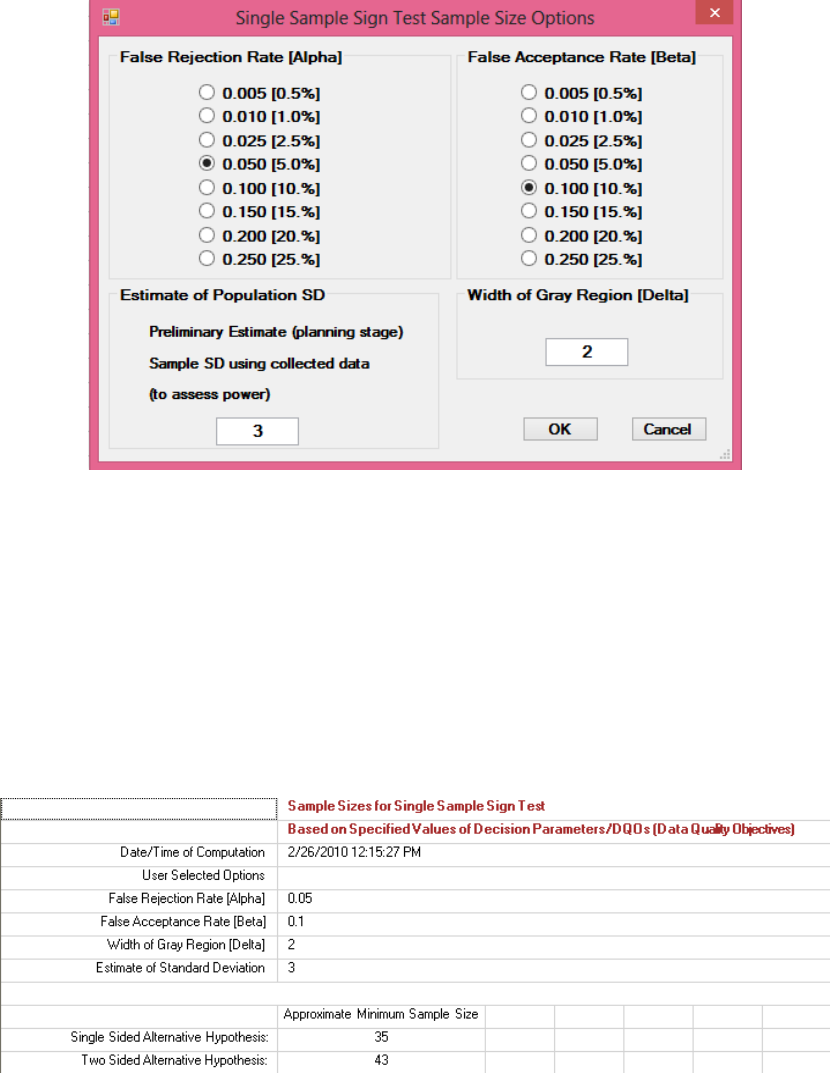

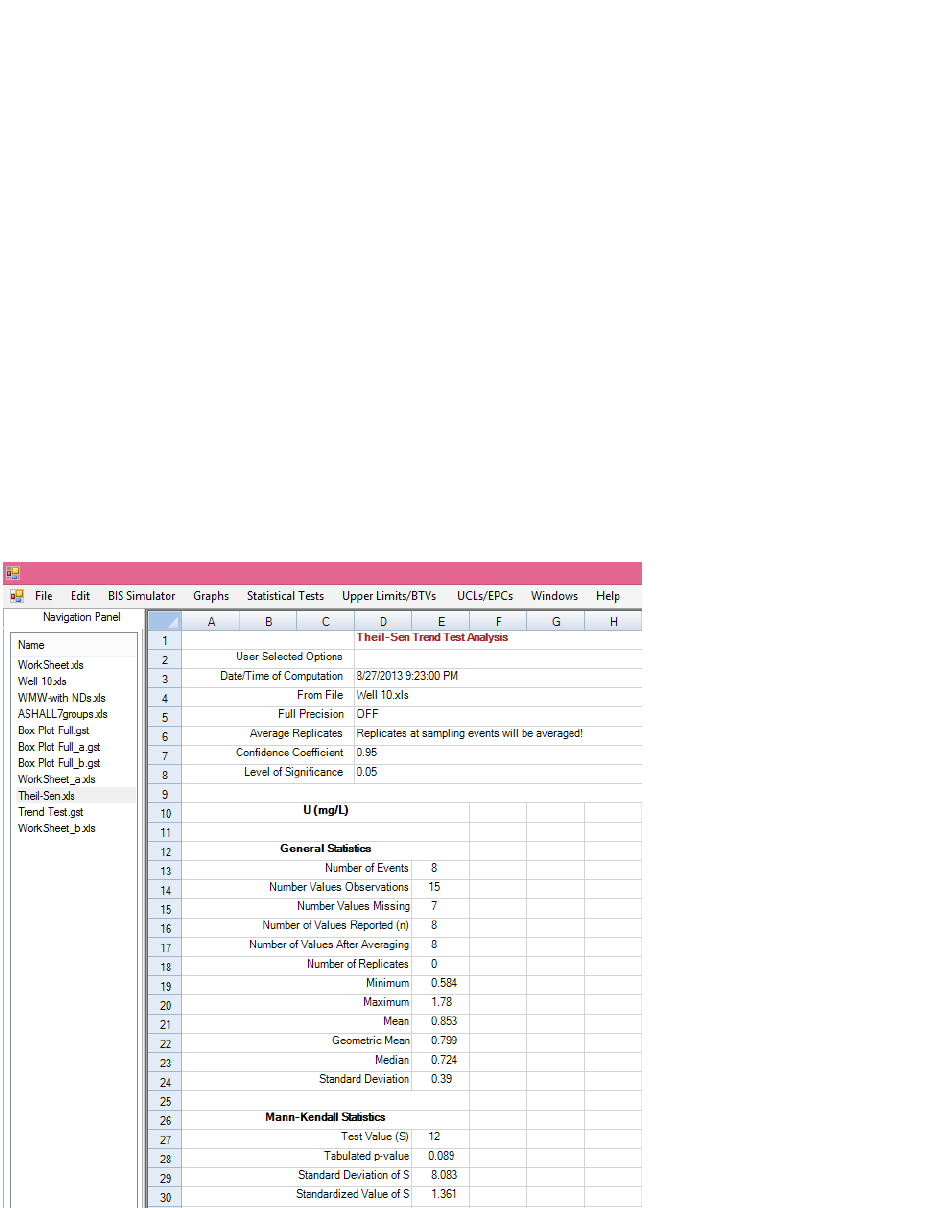

important to compute reliable UCLs and other decision making statistics of practical merit. In an effort to